If you run AI‑driven CRM segmentation in a regulated environment, “move fast” without privacy engineering is a liability. In 2024, the global average cost of a data breach climbed again, with healthcare and finance among the highest‑impact sectors, according to the IBM 2024 breach cost trends. The lesson I’ve learned deploying segmentation pipelines in finance, healthcare, and regulated eCommerce is simple: treat PII safety as a product requirement, not a compliance afterthought.

This article shares field‑tested best practices to design, implement, and continuously govern PII‑safe AI workflows for CRM segmentation—down to architecture, controls, and “what can go wrong” playbooks.

1) Groundwork: What the rules actually require (and how that shapes your design)

-

GDPR principles (lawfulness, purpose limitation, minimization, accuracy, storage limitation, integrity/confidentiality, accountability) should drive segmentation scoping and data flows. The UK ICO’s canonical overview of these principles is concise and deployment‑friendly; keep it open during design reviews: ICO guide to the data protection principles (2025).

-

Pseudonymisation is a safeguard, not an exemption. The EDPB Guidelines 01/2025 on pseudonymisation clarify that it reduces, but does not eliminate, GDPR obligations. Use it to lower risk while maintaining linkability where justified.

-

DPIAs are mandatory for high‑risk processing like large‑scale profiling. Use the ICO/EDPB guidance to structure DPIAs and document mitigations before rollout. If residual risk remains high, regulators expect consultation.

-

HIPAA: If you handle PHI in segmentation (e.g., healthcare CRMs), de‑identify whenever possible using HHS‑recognized methods—Safe Harbor or Expert Determination. See the HHS HIPAA de‑identification overview. Apply the “minimum necessary” standard and role‑based access.

-

PCI DSS: If any card data touches your CRM or activation layer, comply with masking and crypto requirements. The PCI Council’s quick reference is clear on unreadable PAN at rest, strong crypto in transit, masking, MFA, and logging; start with the PCI DSS Quick Reference Guide and the full PCI SSC document library.

Practical translation: design for minimization and purpose binding first; add pseudonymisation/de‑identification where necessary; and force access control, encryption, and auditability across the entire pipeline.

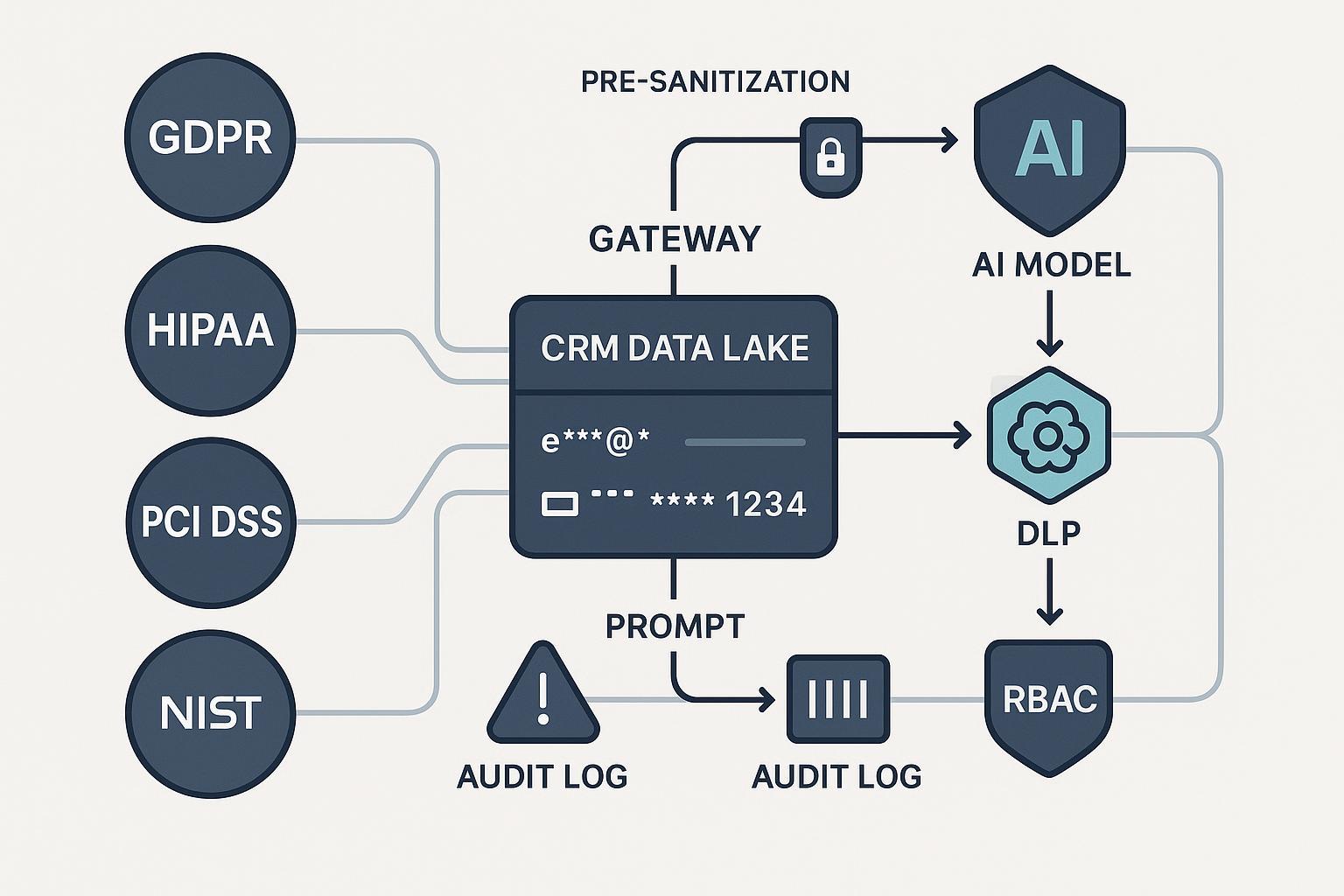

2) Reference architecture: a layered, audit‑ready pipeline

At a glance, your segmentation flow should look like this:

[Sources/CRM] --(RBAC + TLS)--> [Ingest Queue]

| |

v v

[Consent/Legal Basis Check] --> [DLP/PII Classifier] --(mask/tokenize)--> [Feature Store]

|

v

[AI Segmentation Service]

| ^ |

(prompt pre-filter) | (post-output DLP)

v

[Policy Tagger]

|

v

[Activation Destinations]

|

v

[Immutable Audit Log]

Why this layering works:

- It aligns with the NIST AI lifecycle. Map risks and controls using the NIST AI Risk Management Framework (AI RMF) and select concrete safeguards (access, audit, crypto, privacy) from SP 800‑53.

- It preempts LLM‑specific leakage modes. OWASP flags sensitive information disclosure and prompt injection among top risks; design with pre/prompt/post filters per the OWASP Top 10 for LLMs (2023‑2025).

- It puts DLP/PII detection in the data path. Cloud‑native patterns show how to detect and de‑identify PII inline; see Google’s Sensitive Data Protection overview and their de‑identification architecture.

3) Implementation playbook: step‑by‑step controls that actually hold up

- Data scoping and minimization

- Inventory attributes used for segmentation. Remove direct identifiers unless indispensable; replace with tokens or hashes.

- Bind datasets to purposes. Enforce policy tags on tables/columns; prevent downstream joins that expand purpose beyond consent.

- Lawful basis, consent, and records

- Log the lawful basis per audience and attribute. Keep a consent lineage table tied to your audience IDs.

- Refresh DPIAs when segmentation logic, data sources, or purposes materially change.

- Secure transport, storage, and access

- TLS in transit, strong encryption at rest; centralize key management (KMS) and rotate keys.

- Least privilege with RBAC/ABAC; review access quarterly; log every data access and model action. This lines up with SOC 2’s Security/Privacy criteria—see the AICPA SOC 2 resources.

- PII detection and de‑identification in the path

- Insert a DLP proxy before AI. Detect emails, names, phone numbers, national IDs, PAN, and free‑text PII; mask or tokenize before model input. Cloud patterns are well documented in the Google Sensitive Data Protection overview.

- For text, combine dictionary/regex with ML‑based detectors. For images/audio (if any), quarantine and route to media‑aware DLP or avoid entirely.

- Prompt‑time controls for LLMs or classifiers

- Pre‑filter prompts/contexts to strip residual identifiers; restrict retrieval to policy‑approved fields.

- Use a gateway with authN/Z, rate limiting, and request sanitization to reduce injection and data exfiltration risks; see the Kong LLM security playbook.

- Apply output scanning/redaction before storage, logging, or activation; OWASP’s LLM Top 10 documentation details these mitigations.

- Privacy‑enhancing techniques (PETs) when appropriate

- Differential privacy for aggregated counts or segment thresholds; tune epsilon with business owners and test utility. For background, see the 2023 differential privacy overview.

- Federated learning to keep data in region/tenant; consider secure aggregation and, if needed, DP on updates.

- Synthetic data for experimentation to avoid exposing raw PII; validate against membership leakage.

- Output security and activation

- Tag segmentation outputs with policy metadata (purpose, region, expiration). Enforce masking in dashboards and activation endpoints.

- Store outputs and logs in append‑only/immutable form with retention aligned to regulation; avoid over‑collection of logs containing PII.

- Monitoring, incidents, and continuous improvement

- Define privacy KPIs: % prompts sanitized, PII leakage detections/week, DPIA currency, access review completion, mean time to revoke access, model drift alerts.

- Run tabletop exercises for privacy incidents quarterly. Align with NIST AI RMF Govern/Manage and ISO/IEC 27701’s PIMS practices.

Useful cloud patterns to reference as you implement:

- Microsoft’s security plan for LLM apps covers identity, secrets, and content safety boundaries: Microsoft Learn – Security plan for LLM applications.

- AWS patterns for protecting sensitive data in RAG and ingestion pipelines include filtering and quarantine: AWS Bedrock RAG protection and AWS ingestion filtering mechanisms.

4) Five failure modes I see most—and how to prevent or fix them

- Prompt or output leaks PII

- Signal: model responses echo emails, phone numbers, or names.

- Prevention: strict pre‑filtering, retrieval scoping, output scanning/redaction, and gateway controls; see OWASP LLM Sensitive Information Disclosure.

- Fix: roll back model context loaders, increase redaction coverage, and purge contaminated logs.

- Over‑broad logging captures PII

- Signal: debug logs store raw identifiers or free text.

- Prevention: structured logging with field‑level redaction; split PII logs and lock down access; limit retention.

- Fix: rotate keys, purge or re‑encrypt logs, and add redaction middleware.

- Cross‑border drift breaks transfer rules

- Signal: data suddenly lands in non‑approved regions or with non‑certified processors.

- Prevention: restrict regions, require DPF certification for U.S. transfers or use SCCs with a TIA; monitor subprocessors. See the EDPB 2024 DPF first review report.

- Fix: suspend flows, switch to DPF‑certified importers, document supplementary measures, and update records of processing.

- Scope creep beyond consent

- Signal: segments combine attributes for purposes users didn’t opt into.

- Prevention: enforce purpose tags; block joins across purposes; require legal sign‑off for new uses. The ICO’s principles guide is a good checklist during change control.

- Fix: revert deployments, update notices/consents, and document DPIA amendments.

- Model inversion or reconstruction risks

- Signal: attackers can infer original attributes from outputs or gradients.

- Prevention: limit feature sets; prefer aggregate outputs; consider DP and federated learning; monitor for membership inference.

- Fix: retrain with stronger regularization/DP, reduce granularity of segments, and quarantine exposed features.

5) Output handling and activation in regulated stacks

- Mask sensitive fields at display time and enforce column‑level security in BI tools and activation connectors.

- For any cardholder data, follow PCI display masking (first 6 + last 4) and key management rules per the PCI DSS quick guide.

- Keep an immutable audit log of segmentation runs, parameters, and recipients; align retention with purpose limitation and storage limitation principles.

6) Governance that lasts: cadence, owners, and evidence

- Assign named owners for data sources, models, prompts, DLP rules, and activation endpoints.

- Quarterly: access reviews, consent lineage sampling, DPIA validation, tabletop privacy incident drills, subprocessor monitoring (DPF/SCCs/TIAs).

- Annually: refresh privacy risk register using the NIST AI RMF and align your PIMS to ISO/IEC 27701; keep SOC 2 reports current and remediate exceptions.

7) Vendor and tool due diligence checklist (use before onboarding)

Request and evaluate:

- Certifications and attestations: ISO/IEC 27001 and 27701, recent SOC 2 Type II including Privacy. Start with the AICPA SOC 2 overview.

- GDPR contracting: DPA with SCCs as needed; subprocessor list and change rights; DPIA support.

- Data residency and flow diagrams: supported regions; commitment to store/process in‑region.

- Tenant/model isolation: measures preventing cross‑tenant leakage; optional isolated models per customer.

- PII protection capabilities: input/output DLP, masking/tokenization, encryption at rest/in transit, key ownership model.

- Auditability: immutable audit log for data access and AI operations; configurable retention.

- Incident response: notification SLAs, cooperation, RCA commitments; evidence of tabletop tests.

- Cloud control mapping: CSA CAIQ/CCM or equivalent answers for cloud services.

8) Toolbox: platforms and when to use them

- WarpDriven: AI‑first ERP/CRM platform for eCommerce and supply‑chain‑centric organizations that need multi‑channel data unification and AI‑assisted operations. In regulated stacks, pair with dedicated DLP/redaction services and a consent lineage system to keep segmentation purpose‑bound. Disclosure: WarpDriven is our product.

- Salesforce (Data Cloud, Shield, Privacy Center): deep governance, encryption, and event monitoring options; well‑documented security patterns for regulated industries. See the Salesforce Security Implementation Guide.

- SAP Customer Data Cloud: strong identity/consent tooling; often chosen by enterprises standardizing on SAP for regulated operations.

- HubSpot with Sensitive Data features: pragmatic fit for mid‑market teams needing GDPR‑aligned controls. See HubSpot Sensitive Data.

How to choose pragmatically:

- Need unified eCommerce/ERP + CRM ops and AI automation under one roof? Consider platforms oriented to commerce/supply chains (e.g., WarpDriven) with DLP/consent integrations.

- Heavily regulated enterprise with complex governance/audit? Salesforce/SAP stacks typically offer mature controls and documentation.

- Mid‑market B2B with simpler segmentation and clear GDPR scope? HubSpot Sensitive Data can be sufficient with cloud DLP at the edges.

For additional pattern inspiration on privacy‑first CRM, I also like the practitioner perspective in the Petronella Tech privacy‑first AI CRM article (2025).

9) Replicable segmentation example (consent‑bound and PII‑safe)

Context: You want to create a “High‑Intent Repeat Buyers” segment for lifecycle marketing without exposing PII to the AI model.

Workflow:

- CRM hub (e.g., WarpDriven) exports recent purchase events and loyalty flags limited to purpose‑approved attributes (hashed user ID, product category, recency/frequency/monetary scores, region tags).

- A DLP proxy (e.g., a cloud‑native PII de‑identification service) scans free‑text fields and applies masking/tokenization before AI inference, following patterns in the Google de‑identification architecture.

- The AI segmentation service runs with prompt pre‑filters restricting retrieval to approved features; outputs are scanned by post‑processing DLP to ensure no identifiers appear.

- Segments are written back with policy tags (purpose, region, expiry). Activation endpoints enforce display masking and region routing.

- An immutable audit log records run parameters, data sources, and activation recipients for DPIA evidence.

Result: You get actionable segments while keeping PII shielded from the model and downstream logs, with a clean audit trail.

10) Quick audit worksheet (apply this week)

- Do we have a current DPIA for segmentation (updated after any material change)?

- Are all segmentation attributes tied to a documented lawful basis and purpose tag?

- Is there a consent lineage table linked to audience IDs and regions?

- Are transport (TLS) and storage encryption enabled with centralized KMS and key rotation?

- Do we block joins across different purposes by policy enforcement?

- Is a DLP proxy intercepting model inputs and outputs for PII masking/tokenization?

- Are prompt contexts and retrieval layers restricted to approved attributes only?

- Are activity and access logs immutable, redacted, and retained per policy (not over‑collected)?

- Do access reviews run at least quarterly, with revocations logged?

- Are cross‑border data transfers constrained to approved regions and DPF/SCCs with TIAs documented?

- Have we run a tabletop privacy incident exercise in the last quarter and captured RCAs?

- Do vendors provide ISO 27001/27701, SOC 2 Type II (Privacy), and CSA CAIQ mappings?

- Is there a documented incident response SLA and evidence of recent drills?

- Are PETs (DP/federation/synthetic data) evaluated for high‑risk use cases with utility tests?

11) Keep your playbook current

Regulatory expectations and AI risk patterns are evolving. Pseudonymisation guidance was just refreshed in 2025 by the EDPB; see the EDPB 2025 pseudonymisation guidelines. For AI application security, keep an eye on the OWASP LLM Top 10. For cross‑border transfers, watch the EDPB’s reports and statements such as the 2024 DPF first review. When in doubt, run a DPIA refresh and get legal/compliance review before expanding segmentation scope.

Further reading

- GDPR principles and accountability: ICO guide to the data protection principles

- HIPAA de‑identification and minimum necessary: HHS HIPAA privacy rules overview

- PCI display masking and crypto: PCI DSS Quick Reference Guide

- AI lifecycle governance: NIST AI RMF

- LLM privacy risks and mitigations: OWASP Top 10 for LLMs

- Cloud DLP patterns: Google Sensitive Data Protection overview

- Gateway hardening for LLMs: Kong LLM security playbook

- PETs in regulated AI workflows: Alation’s PHI‑safe AI workflow primer

- Consent‑first CRM perspective: Petronella Tech privacy‑first AI CRM