Part I — Why “LLM-ready” taxonomies matter

If you’ve ever asked an LLM to “show weekly conversion rate by category and campaign” and received five different SQLs for the same metric, you’ve felt the pain of ambiguous data. The fix isn’t a bigger model—it’s better structure. An LLM-ready taxonomy makes product catalogs, user behavior, and metrics unambiguous so natural-language analytics becomes trustworthy.

In practice, that means:

- Your catalog has stable IDs and consistent hierarchies that map to external standards (so meaning travels across channels and tools).

- Your events carry the right item-level attributes every time (so metrics don’t drift when contexts change).

- Your semantic layer defines metrics once, in code, and everything—dashboards, notebooks, and LLMs—queries through it.

- Your governance enforces contracts, versioning, and validation before data reaches humans or models.

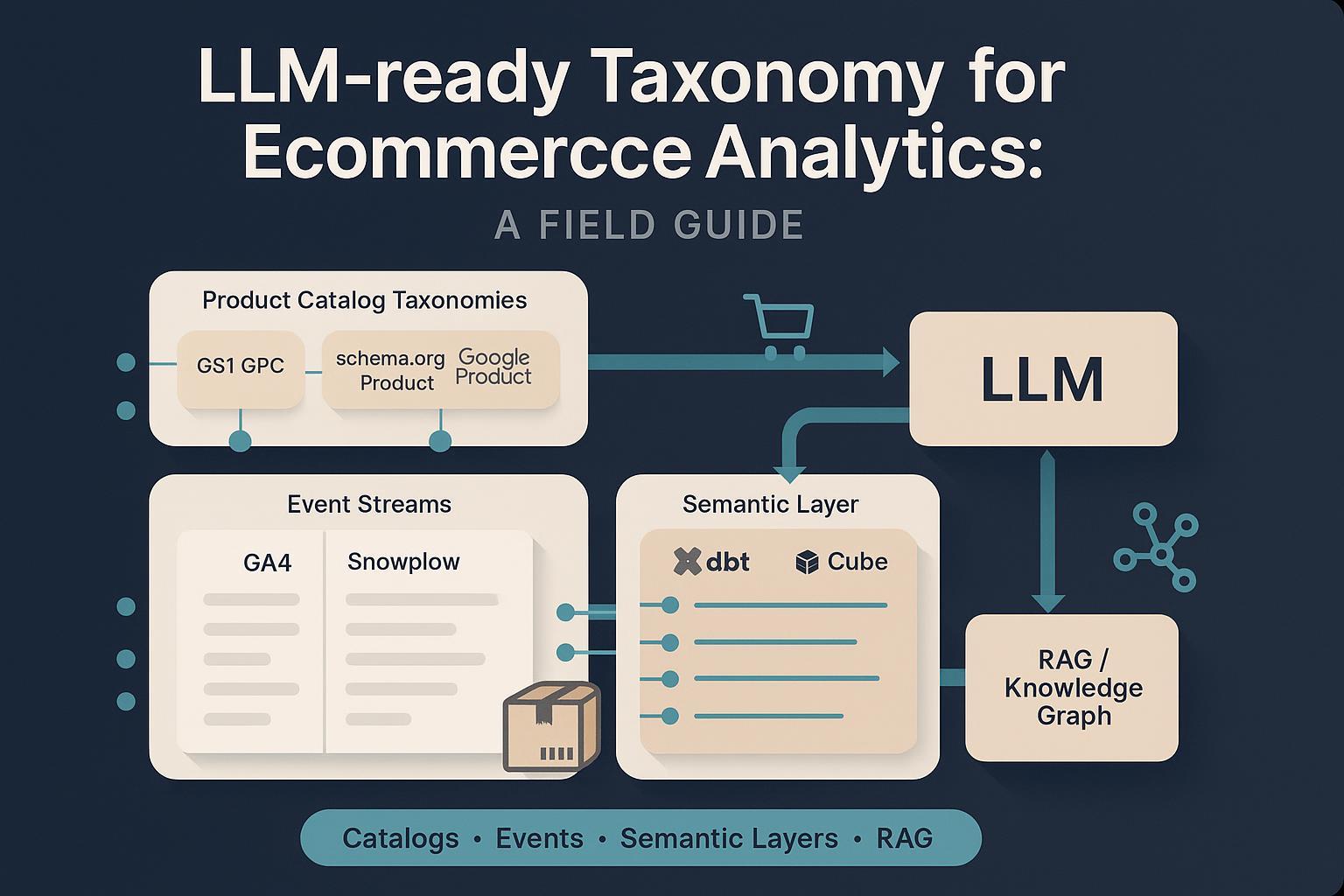

This guide stitches together proven standards—GS1 GPC, schema.org Product, Google Product Taxonomy—with analytics event taxonomies (GA4, Snowplow), semantic layers (dbt, Cube), and RAG/knowledge-graph patterns to give LLMs a safe, reliable interface. Where relevant, we anchor guidance to canonical documentation such as the GS1 overview of Global Product Classification and browser tools for hierarchies and attributes, the schema.org Product definitions and releases, Google Merchant Center’s product category rules and downloadable taxonomy, GA4’s ecommerce events and item-scoped parameters, Snowplow’s ecommerce tracking and Iglu schemas, the dbt Semantic Layer/MetricFlow references, and Cube’s semantic modeling and AI API materials.

Part II — Catalog foundations: standards and crosswalks

An LLM can’t guess what “Handbags” means in your warehouse if you call it “Bags—Women’s” in ads and “HB” in your BI tool. You need a stable spine with crosswalks.

1) GS1 GPC as the neutral backbone

GS1’s Global Product Classification (GPC) is a four-level hierarchy—Segment > Family > Class > Brick—where each Brick has a code and attributes that describe product characteristics. The official GS1 overview explains the purpose and structure, and you can explore categories via the GS1 Navigator or the GPC Browser. Aligning at least to Brick-level where relevant makes your taxonomy interoperable with trading partners and GDSN.

- Learn more in the GS1 “What is GPC” overview (2024) and browse hierarchies via the GS1 Navigator.

Practical advice:

- Don’t replace your internal categories with GPC; map them. Keep stable internal category IDs and maintain a crosswalk table to GPC Brick codes.

- Use GPC Brick Attributes to inform canonical product attributes (e.g., material, size system) where applicable.

2) schema.org Product for web and knowledge consistency

Use schema.org to standardize identifiers and commercial attributes on your website. The Product type includes identifiers (sku, gtin8/12/13/14), brand, category, and Offer details (price, currency, availability). The schema.org releases page documents ongoing updates; always check the latest version when modeling variants and relationships.

- See the schema.org Product type in the latest release and the release notes:

When you expose a consistent JSON-LD block on PDPs, you create a machine-readable truth that both search engines and your own LLM pipelines can ingest.

Example (JSON-LD) with identifiers, variant linkage, and offers:

{

"@context": "https://schema.org/",

"@type": "Product",

"name": "Executive Anvil",

"image": [

"https://example.com/photos/1x1/photo.jpg",

"https://example.com/photos/4x3/photo.jpg",

"https://example.com/photos/16x9/photo.jpg"

],

"description": "Sleek executive anvil for all your business needs.",

"sku": "0446310786",

"gtin13": "0123456789012",

"brand": {"@type": "Brand", "name": "Acme"},

"category": "Tools > Anvils",

"color": "Black",

"size": "Medium",

"offers": {

"@type": "Offer",

"url": "https://example.com/executive-anvil",

"priceCurrency": "USD",

"price": "119.99",

"availability": "https://schema.org/InStock",

"itemCondition": "https://schema.org/NewCondition"

},

"isVariantOf": {"@type": "Product", "name": "Anvil"}

}

Tips that LLMs love:

- Always include one of GTIN fields and a stable SKU; include brand and canonical URL.

- Use isVariantOf/hasVariant to link variants to a parent model (e.g., color/size variants).

3) Google Product Taxonomy (GPT) for feeds and ads

Google expects one most-specific google_product_category per product in Merchant Center feeds, while product_type carries your own hierarchy for campaign organization. The official Help docs explain usage, and you can download the taxonomy TXT with IDs to ensure accurate mapping.

Notes:

- Do not confuse schema.org Product category with google_product_category—they’re separate systems. Follow Merchant Center updates via the announcements hub when changes may affect feeds.

4) Optional: IAB Content Taxonomy for media context

If your use case spans content or contextual advertising (e.g., marketplace editorial, UGC, retail media), the IAB Tech Lab Content Taxonomy standardizes content topics and brand safety flags. Use IDs and version markers consistently (e.g., OpenRTB cattax fields).

5) The crosswalk model (catalog → standards → analytics)

Create a crosswalk layer that maps your internal category IDs to:

- GS1 GPC Brick code (where relevant)

- Google Product Taxonomy category ID

- Optional IAB content category ID (for media/editorial)

Keep this mapping table versioned. Your semantic layer will later join it to events for consistent rollups.

A minimal crosswalk table schema:

CREATE TABLE taxonomy_crosswalk (

internal_category_id STRING PRIMARY KEY,

internal_category_path STRING, -- e.g., Apparel > Women > Handbags

gpc_brick_code STRING, -- e.g., 10005678

google_category_id INT64, -- from taxonomy-with-ids.txt

iab_content_cat_id STRING, -- optional

valid_from DATE,

valid_to DATE

);

Part III — Event taxonomy for behavior: GA4 vs. Snowplow

Good catalogs without good events lead to “unknown variant,” “null price,” or “which list was this from?” dead ends. Your event taxonomy must carry item-level attributes and promotional/list context.

GA4 recommended ecommerce events

Google documents a standard set of ecommerce events and item-scoped parameters. At a minimum, implement view_item_list, select_item, add_to_cart, begin_checkout, add_payment_info, add_shipping_info, purchase, refund, and promotion events, with the items[] array fully populated. See Google’s GA4 ecommerce setup and item-scoped parameter docs for canonical definitions and validation steps.

- GA4 — Ecommerce setup and events

- GA4 — Event parameters reference

- GA4 — Item-scoped ecommerce parameters

- GA4 — Validate ecommerce implementation

Example purchase payload (gtag.js style), annotated:

gtag('event', 'purchase', {

transaction_id: 'T_12345',

affiliation: 'Online Store',

value: 72.05,

currency: 'USD',

tax: 4.90,

shipping: 5.99,

coupon: 'SUMMER_SALE',

items: [

{

item_id: 'SKU_12345', // stable SKU or GTIN

item_name: 'Canvas Tote',

item_brand: 'ACME',

item_category: 'Accessories',

item_category2: 'Bags',

item_category3: 'Totes',

item_variant: 'Blue',

price: 15.25,

quantity: 1,

item_list_id: 'home_rec_1',

item_list_name: 'Homepage Recommendations'

},

{

item_id: 'SKU_12346',

item_name: 'Leather Satchel',

item_brand: 'ACME',

item_category: 'Accessories',

item_category2: 'Bags',

item_category3: 'Satchels',

item_variant: 'Brown',

price: 20.00,

quantity: 2

}

]

});

Implementation tips:

- Use item_category through item_category5 to carry the full internal category path. Keep IDs in parallel custom parameters if you want stable joins.

- Always send price and quantity at item-level; otherwise AOV, GMV, and margin calculations drift.

- Be explicit with promotion/list context using item_list_id/item_list_name and promotion parameters.

Privacy note: GA4 prohibits sending PII such as email or phone. Google’s policy and data redaction features are described in the 2024 GA4 documentation; use pseudonymous user_id and configure retention/consent appropriately.

- Google Analytics — PII prohibition & redaction (2024)

- GA4 — IP handling and retention controls (2024)

Snowplow ecommerce tracking

If you need more flexible schemas and warehouse-ready data with rich contexts, Snowplow’s self-describing events and context entities are a strong option. The web tracker’s ecommerce plugin represents actions (e.g., add_to_cart, purchase) with attached product/cart contexts, all validated against Iglu schemas.

- Snowplow — Ecommerce tracking (official docs)

- Example Iglu schemas for ecommerce domain:

Example custom self-describing event with contexts:

window.snowplow('trackSelfDescribingEvent', {

event: {

schema: 'iglu:com.acme/viewed_product/jsonschema/2-0-0',

data: { productId: 'ASO01043', category: 'Dresses', brand: 'ACME', price: 49.95 }

},

context: [

{

schema: 'iglu:com.acme/cart/jsonschema/1-0-0',

data: { cartId: 'cart123', totalValue: 150.00 }

}

]

});

Modeling tip: Use Snowplow’s ecommerce dbt package to transform raw events into standardized tables for analysis.

Part IV — The semantic layer: define metrics once

LLMs should never write SQL directly against raw fact tables. A semantic layer defines metrics and dimensions once, enforces joins/filters, and exposes a safe API for humans and models.

Two common approaches: the dbt Semantic Layer (MetricFlow) and the Cube universal semantic layer.

dbt Semantic Layer (MetricFlow)

dbt’s Semantic Layer lets you declare semantic models (entities, dimensions, measures) and derive metrics (ratios, cumulative windows). The official documentation covers architecture, semantic models, and metric types, including limitations and API access.

- dbt — Semantic Layer overview

- dbt — SL architecture

- dbt — Semantic models

- dbt — Metrics overview (ratios, derived)

- dbt — Cumulative/rolling metrics

- dbt — SL FAQs and constraints

Example: defining base measures and common ecommerce metrics (YAML):

semantic_models:

- name: orders

model: ref('fct_orders')

entities:

- name: order_id

type: primary

- name: user_id

type: foreign

defaults:

agg_time_dimension: order_date

measures:

- name: revenue

agg: sum

expr: total_amount

- name: orders

agg: count_distinct

expr: order_id

dimensions:

- name: channel

type: categorical

- name: device

type: categorical

- name: category_id

type: categorical

metrics:

- name: gmv

type: simple

type_params:

measure: revenue

- name: aov

type: ratio

type_params:

numerator: revenue

denominator: orders

- name: conversion_rate

type: ratio

type_params:

numerator: orders

denominator: sessions

- name: refund_rate

type: ratio

type_params:

numerator: refunded_orders

denominator: orders

- name: wau_rolling_7

type: cumulative

type_params:

measure:

name: active_users

fill_nulls_with: 0

join_to_timespine: true

cumulative_type_params:

window: 7 days

Notes for LLM safety:

- Require a primary time dimension for measures; define default grains.

- Model dimensional conformance (e.g., category crosswalk joins) inside the semantic layer.

- Include human-readable descriptions in YAML; index these into your RAG store.

Cube Universal Semantic Layer

Cube models data as cubes with measures, dimensions, and joins, and compiles to optimized SQL across warehouses. It exposes SQL, REST, and GraphQL APIs, plus an AI API for natural-language queries.

Example Cube model (JavaScript-style config):

cube('Orders', {

sql: `SELECT * FROM analytics.fct_orders`,

measures: {

revenue: { sql: 'total_amount', type: 'sum' },

orders: { sql: 'order_id', type: 'countDistinct' },

aov: { sql: 'total_amount', type: 'number', drillMembers: ['order_id'],

format: 'currency', title: 'Average Order Value',

// Computed in query layer: revenue / orders

}

},

dimensions: {

order_id: { sql: 'order_id', type: 'string', primaryKey: true },

order_date: { sql: 'order_date', type: 'time' },

channel: { sql: 'channel', type: 'string' },

device: { sql: 'device', type: 'string' },

category_id: { sql: 'category_id', type: 'string' }

},

joins: {

Categories: {

relationship: 'belongsTo',

sql: `${CUBE}.category_id = ${Categories}.internal_category_id`

}

}

});

Tools/Stack (neutral)

Disclosure: WarpDriven is our product.

- ERP/Commerce Ops (upstream of analytics): WarpDriven — AI-first ERP/operations platform that unifies catalog, orders, inventory, logistics; can feed governed data into your semantic layer. Peers: Oracle NetSuite, SAP S/4HANA Public Cloud, Shopify.

- Semantic layer: dbt Semantic Layer; Cube.

- Event tracking: GA4; Snowplow.

(We keep stack mentions neutral and focus on conceptual fit; choose tools based on data volume, governance needs, and team skills.)

Part V — Governance: data contracts, CI tests, lineage, versioning

A taxonomy that changes silently will break LLMs. Governance keeps structure stable and transparent.

Data contracts

A data contract is a declarative spec that defines what a dataset or event guarantees: schema, constraints, SLAs, and ownership. ThoughtWorks has advocated templates for platform DX and contract tests for pipelines in recent years.

- ThoughtWorks — Data mesh platform DX and contracts (2024)

- ThoughtWorks — Testing data pipelines with contract tests

- ThoughtWorks — Technology Radar Vol. 31 (2024) mentions templates

Example data contract (YAML) for an orders dataset:

version: 1

owner: analytics-platform@acme.com

name: analytics.fct_orders

sla:

freshness_minutes: 60

availability_percent: 99.5

schema:

primary_key: order_id

columns:

- name: order_id

type: STRING

constraints: [not_null, unique]

- name: user_id

type: STRING

constraints: [not_null]

pii: false

- name: order_date

type: TIMESTAMP

constraints: [not_null]

- name: total_amount

type: NUMERIC

constraints: [not_null]

- name: currency

type: STRING

constraints: [not_null]

compatibility:

on_breaking_change: create_new_version

deprecation_period_days: 90

lineage:

upstream: [raw.orders_stream, dim_users]

downstream: [semantic.orders_model]

validation:

checks:

- type: schema_match

- type: freshness

- type: completeness

- type: range

column: total_amount

min: 0

privacy:

prohibited_fields: [email, phone]

pseudonymous_ids: [user_id]

Validation in CI/CD

Automate checks in your pipeline so violations block deploys:

- dbt tests for not_null, unique, accepted_values on models.

- Great Expectations or Soda for distribution checks, freshness, and custom constraints.

- OpenLineage for lineage capture and impact analysis.

References:

Versioning and rollback

- Version your taxonomy (catalog trees, crosswalk tables) and contracts; store history with valid_from/valid_to.

- Adopt a compatibility policy (e.g., only additive changes are non-breaking; removals require new versions and backfills).

- Maintain rollback scripts for the semantic layer to revert metric definitions if a change causes drift.

Part VI — LLM integration: RAG, knowledge graphs, and safe prompting

Even with a semantic layer, LLMs need context to choose the right metrics and dimensions and to respect business rules. Retrieval-Augmented Generation (RAG) and, for multi-hop logic, GraphRAG help LLMs ground answers.

Retrieval-Augmented Generation (RAG)

RAG retrieves relevant documents—metric definitions, taxonomy glossaries, data contracts—and feeds them into the model. IBM’s 2024 overview explains how grounding improves factuality and governance in enterprise setups, including deployment considerations like access control.

Practical RAG setup for analytics:

- Index: semantic layer YAML (with descriptions), your metric dictionary, taxonomy crosswalk documentation, and event schemas.

- Chunking: keep semantic model + metrics in small, coherent chunks (e.g., per metric, per dimension) to avoid context overload.

- Metadata: tag chunks by domain (catalog, events, metrics), version, and owner for filtering.

Knowledge graphs and GraphRAG

Knowledge graphs encode entities (Products, Categories, Brands, Promotions, Channels) and relationships (belongs_to, promoted_in, supplied_by). GraphRAG combines graph traversal with vector search for multi-hop, explainable retrieval—useful for questions like “Which promotions drove margin lift for Women’s Handbags in EU marketplaces last month?”

Design notes:

- Build the graph from deterministic sources (catalog DB, promotion system) to ensure traceability; reserve LLM extraction for weak signals and human-review them.

- Return citations and subgraph paths alongside answers to increase trust.

Safe prompting and system rules

- System message: “Only query via the semantic layer. Never write SQL against raw tables.”

- Tools: Provide the LLM a “metrics browser” tool to discover metrics/dimensions; route natural language to metric queries, not ad hoc SQL.

- Guardrails: Require the model to return the metric definition names it used (e.g., conversion_rate, aov) for auditing.

Part VII — 80/20 quick-start: the minimum viable, LLM-ready stack

You can ship a working, reliable v1 in two sprints by focusing on a minimal but complete path from catalog to LLM.

A. Catalog MVP

- Internal category tree: 3–4 levels deep with stable IDs.

- Crosswalk table to Google Product Taxonomy ID; optional GPC Brick for critical categories.

- schema.org Product markup on PDPs: sku, gtin, brand, category path, offers (price, currency, availability), isVariantOf.

B. Event MVP (GA4 or Snowplow)

- GA4: Implement view_item_list, select_item, add_to_cart, begin_checkout, purchase, refund. Populate items[] fully with item_id, item_brand, item_category1–5, item_variant, price, quantity, item_list_id/name.

- Use GA4’s item-scoped parameter definitions to ensure parity across events.

- Snowplow: Use the ecommerce plugin and attach product/cart contexts; validate against Iglu schemas.

C. Semantic layer MVP

- Choose one (dbt SL or Cube) and define 10–15 canonical metrics:

- GMV (sum revenue), AOV (revenue/orders), conversion_rate (orders/sessions), refund_rate, add_to_cart_rate, checkout_start_rate, repeat_purchase_rate, inventory_turns (if available), promotion_lift.

- Conform dimensions: channel, device, category (via crosswalk), campaign, promotion, geo, time.

D. Governance MVP

- Data contract for orders and events tables; CI checks (schema, not_null, freshness, PII guards).

- Versioned crosswalk table with valid_from/valid_to.

E. LLM MVP

- RAG index containing: semantic YAML, metric dictionary, taxonomy crosswalk docs, GA4/Snowplow schema notes.

- System prompt enforcing “use semantic layer only.”

- Evaluation set: 20–30 canonical questions; target ≤5% correction rate after iteration.

Part VIII — Advanced extensions

- Snowplow deep contexts: attach promotions, impressions, and checkout step contexts; use Snowplow’s dbt models for richer path analysis.

- Knowledge graph: Build a graph of Products–Categories–Brands–Promotions–Channels and expose graph queries beside vector search for GraphRAG.

- Multi-catalog mapping: Maintain crosswalks among GS1 GPC Brick, internal categories, Google Product Taxonomy, and IAB content categories for retail media.

- Multilingual aliasing: Store canonical category IDs with localized labels and synonyms; let LLMs map user queries in different languages to the same IDs.

- Subscriptions/returns: Extend your event taxonomy with refund and return reasons; model cohort metrics (retention, churn) in the semantic layer.

Part IX — Anti-patterns and failure modes (and what to do instead)

- Taxonomy sprawl: Marketing renames categories without ID stability.

- Fix: Use stable internal_category_id keys; treat labels as translatable attributes. Enforce changes through versioned crosswalks.

- Missing item attributes in events: No price/quantity or variant = wrong GMV and AOV.

- Fix: Add validation that purchase/add_to_cart events must have price and quantity; block deployments if missing.

- Overloading custom parameters: 50 different meanings for one “custom_dimension_1”.

- Fix: Maintain a governed parameter registry; prefer standard fields (GA4 items[]). Document any custom fields with owners and tests.

- Letting LLMs hit raw tables.

- Fix: Force all queries through the semantic layer; expose only approved metrics/dimensions to the LLM tool.

- Evolving taxonomy without versioning/backfills.

- Fix: Use valid_from/valid_to on crosswalks and backfill historical mappings; maintain deprecation periods.

- PII leakage in analytics payloads.

- Fix: Follow GA4’s PII prohibition and IP/retention controls; send only pseudonymous user_id; implement redaction and consent mode as needed.

- “Category by label” joins.

- Fix: Join on IDs, not names. Carry both ID and label in your event payloads or enrich in the warehouse.

Part X — Validation checklist and resources

Use this checklist as a preflight before inviting LLMs to your data.

Catalog & crosswalks

- Internal category tree has stable IDs and 3–4 levels for 80/20 coverage.

- Crosswalk table joins internal IDs to Google Product Taxonomy ID; optional GPC Brick for priority categories.

- PDPs expose schema.org Product JSON-LD with sku, gtin, brand, category, offers, isVariantOf.

Events

- GA4 events implemented with complete items[] (id, brand, category1–5, variant, price, quantity, list context), or Snowplow ecommerce with product/cart contexts validated by Iglu schemas.

Semantic layer

- 10–15 canonical metrics defined; dimensions conformed and documented; default time grains set.

Governance

- Data contracts exist for core datasets; CI checks (schema, freshness, completeness, PII guards) pass in pipelines.

LLM enablement

- RAG index contains semantic YAML, metric dictionary, taxonomy glossary, and event schemas; metadata includes version and owner.

- For advanced cases, a knowledge graph stores product-category-promotion relationships; GraphRAG retrieves subgraphs with citations.

Merchant feeds & standards

- Merchant Center feeds set google_product_category by ID and maintain product_type for internal hierarchy; taxonomy updates monitored.

- Optional GS1 mapping for partner alignment; Brick-level attributes inform canonical attributes.

Appendix — Sample schemas you can copy

- GA4 item-level parameter registry (warehouse side)

CREATE TABLE staging.ga4_items (

event_timestamp TIMESTAMP,

event_name STRING,

transaction_id STRING,

item_id STRING,

item_name STRING,

item_brand STRING,

item_category STRING,

item_category2 STRING,

item_category3 STRING,

item_category4 STRING,

item_category5 STRING,

item_variant STRING,

price NUMERIC,

quantity INT64,

item_list_id STRING,

item_list_name STRING

);

- dbt test examples (schema.yml)

version: 2

models:

- name: fct_orders

columns:

- name: order_id

tests: [not_null, unique]

- name: user_id

tests: [not_null]

- name: total_amount

tests:

- not_null

- relationships:

to: ref('dim_currency')

field: currency

- SodaCL checks (soda.yml)

checks for fct_orders:

- row_count > 0

- freshness(order_date) < 2h

- missing_count(order_id) = 0

- invalid_percent(total_amount) < 0.1 %

- schema:

warn:

when schema changes: any

- Minimal knowledge index (for RAG)

{

"doc_id": "metric:aov",

"title": "Average Order Value",

"body": "AOV = revenue / orders. revenue is sum(total_amount) on fct_orders; orders is count_distinct(order_id).",

"metadata": {"domain": "metrics", "version": "1.3", "owner": "analytics"}

}

- Crosswalk enrichment SQL (semantic layer staging)

SELECT

o.order_id,

o.order_date,

o.total_amount,

cx.google_category_id,

cx.internal_category_id,

cx.internal_category_path

FROM analytics.fct_orders o

LEFT JOIN analytics.taxonomy_crosswalk cx

ON o.category_id = cx.internal_category_id

AND o.order_date BETWEEN cx.valid_from AND COALESCE(cx.valid_to, DATE '9999-12-31');

Closing thoughts

In my experience, teams that treat taxonomy as a product—complete with contracts, versions, and documentation—unlock reliable LLM analytics much faster than those who chase prompts. Give your models a consistent world to reason about, and they’ll return the favor with consistent answers.