Executive summary

- False declines are a large, under-measured drag on growth. Industry summaries estimate that merchants reject around 6% of ecommerce orders and that 2–10% of those declines are actually good customers, so conversion and LTV leak even when fraud appears “under control.” See the concise overview in Fiserv’s Carat false decline explainer (2024–2025) and TrustDecision’s false decline definition.

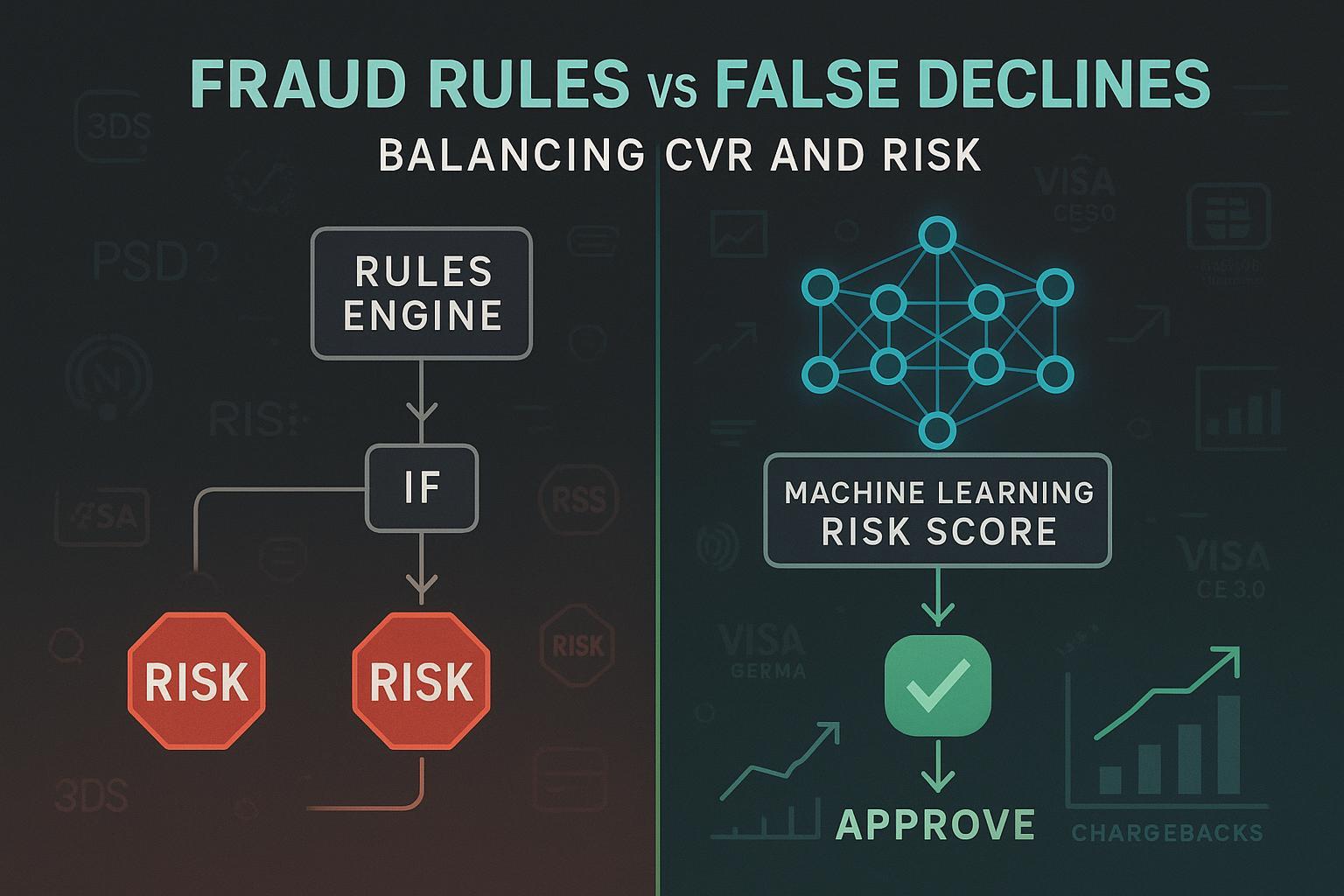

- Static rules struggle with precision/recall and require heavy tuning. ML risk scoring paired with orchestration (3DS, tokens, exemptions) generally cuts false positives and review load while holding fraud/chargebacks flat or down—trends reflected across 2023–2025 vendor case studies and merchant programs.

- 2025 levers matter: EMV 3DS 2.3.1 feature upgrades, PSD2 TRA limits (0.13%/€100, 0.06%/€250, 0.01%/€500), Visa CE 3.0 evidence rules, and network tokenization all improve approvals and reduce friction when applied selectively. See the official parameters in the EBA RTS and 2024 Q&A and EMVCo’s 2023 Annual Report (published 2024).

- Network tokens are a proven approval lever. Visa reported 10B+ tokens and a global average +6 bps authorization lift in 2024, expanding to 12.6B tokens by early 2025; issuers leveraging token data paired with ML have reported sizable lifts. See Visa 2024 tokenization update and the Visa 2025 Investor Day deck.

Who this is for

- Payments leaders and fraud/risk directors balancing approval rate, CVR, and chargebacks

- Enterprise product managers orchestrating 3DS, tokens, and risk step-up flows

- Finance leaders setting guardrails for fraud losses and dispute ratios

Why this matters now

- Chargeback volumes remain elevated into 2025, with friendly fraud a persistent share, pressuring dispute ratios and opex. See context in Mastercard’s 2025 discussion of chargeback costs.

The stakes in numbers

- False declines and hidden costs: Industry summaries estimate merchants reject ~6% of online orders and that a meaningful slice of declines are legitimate (2–10%), driving avoidable churn and lower LTV; see the concise framing by Carat/Fiserv on false declines alongside TrustDecision’s definition. A widely cited directional figure places 2023 global false-decline losses around $308B—presented by Nuvei’s overview; treat as directional given secondary sourcing.

- Program maturity matters: The Merchant Risk Council’s 2025 global report indicates distributions of false positive rates differ by maturity—more members report ≤2% false positives than non-members—underscoring the ROI of disciplined risk programs. See the MRC 2025 Global Fraud & Payments Report (summary page).

- Chargebacks trending: Industry briefs highlight rising dispute volumes and evolving rule programs in 2024–2025, reinforcing the need for proactive defenses and evidence readiness. For a succinct primer, see Chargeflow’s 2024 overview of reason code changes.

Rules vs. ML: what actually changes in CVR and risk

Top-line takeaways

- Rules-based controls are deterministic and transparent but brittle. They tend to over-block in ambiguous cases, inflating false declines and manual reviews.

- ML risk scoring improves precision and catch-rate at scale, enabling smarter step-up and selective friction. The payoff shows up as higher approvals/CVR and lower review opex while holding fraud losses within guardrails.

Comparison at a glance

| Dimension | Rules-based controls | ML risk-based decisioning |
|---|---|---|
| Detection precision/recall | High precision on narrow patterns; recall drops as fraud evolves | Learns complex signals; better precision/recall balance on evolving patterns |
| Adaptability and drift | Requires frequent rule maintenance and QA | Adapts through retraining on fresh labels; monitor for drift and refresh regularly |
| CVR impact | Sensitive to tightness; false positives creep up under conservative strategies | Typically higher approvals via fewer false positives and smart step-up |
| False declines | Often elevated in edge cases; hard to tune without sacrificing catch rate | Directionally lower false positives than static rules in 2023–2025 merchant case studies |
| Chargeback control | Strong on known patterns; misses novel attacks | Strong on known patterns and better at surfacing novel ones, given rich features |
| Operational load | Rule-tuning cycles; larger review queues | Lower review rates when thresholds and step-up are optimized |
| Peak/Black Friday scale | Long rule chains can slow decisions; risk of blunt tightening | Scores in milliseconds; thresholds can flex for peak load |
| Compliance orchestration | Manual routing to 3DS/exemptions; risk of over-challenging | Automated step-up: 3DS only for mid/high risk or soft declines |
| Data requirements | Basic device/velocity checks | Deep device/IP, behavioral, issuer-feedback, and label data |
| Typical manual review rate | Can exceed 10% in immature programs | Top programs target ≤2–5%; some report ~1–2% |

- Benchmarks and context: Industry analyses show top automation-led programs keeping manual review below 3–5%, with some ML-first stacks closer to ~1–2%, whereas broad merchant averages can be much higher. See the discussion and data slices in Sift’s 2024–2025 payment fraud insights.

2025 levers that move approval rate without inviting fraud

- EMV 3DS 2.3.1: lower friction, stronger auth
  - What’s new: Support for Secure Payment Confirmation (SPC) using FIDO authenticators, improvements to out-of-band flows, and updated SDKs, all aimed at fewer, smoother challenges and more issuer trust. See EMVCo’s 2023 Annual Report (published 2024) and the EMV 3DS overview.
  - How to use it: Reserve challenges for medium/high risk or when issuers soft-decline; keep low-risk flows frictionless. Bind devices where possible to reduce abandonment.

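- PSD2 TRA exemptions (EEA/UK): use every bps of headroom
  - Thresholds: The PSP’s reference fraud rate sets the maximum transaction amount that can be exempted: at or below 0.13%, up to €100; at or below 0.06%, up to €250; at or below 0.01%, up to €500, per the EBA Regulatory Technical Standards and 2024 Q&A. The rate is value-based (fraudulent remote transaction value divided by all remote transaction value) and calculated on a rolling quarterly basis. UK guidance aligns closely; see the FCA’s updated approach notes (Nov 2024) in the FCA guidance document.
  - How to use it: Track fraud rates continuously; route eligible low-risk transactions under TRA while keeping headroom for peak periods. Overages have consequences: under the RTS, a PSP whose monitored rate exceeds the reference rate for two consecutive quarters must stop applying the exemption until the rate has been back within the threshold for a full quarter.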

- Visa Compelling Evidence 3.0 (CE 3.0): friendly-fraud defense
  - What qualifies: At least two prior, undisputed transactions on the same payment credential, made 120–365 days before the disputed one, that each share at least two data elements with it (IP address, device ID/fingerprint, shipping address, or customer account ID), at least one of which must be IP address or device ID/fingerprint. See Stripe’s CE 3.0 documentation and a practitioner-friendly breakdown by Chargebacks911.
  - Outcome: When the criteria are met, liability can shift back to the issuer, materially improving representment success on Visa reason code 10.4 (Other Fraud: Card-Absent Environment).

- Network tokenization: approvals and stale-card fixes
  - Evidence: Visa reported 10B+ tokens and a global average +6 bps authorization lift in 2024, expanding to 12.6B tokens by early 2025, with regional showcases (e.g., LAC) and issuer ML leveraging token data for substantial gains. See Visa’s 2024 tokenization release, the 2025 Investor Day deck, and the LAC token milestone. Mastercard also signals majority tokenized commerce on the horizon; see the 2024 investment presentation.
  - How to use it: Prefer tokens for stored credentials and recurring; couple with account updater; measure lift by BIN, region, and use case.
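
To make “measure lift by BIN, region, and use case” concrete, here is a minimal Python sketch that compares approval rates for network-token versus PAN attempts within each cohort. The record fields (`cohort`, `credential`, `approved`) are hypothetical. A naive comparison like this is confounded because tokenized traffic skews toward returning customers, so treat it as a monitoring view and confirm lift with randomized A/B tests.

```python
from collections import defaultdict


def approval_rates(attempts):
    """Approval rate per (cohort, credential) pair.

    Each attempt is a dict with 'cohort' (e.g. a BIN-region or use-case label),
    'credential' ('token' or 'pan'), and 'approved' (bool). Field names are hypothetical.
    """
    tallies = defaultdict(lambda: [0, 0])  # (cohort, credential) -> [approved, total]
    for a in attempts:
        t = tallies[(a["cohort"], a["credential"])]
        t[0] += int(a["approved"])
        t[1] += 1
    return {key: approved / total for key, (approved, total) in tallies.items()}


def token_lift_bps(attempts):
    """Token minus PAN approval rate per cohort, in basis points (1 bp = 0.01 pp).

    Cohorts that lack either token or PAN traffic are skipped.
    """
    rates = approval_rates(attempts)
    lift = {}
    for cohort in {c for c, _ in rates}:
        token = rates.get((cohort, "token"))
        pan = rates.get((cohort, "pan"))
        if token is not None and pan is not None:
            lift[cohort] = round((token - pan) * 10_000, 1)
    return lift
```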

Tactical playbooks

A) Minimal, high-signal rule set (and a sunset cadence)

- Start conservative but not blunt: velocity caps, clear CVV/AVS mismatches paired with high-risk IP/device anomalies, impossible travel, and combinations of disposable email, new device, and high AOV.

- Quarterly hygiene: deprecate low-yield rules, consolidate duplicates, and A/B-test any new adds against approval-rate and chargeback guardrails.
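
A quarterly sunset review can start from an offline backtest. The Python sketch below encodes three of the rules above as predicates over a hypothetical order record and flags rules that either never fire or fire often with low precision. Field names and the `min_hits`/`min_precision` cutoffs are illustrative assumptions. Labels for blocked orders are usually unavailable, so this assumes outcomes come from reviewed orders or a small holdout of rule hits that were allowed through.

```python
from typing import Callable, Dict, List

Order = dict  # hypothetical order record with the fields used below

RULES: Dict[str, Callable[[Order], bool]] = {
    "velocity_cap": lambda o: o["orders_last_hour"] > 5,
    "cvv_mismatch_risky_ip": lambda o: (not o["cvv_match"]) and o["ip_risk"] >= 0.8,
    "disposable_email_new_device_high_aov": lambda o: (
        o["disposable_email"] and o["new_device"] and o["amount"] >= 500
    ),
}


def rule_yield(rules: Dict[str, Callable[[Order], bool]], labeled_orders: List[Order]):
    """Per rule: (hits, precision) over orders with known fraud labels."""
    stats = {}
    for name, predicate in rules.items():
        hits = [o for o in labeled_orders if predicate(o)]
        fraud = sum(1 for o in hits if o["is_fraud"])
        stats[name] = (len(hits), fraud / len(hits) if hits else 0.0)
    return stats


def sunset_candidates(stats, min_hits=30, min_precision=0.25):
    """Rules that never fire, or fire often enough to judge but with low precision."""
    return sorted(
        name
        for name, (hits, precision) in stats.items()
        if hits == 0 or (hits >= min_hits and precision < min_precision)
    )
```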

B) ML thresholding and routing

- Segment by AOV and shopper type: returning, tokenized, device-bound customers should enjoy higher pass rates at a given score than brand-new, non-tokenized, cross-border attempts.

- Use three zones: pass (frictionless), step-up (3DS or manual review), block. Tune cutoffs per region and BIN cohort.

- Feedback loops: auto-ingest issuer responses, disputes, CE 3.0 outcomes; retrain monthly or as data volume permits.
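
The three-zone policy can be sketched as a lookup of per-segment cutoffs. This assumes a calibrated score in [0, 1] where higher means riskier; the segment names, cutoff values, and high-AOV adjustment are hypothetical placeholders to be tuned per region and BIN cohort, not recommended settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cutoffs:
    step_up: float  # scores at or above this go to 3DS or manual review
    block: float    # scores at or above this are declined


# Hypothetical cutoffs: trusted segments get a wider frictionless band.
CUTOFFS = {
    "returning_tokenized": Cutoffs(step_up=0.70, block=0.95),
    "returning": Cutoffs(step_up=0.55, block=0.90),
    "new_cross_border": Cutoffs(step_up=0.30, block=0.80),
}
DEFAULT_CUTOFFS = Cutoffs(step_up=0.40, block=0.85)
HIGH_AOV = 500.0       # hypothetical high-AOV boundary in order currency
HIGH_AOV_SHIFT = 0.10  # hypothetical tightening applied to high-AOV orders


def segment(order: dict) -> str:
    """Assign a shopper segment from hypothetical order flags."""
    if order["returning"] and order["tokenized"]:
        return "returning_tokenized"
    if order["returning"]:
        return "returning"
    if order["cross_border"]:
        return "new_cross_border"
    return "default"


def decide(score: float, order: dict) -> str:
    """Map a risk score in [0, 1] (higher = riskier) to 'pass', 'step_up', or 'block'."""
    c = CUTOFFS.get(segment(order), DEFAULT_CUTOFFS)
    shift = HIGH_AOV_SHIFT if order["amount"] >= HIGH_AOV else 0.0
    if score >= c.block - shift:
        return "block"
    if score >= c.step_up - shift:
        return "step_up"
    return "pass"
```

For example, the same score of 0.6 passes for a returning, tokenized shopper but is stepped up for a new cross-border one, which is the asymmetry the segmentation bullet describes.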

C) Friction orchestration (3DS and exemptions)

- Default: frictionless for low risk; step-up only for mid/high risk or soft declines. In EEA/UK, apply TRA exemptions wherever headroom allows before challenging.

- Device binding and SPC: where supported, reduce abandonment during 3DS by offering bank-app/OOB paths and FIDO-based confirmations.
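
A minimal sketch of this default orchestration order, assuming the risk model has already assigned a `pass`/`step_up`/`block` zone and that your acquirer reports its value-based TRA fraud rate (under the RTS, the PSP computes this on a rolling quarterly basis). The action labels are hypothetical, the EUR bands mirror the RTS thresholds, and the UK applies the FCA’s version of the rules, so verify UK limits separately.

```python
SCA_REGIONS = {"EEA", "UK"}

# (reference fraud-rate ceiling, max exemptible amount in EUR), strictest band first,
# mirroring the PSD2 RTS thresholds for remote card payments.
TRA_BANDS = [(0.0001, 500.0), (0.0006, 250.0), (0.0013, 100.0)]


def tra_limit_eur(psp_fraud_rate: float) -> float:
    """Largest amount the TRA exemption can cover at a given value-based fraud rate."""
    for max_rate, max_amount in TRA_BANDS:
        if psp_fraud_rate <= max_rate:
            return max_amount
    return 0.0  # above 0.13%: TRA is not available


def choose_auth_path(zone: str, region: str, amount_eur: float,
                     psp_fraud_rate: float, soft_declined: bool = False) -> str:
    """Return a hypothetical action label for an order in a given risk zone."""
    if zone == "block":
        return "decline"
    if zone == "step_up" or soft_declined:
        return "3ds_challenge"  # or manual review for step_up, per your policy
    # zone == "pass": keep the flow frictionless where the rules allow it
    if region not in SCA_REGIONS:
        return "authorize_without_3ds"
    if amount_eur <= tra_limit_eur(psp_fraud_rate):
        return "authorize_with_tra_exemption"
    return "3ds_no_challenge_requested"  # the issuer still makes the final call
```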

D) Authorization optimization

- Tokens first for stored credentials; enable account updater. Monitor authorization-rate deltas and fraud by token vs PAN.

- Smart retries: for soft declines, retry with optimized parameters (issuer-preferred routing, local acquirers, timing windows); avoid shotgun retries.
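
One way to express “smart, not shotgun” retries is a small planner that retries only soft declines, caps attempts, and moves to an untried route each time. The soft/hard classification, route names, and delay schedule are assumptions; in practice they come from issuer response codes and network retry guidance, and card networks impose their own reattempt limits.

```python
from typing import Optional, Sequence, Tuple


def plan_retry(decline_category: str, attempts_so_far: int,
               routes_tried: Sequence[str], preferred_routes: Sequence[str],
               delays_s: Sequence[int] = (0, 300)) -> Optional[Tuple[str, int]]:
    """Return (route, delay_seconds) for the next retry, or None to stop.

    preferred_routes is ordered best-first (e.g. a local acquirer before a
    cross-border one); delays_s has one entry per allowed retry.
    """
    if decline_category != "soft":
        return None  # hard declines (e.g. stolen or closed card) are never retried
    if attempts_so_far >= len(delays_s):
        return None  # retry budget exhausted
    untried = [r for r in preferred_routes if r not in routes_tried]
    if not untried:
        return None  # repeating an already-declined route is a shotgun retry
    return untried[0], delays_s[attempts_so_far]
```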

E) Manual review strategy

- Cap manual review at 3–5% of orders (often lower with mature ML). Prioritize high AOV, cross-border, and borderline scores.

- Equip reviewers with device, IP, BIN, historical footprint, delivery risk, and past dispute outcomes. Measure reviewer precision and queue ROI monthly; prune queues that don’t convert.

- Benchmark context and targets are discussed in Sift’s 2025 index on payment fraud.
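
A sketch of enforcing the review cap: rank borderline orders by expected loss (score × amount, which assumes the score is a calibrated fraud probability) and send only what fits within the cap to reviewers. Field names and the 3% default are assumptions; overflow orders would fall back to your default step-up or block policy.

```python
def build_review_queue(candidates, total_orders, max_review_rate=0.03):
    """Split borderline orders into (review queue, overflow) under a review-rate cap.

    candidates: dicts with hypothetical fields 'id', 'amount', and 'score'.
    """
    capacity = int(total_orders * max_review_rate)
    # Highest expected loss first, so reviewer time goes where it saves the most.
    ranked = sorted(candidates, key=lambda o: o["score"] * o["amount"], reverse=True)
    queue = [o["id"] for o in ranked[:capacity]]
    overflow = [o["id"] for o in ranked[capacity:]]
    return queue, overflow
```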

F) CE 3.0 readiness checklist

- Capture and store device ID and IP; maintain consistent descriptor (first six characters stable).

- Maintain at least two prior clean transactions for repeat shoppers to qualify more disputes for CE 3.0. Implementation details are outlined in Stripe’s CE 3.0 docs and Chargebacks911’s overview.
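
The qualifying test itself can be sketched as below. It assumes a stable payment-credential identifier (the hypothetical `card_fingerprint`), exact-match comparison of the four data elements, and the 120–365-day window. Visa’s rules and your PSP’s implementation govern the real matching logic, so treat this as a pre-screen for evidence coverage rather than a final eligibility decision.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Txn:
    day: date
    card_fingerprint: str  # hypothetical stable ID for the payment credential
    ip: Optional[str] = None
    device_id: Optional[str] = None
    shipping_address: Optional[str] = None
    account_id: Optional[str] = None
    disputed: bool = False


CORE_ELEMENTS = ("ip", "device_id", "shipping_address", "account_id")


def matching_elements(a: Txn, b: Txn) -> set:
    """Core data elements present on both transactions with equal values."""
    return {
        f for f in CORE_ELEMENTS
        if getattr(a, f) is not None and getattr(a, f) == getattr(b, f)
    }


def qualifying_priors(disputed: Txn, history: List[Txn]) -> List[Txn]:
    """Prior transactions that fit the CE 3.0 pattern relative to the disputed one."""
    out = []
    for prior in history:
        age_days = (disputed.day - prior.day).days
        if prior.disputed or not 120 <= age_days <= 365:
            continue
        if prior.card_fingerprint != disputed.card_fingerprint:
            continue
        matches = matching_elements(prior, disputed)
        # At least two matches, and at least one must be IP or device ID.
        if len(matches) >= 2 and matches & {"ip", "device_id"}:
            out.append(prior)
    return out


def ce3_prescreen(disputed: Txn, history: List[Txn]) -> bool:
    return len(qualifying_priors(disputed, history)) >= 2
```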

Scenario-based recommendations

By AOV and margin

- Low AOV, high margin (fast fashion, digital content):
  - Bias for frictionless. Use ML scoring with lighter thresholds for returning/tokenized/device-bound customers.
  - Minimize 3DS challenges; reserve them for clear risk signals or issuer soft declines.
  - Lean on tokens to reduce stale-PAN declines and increase issuer trust; see Visa’s tokenization data in the 2024 global update.

- High AOV, tighter margin (electronics, luxury, travel):
  - Tolerate more selective friction. Route mid-risk orders to 3DS or manual review; challenge cross-border orders more often.
  - Consider longer historical windows and more stringent device/IP checks before auto-approving.

By GMV stage

- < $5M GMV (early):
  - Start with a curated rule set and PSP-managed risk. Collect device/IP data now to enable CE 3.0 later.
  - Limit manual review; set simple guardrails (e.g., target <0.9% CNP chargeback ratio; initial approval target by BIN region).

- $5–50M GMV (scaling):
  - Deploy ML scoring; implement review queues; orchestrate step-up via 3DS selectively.
  - Adopt network tokens for stored credentials; measure approval lift per issuer.

- Over $50M GMV (enterprise):
  - Multi-acquirer routing; full model monitoring; issuer data-sharing initiatives; automated TRA and CE 3.0 pipelines.

By region

- EEA/UK (PSD2 SCA):
  - Use TRA exemptions aggressively within thresholds; keep low-risk flows frictionless.
  - Expect issuer variation in challenge tendencies; monitor soft-decline and challenge rates by market. The regulatory thresholds and expectations are detailed in the EBA RTS/Q&A 2024.

- US/CA/LatAm:
  - Prioritize authorization optimization: tokens, account updater, ML scoring, and smart retries.
  - Use 3DS selectively for high-risk or issuer-required scenarios; for differences in issuer behavior between the US and EEA, see Stripe’s 3DS analyses.

By vertical

- Fashion/marketplaces: high repeat rate; maximize tokenization and returning-customer pass bands.

- Electronics/luxury: emphasize device-binding and manual review on borderline cases.

- Travel/ticketing: higher fraud incentives and lead times; challenge more often cross-border; maintain robust CE 3.0 evidence.

- Subscriptions/SaaS: tokens + account updater crucial; monitor involuntary churn vs. fraud catch.

Measurement framework and guardrails

- Track the full funnel: pre-auth risk rejects; auth approvals; 3DS challenge, frictionless, and abandonment; post-auth fraud; chargebacks by reason code; manual review yield; CE 3.0 win rates.

- Guardrails to set by segment: target fraud loss in basis points, maximum chargeback ratio, minimum approval/CVR thresholds.

- Cohort and compare: new vs. returning; tokenized vs. PAN; device-bound vs. new device; domestic vs. cross-border; BIN-level issuer cohorts.

- Review SLAs: maintain fast turnaround for step-up queues to preserve CX during peak.
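
Per-segment guardrail checks can be as simple as the Python sketch below. The field names and thresholds are hypothetical, and chargeback-ratio definitions vary by network and program (for example, which period’s transactions form the denominator), so align the denominator with the monitoring program you report against.

```python
from dataclasses import dataclass


@dataclass
class SegmentPeriod:
    attempts: int          # payment attempts that reached authorization
    approved: int          # authorizations approved
    approved_value: float  # value of approved orders
    fraud_value: float     # value of approved orders later confirmed as fraud
    chargebacks: int       # chargebacks received in the period
    settled_count: int     # settled transactions used as the ratio denominator


@dataclass
class Guardrails:
    max_fraud_bps: float
    max_chargeback_ratio: float
    min_approval_rate: float


def evaluate_segment(p: SegmentPeriod, g: Guardrails):
    """Return (metrics, list of breached guardrail names) for one segment."""
    metrics = {
        "approval_rate": p.approved / p.attempts,
        "fraud_bps": p.fraud_value / p.approved_value * 10_000,
        "chargeback_ratio": p.chargebacks / p.settled_count,
    }
    breaches = []
    if metrics["fraud_bps"] > g.max_fraud_bps:
        breaches.append("fraud_bps")
    if metrics["chargeback_ratio"] > g.max_chargeback_ratio:
        breaches.append("chargeback_ratio")
    if metrics["approval_rate"] < g.min_approval_rate:
        breaches.append("approval_rate")
    return metrics, breaches
```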

Persona checklists

- Risk/Fraud Director
  - Quarterly rule hygiene and sunset plan; model drift dashboard; reviewer precision report.
  - TRA headroom tracker (EEA/UK) and 3DS policy matrix by risk tier.
  - CE 3.0 readiness: device/IP capture rates; returning-shopper clean-history coverage.

- Payments PM
  - A/B tests for tokens vs. PAN, 3DS challenge thresholds, and retry strategies by BIN.
  - Routing experiments across acquirers/regions; step-up latency budgets.

- Finance/CFO
  - Balanced scorecard: approval rate, CVR, fraud loss bps, dispute ratio, review opex, CE 3.0 savings.
  - Budget for tokenization fees vs. measured authorization lift by cohort.

Pitfalls to avoid

- Over-challenging in EEA/UK when TRA headroom exists—wastes conversion. The thresholds and duties are spelled out in the EBA RTS and Q&A 2024.

- Static-rule accretion: complexity grows while signal quality falls. Set a quarterly sunset ritual.

- “Set-and-forget” models—without fresh labels (issuer responses, disputes, CE 3.0 outcomes), thresholds drift and false positives rise.

- Ignoring tokens for stored credentials—missed easy authorization lift evidenced in Visa’s 2024–2025 tokenization disclosures.

FAQ

- Do I need both rules and ML? Yes. Use a minimal, high-signal rule set for compliance and obvious fraud; rely on ML for nuanced decisions and orchestration.

- What review rate should I target? Mature programs typically run 2–5% or less; some ML-first stacks operate around ~1–2%. See the directional benchmarks in Sift’s 2025 index.

- Is 3DS 2.3.1 mandatory? No, but its feature set (SPC, improved OOB flows) reduces friction when you do need to challenge. See EMVCo’s 2023 Annual Report (2024).

- How do I know if a decline is “false”? Measure downstream: shopper returns, successful retries, customer service contacts, and cohort LTV. Calibrate thresholds with A/B tests and reviewer precision audits.
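
A minimal sketch of the downstream measurement, assuming you can link a declined order to the same shopper’s later activity. The fields are hypothetical, and the shares are proxies (some good shoppers never return, and a later clean payment is not proof the original decline was wrong), so use them to compare cohorts and thresholds over time rather than as an exact false-decline rate.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeclinedOrder:
    order_id: str
    days_to_next_successful_payment: Optional[int]  # same shopper later paid; None if never
    later_payment_disputed: bool = False
    contacted_support: bool = False


def false_decline_signals(declines: List[DeclinedOrder], window_days: int = 14) -> dict:
    """Share of declines followed by signs the shopper was likely legitimate."""
    n = len(declines)
    if n == 0:
        return {"paid_later_clean": 0.0, "contacted_support": 0.0}
    # A clean successful payment soon after the decline suggests a good customer.
    paid_clean = sum(
        1 for d in declines
        if d.days_to_next_successful_payment is not None
        and d.days_to_next_successful_payment <= window_days
        and not d.later_payment_disputed
    )
    support = sum(1 for d in declines if d.contacted_support)
    return {"paid_later_clean": paid_clean / n, "contacted_support": support / n}
```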

Bottom line

- If you optimize for conversion without losing the plot on chargebacks, ML-first decisioning with disciplined orchestration (3DS, TRA, CE 3.0, and tokens) is the 2025 operating model. Start by setting guardrails, trimming your rule set, rolling out tokens, and tightening your feedback loops—then let data, not fear, decide where to add friction.