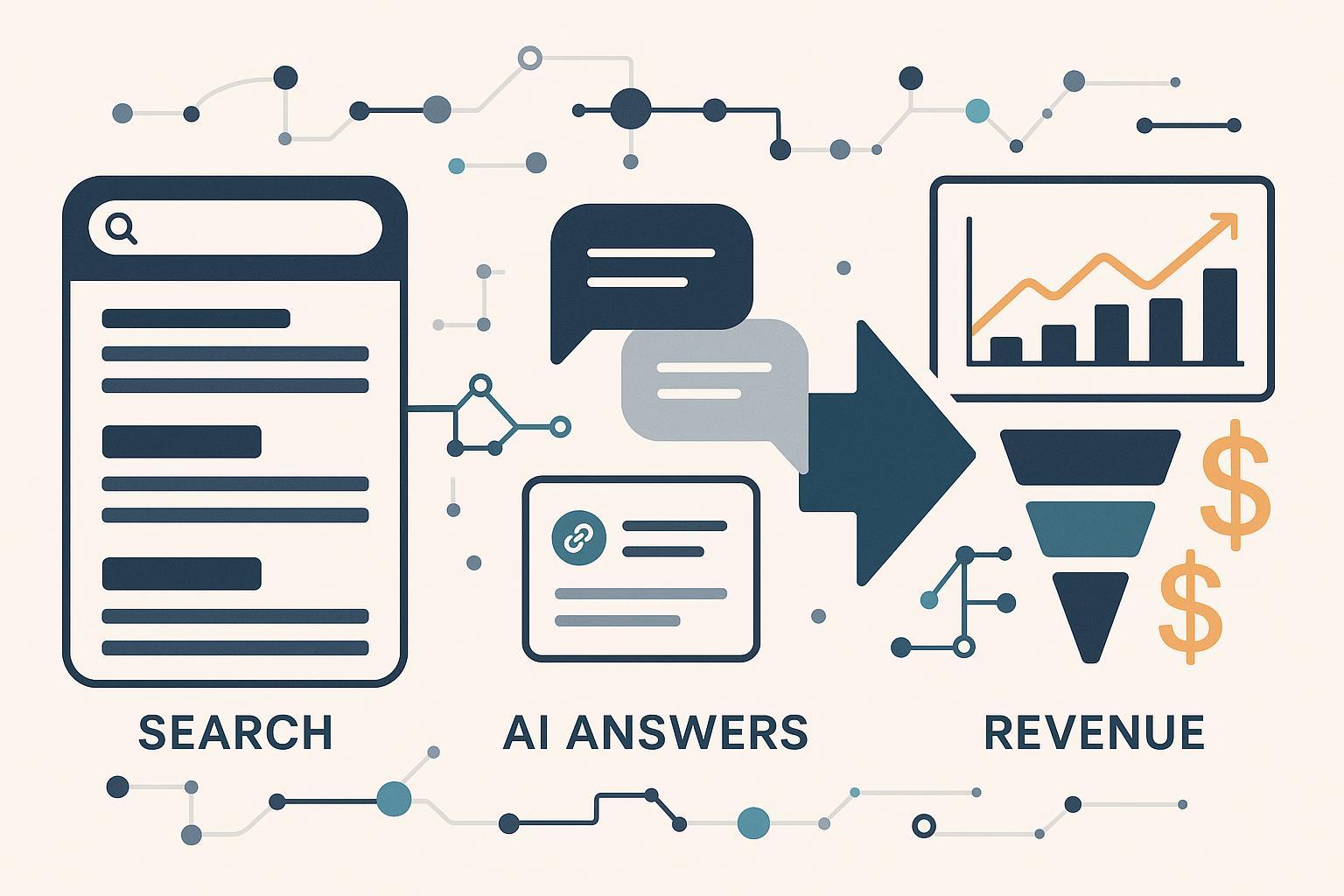

If you’re feeling the ground shift under your organic and paid programs, you’re not imagining it. AI Overviews, AI Mode, Bing Copilot answers, Perplexity, and ChatGPT Browse are resolving more intent inside their own interfaces. Clicks are decoupling from outcomes. You still need to prove revenue impact.

This guide gives you an operator-grade, evidence-backed blueprint to measure and influence revenue from zero‑click AI journeys—without hand‑waving. We’ll cover the landscape, what’s still measurable, a step‑by‑step instrumentation and modeling plan, experimentation patterns, executive reporting, and a playbook to get cited more often by AI answer engines.

1) The zero‑click reality—and why it matters

You can’t manage what you can’t measure. And increasingly, “the click” is missing. In 2024, the SparkToro/Datos panel found that about 58.5% of U.S. Google web searches ended with no click to any site, with the EU at 59.7%—more than half of queries resolving on‑SERP or via reformulations. See the breakdown in the 2024 zero‑click study by SparkToro. That’s a structural change in the data exhaust available to marketers.

Meanwhile, consumer and worker adoption of AI assistants keeps climbing. As of June 2025, Pew Research’s short read on ChatGPT usage reports that 34% of U.S. adults have used ChatGPT (roughly double 2023), with much higher penetration among under‑30s. These shifts explain why attribution models tied tightly to clickstreams are degrading.

For leaders, the implication is clear: you need a measurement architecture that connects exposure and influence from AI answer surfaces to pipeline and revenue—even when the referrer never arrives.

2) How AI Overviews and answer engines work (and cite)

Before you measure, understand the surfaces and their data footprints.

- Google AI Overviews and AI Mode

- Google documents its AI features in Search, including AI Overviews (summarized answers with links) and AI Mode (a deeper, conversational experience). AI Overviews appear “when they’re particularly helpful,” and include prominent links as jump‑off points, according to the AI features overview on Google Search Central. Google states that links in AI Overviews can drive “more clicks than if the page had appeared as a traditional web listing” in some complex-query contexts, per the Google Marketing Live 2024 announcement. Google’s AI Mode update on the Search blog describes “query fan‑out,” where a single question spawns multiple sub‑queries to gather diverse sources.

- Google’s May 2025 guidance on “succeeding in AI Search” reinforces people‑first content, citation‑worthy sources, and technical clarity; see the Search Central update Succeeding in AI Search (May 2025). You can also control snippet behavior via robots meta directives such as `nosnippet` and `max-snippet`, as documented in Google’s robots meta tag guidance.

- Ads may appear above/below and even within AI Overviews in clearly labeled sponsored sections; see the Google Ads Marketing Live 2024 post.

- Microsoft Bing Copilot answers and citations

- Microsoft explains that when Copilot responses rely on Bing search, they include references and “Learn more” links to sources used for grounding. See Copilot in Bing: our approach to responsible AI (2024). For developers, Azure AI Foundry’s Bing Grounding tool specifies that responses include citations and a link to the Bing query, which “must be retained and displayed”; see Azure AI Foundry: Bing grounding (Aug 2025).

- Perplexity and ChatGPT Browse

- Perplexity positions itself as real‑time, with inline citations and freshness controls; see its docs/changelog (evolving through 2024–2025). ChatGPT’s browsing features and Operator emphasize safety and source citations, though publisher controls (robots, referrers) are not comprehensively documented. Expect minimal consistent referrer data from these engines; plan for indirect signals.

Bottom line: Most AI answer experiences show citations, but their click‑through and referrer fidelity vary. You’ll need proxy signals and experiments to prove influence.

3) What you can still measure (and how)

You won’t get a neat “ai_overview” referrer. You can still build a defensible chain of evidence.

3.1 Collection layer: capture proxy signals and identity

- GA4 to BigQuery export

- Export event‑level data to your warehouse for modeling. GA4’s export creates daily `events_YYYYMMDD` tables with event name, timestamp, user_pseudo_id, and nested params; see GA4 BigQuery basic queries and schemas.

- Server‑side tagging

- Stand up a GTM server‑side container to improve control and identity capture (with consent). Start with the GTM server‑side manual setup guide and Ads setup notes.

- Consent and privacy

- Implement Consent Mode v2 with the four core parameters (ad_storage, analytics_storage, ad_user_data, ad_personalization) and a certified CMP where required. See GA4 help on Consent Mode v2 and AdSense policy updates for EEA/UK and Switzerland deadlines (certified CMP requirement).

- Identity resolution groundwork

- Capture first‑party personID and hashed email (with consent) as user properties or in event params, so you can stitch across visits/devices later.

- Proxy exposure signals to log

- Branded query follow‑ups: spikes in brand + product queries after content becomes cited in AI Overviews/answer engines.

- Copy/share events: when visitors land and copy snippets, share links, or save pages (valuable “researcher” behavior).

- On‑site search refinements: queries containing the same terms as the AI surface or follow‑up intent.

- Manual AO coverage logs: date‑stamped records when your page is cited in AI Overviews (sampling methodology noted below).
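For the identity groundwork in the list above, here is a minimal sketch of producing a stable hashed‑email key. The article only says "hashed email"; the choice of SHA‑256 and the trim‑and‑lowercase normalization are assumptions for illustration, and `hash_email` is a hypothetical helper name. Only call it for users who have consented.

```python
import hashlib


def hash_email(email: str) -> str:
    """Return a SHA-256 hex digest of a trimmed, lowercased email address.

    Assumption: trim + lowercase is the normalization rule; align it with
    whatever your CRM and ad platforms expect before relying on joins.
    """
    normalized = email.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# The same address typed two different ways maps to the same join key.
key_a = hash_email("  Jane.Doe@Example.com ")
key_b = hash_email("jane.doe@example.com")
print(key_a == key_b)
```

Normalizing before hashing matters because a hash of `Jane@Example.com` and a hash of `jane@example.com` would otherwise never match in a warehouse join.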

In practice: add campaign URL parameters (for example, UTM tags) to the short links in public PR/news announcements about research you want cited; you’ll capture at least some downstream traffic with campaign IDs even when the first exposure was zero‑click.
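A small sketch of tagging announcement links with campaign parameters, using only the Python standard library. The function name `add_campaign_params`, the example URL, and the specific `utm_*` values are illustrative assumptions, not a prescribed naming scheme.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_campaign_params(url: str, source: str, medium: str, campaign: str) -> str:
    """Append UTM parameters to a URL while keeping its existing query string."""
    parts = urlsplit(url)
    # Keep existing parameters, then add or overwrite the UTM keys.
    query = dict(parse_qsl(parts.query, keep_blank_values=True))
    query.update(
        {"utm_source": source, "utm_medium": medium, "utm_campaign": campaign}
    )
    return urlunsplit(parts._replace(query=urlencode(query)))


tagged = add_campaign_params(
    "https://example.com/research/report?ref=pr",  # hypothetical landing page
    source="newswire",
    medium="pr",
    campaign="zero_click_study",
)
print(tagged)
```

Generating the links in code rather than by hand keeps campaign names consistent, which makes the later warehouse joins on campaign ID less error‑prone.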

3.2 Stitching layer: join session‑less journeys

- Build a person graph

- Use first‑party personID as the backbone; link hashed email, CRM ID, and device IDs where consented.

- Time‑window stitching

- Create “assist windows” around exposure proxies (e.g., AO citation date ±14 days) and connect them to surges in branded queries, direct visits, and conversions.

- Warehouse joins

- Join GA4 events tables to CRM opportunity tables (B2B) or order/line items (eCommerce). Normalize GA4 nested fields via views. Use UTC timestamps and standardized dimensions to avoid drift.
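The time‑window stitching above can be sketched as two small helpers: one converts a GA4 `event_timestamp` (microseconds since the Unix epoch, UTC) into a UTC date, and one checks whether an event falls inside the ±14‑day assist window around an exposure proxy. The function names are hypothetical, and the 14‑day default simply mirrors the example in the text.

```python
from datetime import date, datetime, timedelta, timezone


def event_date_utc(event_timestamp_micros: int) -> date:
    """Convert a GA4 event_timestamp (microseconds, UTC) to a UTC calendar date."""
    seconds = event_timestamp_micros // 1_000_000
    return datetime.fromtimestamp(seconds, tz=timezone.utc).date()


def in_assist_window(exposure: date, event_day: date, days: int = 14) -> bool:
    """True if event_day lies within +/- `days` of the exposure date (inclusive)."""
    window = timedelta(days=days)
    return exposure - window <= event_day <= exposure + window


citation_day = date(2024, 6, 1)                  # date a citation was logged
conversion_day = event_date_utc(1_717_200_000_000_000)
print(conversion_day, in_assist_window(citation_day, conversion_day))
```

Converting everything to UTC dates before comparing avoids the off‑by‑one‑day drift that appears when the coverage log and the event export use different time zones.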

3.3 Modeling layer: from proxy to impact

- Assisted conversion modeling

- Attribute assists to exposure proxies. Example: weight conversions preceded by branded search surges within your assist window.

- Incrementality testing

- Run geo/time holdouts when new AI Overview coverage appears: compare regions/periods with and without coverage. Use switchback designs (alternating covered and uncovered periods) when feasible to reduce bias. This mirrors the holdout logic behind ad‑platform lift studies and academic causal‑inference work.

- MMM with AI coverage as regressors

- Treat “AI answer coverage index” and “citation count” as external regressors in MMM. Tooling like Meta’s Robyn on GitHub and Google’s LightweightMMM supports adstock/saturation and external features. Validate MMM signals against holdouts before reallocating budget.
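One common way to read a geo/time holdout like the one described above is a difference‑in‑differences estimate. The article does not prescribe a specific estimator, so treat this as an assumed, simplified choice; the function name and the sample numbers are invented for illustration.

```python
from statistics import mean
from typing import Sequence


def diff_in_diff(
    treated_pre: Sequence[float],
    treated_post: Sequence[float],
    control_pre: Sequence[float],
    control_post: Sequence[float],
) -> float:
    """Change in the covered group minus change in the uncovered group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Illustrative weekly branded-query volumes (made-up numbers).
lift = diff_in_diff(
    treated_pre=[100, 110],   # regions with new AI coverage, before
    treated_post=[130, 140],  # same regions, after
    control_pre=[90, 100],    # regions without coverage, before
    control_post=[95, 105],   # same regions, after
)
print(lift)
```

The estimate only holds if both groups would have trended in parallel without the coverage change, so check pre‑period trends before quoting the number.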

3.4 Reporting and decisioning

- KPIs to track

- Assisted revenue from AI surfaces, lift over baseline (geo/time), blended CAC/ROAS, coverage share in AI Overviews/answer engines, citation velocity, entity health.

- Dashboards

- A funnel storyboard: AI surfaces → branded queries → site visits → pipeline/revenue, with experiment overlays and MMM recommended allocations.

4) The blueprint: a step‑by‑step implementation

Use this sequence to stand up measurement in 4–8 weeks.

Step 1 — Instrumentation checklist (do this first)

- Governance and consent

- Implement Consent Mode v2, verify regional defaults, and integrate a certified CMP in EEA/UK/CH per AdSense CMP requirement.

- Identity and tagging

- Deploy GTM server‑side. Capture personID and hashed email (with consent) on key events (signup, newsletter, checkout). Configure GA4 user properties for IDs.

- Data export

- Enable GA4 → BigQuery export (daily). Validate `events_YYYYMMDD` table creation and partitioning.

- Proxy signals

- Add on‑site search logging, copy/share events, and landing page intent fields (e.g., content_type, entity_topic).

- Coverage logging

- Stand up a lightweight “coverage log” table. Start a weekly sampling workflow to record when your pages appear in AI Overviews/Bing Copilot/Perplexity/ChatGPT answers, with query, date, and screenshot links.
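The coverage log in the checklist above could start as a record like the following, with a simple coverage‑share calculation on top. The field names loosely follow the `ai_coverage_log` idea, but the `cited` flag, the class name, and the definition of coverage share (cited samples divided by all sampled queries per engine) are assumptions; the article does not define the metric precisely.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable


@dataclass(frozen=True)
class CoverageObservation:
    observed_on: date
    engine: str          # e.g. "google_aio", "bing_copilot", "perplexity"
    query: str
    cited: bool          # was one of our pages cited for this sampled query?
    url_cited: str = ""
    screenshot_url: str = ""


def coverage_share(observations: Iterable[CoverageObservation]) -> Dict[str, float]:
    """Per engine: fraction of sampled queries where our page was cited."""
    rows = list(observations)
    sampled = Counter(o.engine for o in rows)
    cited = Counter(o.engine for o in rows if o.cited)
    return {engine: cited[engine] / total for engine, total in sampled.items()}


sample = [
    CoverageObservation(date(2025, 6, 2), "google_aio", "zero click attribution", True,
                        "https://example.com/guide"),
    CoverageObservation(date(2025, 6, 2), "google_aio", "ai overview tracking", False),
    CoverageObservation(date(2025, 6, 2), "perplexity", "zero click attribution", True,
                        "https://example.com/guide"),
]
print(coverage_share(sample))
```

Logging the misses as well as the hits is what makes a share computable; a log that only records citations has no denominator.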

Step 2 — Warehouse schema essentials

Recommended core tables (conceptual):

- ga4_events (unioned from events_*)

- person_identity (personID, hashed_email, crm_id, device_ids)

- branded_queries (date, query_text, impressions, clicks)

- ai_coverage_log (date, engine, query, url_cited, position, screenshot_url)

- crm_opportunities or ecommerce_orders (with line items)

- assists (derived: personID, assist_type, exposure_date, window_start, window_end)

Key derived views:

- sessions_unrolled (optional): sessionization for analysis convenience

- branded_lift_by_region: baseline vs change aligned to coverage events

- assisted_conversions: attribution weights based on exposure within windows

Step 3 — Modeling patterns (conceptual SQL/pseudocode)

- Assisted conversions (windowed):

```sql
-- Pseudocode: assisted conversions inside AI exposure windows.
-- Assumes ga4_events already carries personID and value_usd.
WITH exposure AS (
  -- Earliest logged citation date, per person and engine, for any cited URL
  -- on which that person has an event.
  SELECT e.personID, acl.engine, MIN(acl.date) AS first_exposure
  FROM ai_coverage_log AS acl
  JOIN ga4_events AS e
    ON e.page_location = acl.url_cited
  WHERE e.user_pseudo_id IS NOT NULL
  GROUP BY e.personID, acl.engine
),
assist_windows AS (
  -- 14 days either side of the first exposure.
  SELECT
    personID,
    engine,
    DATE_SUB(first_exposure, INTERVAL 14 DAY) AS win_start,
    DATE_ADD(first_exposure, INTERVAL 14 DAY) AS win_end
  FROM exposure
),
conv AS (
  SELECT personID, event_timestamp, value_usd
  FROM ga4_events
  WHERE event_name IN ('purchase', 'generate_lead')
)
SELECT
  w.engine,
  COUNT(DISTINCT c.personID) AS assisted_buyers,
  SUM(c.value_usd) AS assisted_revenue
FROM conv AS c
JOIN assist_windows AS w
  ON c.personID = w.personID
  AND DATE(TIMESTAMP_MICROS(c.event_timestamp)) BETWEEN w.win_start AND w.win_end
GROUP BY w.engine;
```

Notes: tailor joins to your identity model; some orgs will associate personID post‑hoc via CRM email matches. A conversion that falls inside windows for several engines is counted once per engine, so do not sum assisted revenue across engines.

- MMM setup (conceptual): Include weekly indices like `ai_coverage_index_google`, `citation_count_bing`, and `branded_query_volume` as external regressors alongside media spend and seasonality. Use Robyn/LightweightMMM defaults for adstock and saturation, then validate model‑suggested lift with holdouts.
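To make the adstock idea concrete, here is a minimal geometric adstock transform applied to a weekly series. This is a generic textbook form, not the Robyn or LightweightMMM API (both ship their own implementations and parameterizations); the function name, the decay value, and the sample series are assumptions.

```python
from typing import List, Sequence


def geometric_adstock(series: Sequence[float], decay: float) -> List[float]:
    """Carry a fraction `decay` of each period's effect into the next period."""
    if not 0 <= decay < 1:
        raise ValueError("decay must be in [0, 1)")
    carried = 0.0
    out: List[float] = []
    for value in series:
        carried = value + decay * carried
        out.append(carried)
    return out


# A single burst of spend (or coverage) whose effect fades week over week.
print(geometric_adstock([10, 0, 0], decay=0.5))  # [10.0, 5.0, 2.5]
```

Whether a coverage index should be adstocked at all is a modeling judgment; spend series usually carry over, while an exposure index may already be a level measure.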

Step 4 — Dashboards for operators and execs

- Operator view

- Coverage share by engine, citation velocity, entity health, branded query lift, assisted revenue by engine and market.

- Executive view

- 28/90‑day lift vs baseline, assisted revenue trend, MMM allocation recommendations including “AI surfaces,” and cost efficiency (blended CAC/ROAS).

5) Getting cited: content and entity playbook

Your best lever is being the source AI engines want to cite.

- Google: entities, E‑E‑A‑T, structured data

- Align with helpful content and spam policies (Mar 2024 and Aug 2024 updates) that penalize scaled/low‑value pages and reward people‑first content; see March 2024 core update and spam policies and [August 2024 core update note](https://developers.google