As someone who has shipped real-time personalization in both eCommerce and enterprise SaaS over the last few years, I’ve learned that success is less about picking a flashy tool and more about rigor: clear SLOs, clean data contracts, and ruthless respect for destination limits. This playbook distills what consistently works in 2025 when you build on warehouse-native or reverse ETL stacks.

1) Start with a latency budget and a definition of “real-time”

“Real-time” means different things by channel and destination:

- Sub-second in-app recommendations or price/offer rendering (front-end + API) typically require streaming paths.

- 1–5 second latency for operational triggers (e.g., cart-abandonment webhooks to a journey tool) is achievable with streaming ingestion into the warehouse and lightweight processing.

- 2–5 minute latency is typical for SaaS destinations that batch or rate-limit writes (many marketing tools); these often require reverse ETL with incremental syncs.

Ground your SLOs in documented platform capabilities:

- For ingestion into your warehouse, modern streaming can target single-digit seconds. Snowflake documents typical Snowpipe Streaming ingestion latency of about 1 second for standard tables and a default of roughly 30 seconds for Iceberg tables, which supports near-real-time activation (Snowflake Snowpipe Streaming docs, 2025; Snowflake blog on streaming pipelines, 2024).

- For stream processing, delivery guarantees carry real trade-offs: Confluent notes that at-least-once delivery can stay under 100 ms end-to-end in tuned scenarios, while exactly-once can add significant overhead (tens of seconds to about a minute) (Confluent latency comparison, 2024; Confluent delivery guarantees, 2025).

- Some destinations are simply not real-time: public documentation shows multi-hour or even daily update cadences for some of them. Segment documents P95 delivery guidance and partner-specific delays (e.g., several hours for some ad/analytics destinations) (Segment Event Delivery docs, 2025; Segment destination latency examples, 2025).

Write your latency budget per use case (e.g., “Homepage hero recommendation: <200 ms inference, <100 ms data fetch; marketing segment sync: <10 minutes end-to-end”). Everything else flows from this.
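Writing the budget down as data makes it testable. The sketch below is a minimal illustration, not a prescribed format: the `LatencyBudget` class, use-case names, and stage labels are hypothetical and simply mirror the examples above, and the measured latencies would come from your own tracing.

```python
from dataclasses import dataclass, field


@dataclass
class LatencyBudget:
    """Hypothetical per-use-case latency budget, split into named stages (milliseconds)."""

    use_case: str
    stage_budgets_ms: dict[str, float] = field(default_factory=dict)

    @property
    def total_ms(self) -> float:
        # The end-to-end budget is the sum of its stage budgets.
        return sum(self.stage_budgets_ms.values())

    def violations(self, measured_ms: dict[str, float]) -> list[str]:
        """Return the stages whose measured latency exceeds their budget.

        Stages with no measurement are treated as within budget here.
        """
        return [
            stage
            for stage, budget in self.stage_budgets_ms.items()
            if measured_ms.get(stage, 0.0) > budget
        ]


# Budgets mirroring the examples in the text.
hero_reco = LatencyBudget(
    "homepage_hero_recommendation", {"inference": 200.0, "data_fetch": 100.0}
)
segment_sync = LatencyBudget(
    "marketing_segment_sync", {"end_to_end": 10 * 60 * 1000.0}  # 10 minutes
)

print(hero_reco.total_ms)  # 300.0
print(hero_reco.violations({"inference": 240.0, "data_fetch": 80.0}))  # ['inference']
```

Splitting the budget by stage, rather than tracking only the total, shows which hop consumed the time when an SLO is missed.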

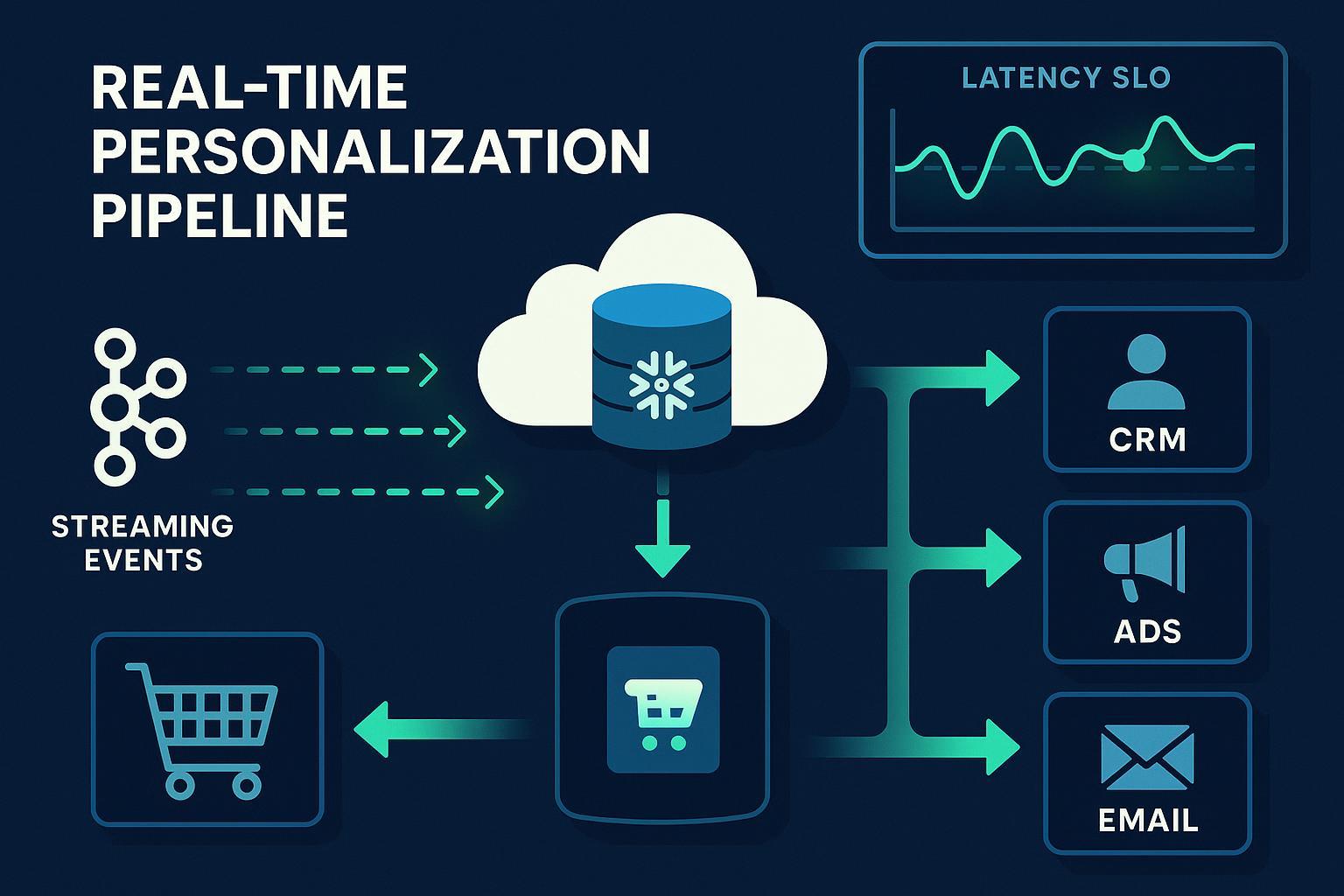

2) Choose the right activation path: warehouse-native, reverse ETL, or streaming

Here’s the decision framework I use:

- If the experience must render in-app in under 300 ms, use event streaming + online features and serve via an API. Keep the warehouse in the loop for governance and offline parity, but don’t block on it.

- If the destination expects profile-level updates and allows near-real-time writes, reverse ETL from a warehouse dynamic table or stream-backed view is ideal.

- If the destination is intrinsically batchy, accept reverse ETL on minute-to-hour cadences and design your journeys accordingly.
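The framework is simple enough to encode directly, which helps keep path choices consistent across teams. This is a hedged sketch: the enum values and parameter names are hypothetical, and it treats an in-app budget of 300 ms or less as streaming territory, following the cutoff above.

```python
from enum import Enum


class ActivationPath(Enum):
    STREAMING_API = "event streaming + online features, served via an API"
    REVERSE_ETL_NEAR_REAL_TIME = "reverse ETL from a dynamic table or stream-backed view"
    REVERSE_ETL_BATCH = "reverse ETL on a minute-to-hour cadence"


IN_APP_THRESHOLD_MS = 300  # cutoff from the decision framework in the text


def choose_activation_path(
    renders_in_app: bool,
    latency_budget_ms: float,
    destination_allows_near_real_time_writes: bool,
) -> ActivationPath:
    """Hypothetical encoding of the three-way decision framework."""
    if renders_in_app and latency_budget_ms <= IN_APP_THRESHOLD_MS:
        return ActivationPath.STREAMING_API
    if destination_allows_near_real_time_writes:
        return ActivationPath.REVERSE_ETL_NEAR_REAL_TIME
    # Intrinsically batchy destination: accept slower syncs and design journeys around them.
    return ActivationPath.REVERSE_ETL_BATCH


print(choose_activation_path(True, 200, False).name)  # STREAMING_API
print(choose_activation_path(False, 600_000, True).name)  # REVERSE_ETL_NEAR_REAL_TIME
```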

References worth reading: vendors shipping these capabilities cover warehouse-native activation via “zero-copy” or composable CDP patterns, as well as real-time reverse ETL with Kafka and dynamic tables (Hightouch zero-copy CDP explainer, 2023; Census + Confluent real-time reverse ETL, 2024; Google Cloud on reverse ETL patterns, 2024).

Trade-offs to acknowledge:

- Warehouse-native activation centralizes governance and consistency, but your latency is bounded by ingestion and compute. Great for consistent segmentation across tools.

- Reverse ETL shines for operationalizing insights in CRMs/MAPs, but you inherit API rate limits, retries, and idempotency headaches.

- Streaming paths minimize latency but add operational complexity (schema evolution, DLQs, backpressure).

3) Get the data foundation right: ingestion, modeling, identity, consent

What consistently pays off:

- Ingestion and contracts

  - Use CDC on operational stores (e.g., Debezium) to capture state changes with minimal lag; publish to Kafka/Confluent with schemas in Schema Registry and idempotent writes.

  - For warehouses, enable streaming ingestion with change tracking on event tables; Snowflake’s CHANGE_TRACKING plus serverless Alerts can drive low-latency actions without polling (Snowflake Alerts docs, 2025; Snowflake quickstart: Insights to action, 2024).

- Modeling with dbt

  - Layered models: staging → intermediate → marts. Use incremental materializations, source freshness checks, tests (unique, not_null), and docs. This keeps your activation tables reliable (dbt guidance for modern integration teams, 2025).

- Identity resolution

  - Build a deterministic spine (customer_id, email, phone) and use probabilistic stitching only as a complement. Maintain a real-time identity graph that carries consent attributes. See identity guidance and cross-channel considerations in IAB materials and practitioner write-ups (IAB Tech Lab identity guidance, 2023/2024; Twilio Segment identity best practices, 2024).

- Consent capture and enforcement

  - Integrate a TCF v2.2-compliant CMP and enforce consent at both collection and activation. TCF v2.2 tightened rules on legal bases and transparency, which affects personalization flows (IAB Europe TCF v2.2 policies, 2024; IAB Europe explainer, 2024).
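A deterministic spine can be sketched as connected components over shared identifiers: each record links to its normalized `customer_id`, `email`, and `phone` values, and records that share any value land in one cluster. The union-find below is a minimal, in-memory illustration with hypothetical record shapes; it deliberately leaves out probabilistic stitching, consent attributes, and incremental updates.

```python
DETERMINISTIC_KEYS = ("customer_id", "email", "phone")


class UnionFind:
    """Disjoint-set forest with path compression."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        root = x
        while self.parent[root] != root:
            root = self.parent[root]
        # Path compression: point every node on the path straight at the root.
        while x != root:
            nxt = self.parent[x]
            self.parent[x] = root
            x = nxt
        return root

    def union(self, a: str, b: str) -> None:
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra


def resolve_identities(records: list[dict[str, str]]) -> list[list[int]]:
    """Group record indices that share any deterministic key."""
    uf = UnionFind()
    for i, record in enumerate(records):
        node = f"record:{i}"
        uf.find(node)  # register records that carry no keys at all
        for key in DETERMINISTIC_KEYS:
            value = record.get(key)
            if value:
                # Normalize, and namespace by key so an email never collides with a phone.
                uf.union(node, f"{key}:{value.strip().lower()}")
    clusters: dict[str, list[int]] = {}
    for i in range(len(records)):
        clusters.setdefault(uf.find(f"record:{i}"), []).append(i)
    return sorted(clusters.values())


records = [
    {"customer_id": "c1", "email": "Ana@Example.com"},
    {"email": "ana@example.com", "phone": "+15550100"},
    {"phone": "+15550100"},
    {"email": "bo@example.com"},
]
print(resolve_identities(records))  # [[0, 1, 2], [3]]
```

Probabilistic links would typically live alongside this as separate, confidence-scored edges rather than being merged into the deterministic structure.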

Implementation checklist: CDC enabled; schemas registered; Snowpipe Streaming on; CHANGE_TRACKING true; dbt tests green; identity graph materialized and updated continuously; consent stored with timestamps, purpose, vendor IDs.
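Storing consent with timestamps, purposes, and vendor IDs pays off when activation can check it in one call. A minimal sketch, assuming a hypothetical `ConsentRecord` shape, a hypothetical vendor ID, and an illustrative 365-day expiry; purpose IDs 5 and 6 are used as stand-ins for the profile-based content personalization purposes in the TCF v2.2 purpose list, so confirm the mapping against the current policy before relying on it.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical consent row: subject, granted purposes and vendors, capture time."""

    subject_id: str
    purposes: frozenset[int]
    vendor_ids: frozenset[int]
    captured_at: datetime


# Illustrative expiry window; set this from your own policy and legal guidance.
CONSENT_TTL = timedelta(days=365)


def may_activate(
    record: Optional[ConsentRecord],
    required_purposes: set[int],
    vendor_id: int,
    now: datetime,
) -> bool:
    """Allow activation only if consent exists, is unexpired, and covers purposes and vendor."""
    if record is None:
        return False  # missing consent blocks activation
    if now - record.captured_at > CONSENT_TTL:
        return False  # expired consent blocks activation
    return required_purposes <= record.purposes and vendor_id in record.vendor_ids


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
consent = ConsentRecord(
    subject_id="u1",
    purposes=frozenset({1, 5, 6}),
    vendor_ids=frozenset({42}),  # hypothetical vendor ID
    captured_at=datetime(2025, 1, 15, tzinfo=timezone.utc),
)
print(may_activate(consent, {5, 6}, 42, now))  # True
print(may_activate(consent, {3, 4}, 42, now))  # False
```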

4) Orchestrate with events, not cron

Move beyond scheduled jobs for anything “real-time-ish.” Patterns that hold up:

- Event-driven choreography: decouple producers and consumers via Kafka topics; enrich with Kafka Streams/ksqlDB; use dead-letter queues and backoff on failure. AWS and Confluent document these patterns well (AWS EventBridge patterns and best practices, 2024; Confluent delivery guarantees, 2025).

- Warehouse-native triggers: Snowflake serverless Alerts reacting to CHANGE_TRACKING or views allow you to trigger webhooks/workflows with minimal polling overhead (Snowflake Alerts docs, 2025; Snowflake quickstart: alert on events, 2024).

- Guardrails: implement idempotency keys, exactly-once where required, and compensating actions for destinations that don’t support transactional updates.
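These guardrails can be combined in a small delivery wrapper: derive a stable idempotency key from the event, retry transient failures with exponential backoff and full jitter, and park events that exhaust their retries in a dead-letter queue. The `send` callable and `TransientError` type are hypothetical stand-ins for your destination client, the in-memory DLQ list is for illustration only, and the sketch assumes the destination deduplicates on the key it receives.

```python
import hashlib
import json
import random
import time
from typing import Callable


class TransientError(Exception):
    """Hypothetical: raised by the destination client for retryable failures (e.g. HTTP 429/503)."""


def idempotency_key(event: dict) -> str:
    """Same logical event -> same key, so destination-side dedupe works on retries and replays."""
    canonical = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def deliver(
    event: dict,
    send: Callable[[dict, str], None],
    dead_letters: list,
    max_attempts: int = 5,
    base_delay_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Send with retries; non-transient exceptions propagate to the caller unchanged."""
    key = idempotency_key(event)
    for attempt in range(max_attempts):
        try:
            send(event, key)
            return True
        except TransientError:
            if attempt == max_attempts - 1:
                break
            # Exponential backoff with full jitter: wait U(0, base * 2**attempt) seconds.
            sleep(random.uniform(0, base_delay_s * 2**attempt))
    # Retries exhausted: park the event (with its key) for inspection and replay.
    dead_letters.append({"key": key, "event": event})
    return False
```

Because the key is derived from the event's content, a replay from the DLQ sends the same key again, which is what lets upsert-and-dedupe destinations absorb duplicates. If two distinct events can share an identical payload, include a unique event ID in the payload before hashing.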

5) Choose your ML serving path deliberately

You’ll rarely want one size fits all. Use this split:

- In-warehouse ML for batch/near-real-time: Compute features and train with Snowpark ML or BigQuery ML; materialize “next best action” tables for activation. These approaches benefit from warehouse governance and reproducibility (Snowflake Feature Store overview, 2025; Google BigQuery ML docs, 2025).

- Online serving for in-app sub-300 ms: Maintain an online feature store or cached representation with strong online/offline parity. Feast/Tecton-like practices apply: versioned features, freshness SLAs, and parity tests. Keep inference close to the app/API.

- MLOps basics you cannot skip: CI/CD for models, data validation gates, drift monitoring, and rollback plans. Use simple guardrails and human review for high-impact decisions to align with 2025 AI governance expectations.
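A parity test can be as simple as comparing the features the online store served at request time with the values recomputed offline for the same entity and point in time. The sketch assumes both sides have already been fetched into plain dictionaries keyed by hypothetical feature names; the tolerances are illustrative.

```python
import math


def parity_mismatches(
    online: dict[str, float],
    offline: dict[str, float],
    rel_tol: float = 1e-6,
    abs_tol: float = 1e-9,
) -> dict[str, tuple]:
    """Features whose online and offline values disagree or exist on only one side."""
    mismatches: dict[str, tuple] = {}
    for name in sorted(online.keys() | offline.keys()):
        a, b = online.get(name), offline.get(name)
        if a is None or b is None:
            mismatches[name] = (a, b)  # a feature missing on either side is a parity failure
        elif not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            mismatches[name] = (a, b)
    return mismatches


def parity_rate(samples: list[tuple[dict, dict]]) -> float:
    """Share of sampled (online, offline) pairs whose feature vectors fully match."""
    if not samples:
        return 1.0
    matches = sum(1 for online, offline in samples if not parity_mismatches(online, offline))
    return matches / len(samples)


online = {"orders_30d": 3.0, "avg_basket": 41.5}
offline = {"orders_30d": 3.0, "avg_basket": 42.0, "days_since_visit": 2.0}
print(parity_mismatches(online, offline))
# {'avg_basket': (41.5, 42.0), 'days_since_visit': (None, 2.0)}
```

Gating a model or feature release on `parity_rate` staying above an agreed threshold turns parity testing into an enforceable CI check.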

6) Keep omnichannel state consistent

Two rules make front-line teams happy and customers trust you:

- One identity, shared everywhere: maintain the identity graph as the single source of truth across web, app, email, ads, and support. Apply suppression logic to avoid duplicate or conflicting touches. Vendors and standards bodies highlight the need for cross-channel identity and consent synchronization (IAB Tech Lab identity guidance, 2023/2024).

- One offer logic, inventory-aware: for eCommerce, bind offers to inventory and logistics states to avoid recommending out-of-stock variants; for SaaS, bind upsell prompts to entitlement and usage. Salesforce documents omnichannel routing and inventory concepts you can mirror even if you’re not a Salesforce shop (Salesforce Omnichannel enablement, 2025; Salesforce B2C Omnichannel Inventory integration, 2025).
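Both rules can be enforced at the last step before a touch goes out. A minimal sketch, assuming hypothetical in-memory views: `available_units` per SKU from the inventory system and `last_touch` per resolved customer across all channels; the 24-hour gap and three-offer cap are illustrative.

```python
from datetime import datetime, timedelta


def eligible_offers(
    customer_id: str,
    candidate_skus: list[str],
    available_units: dict[str, int],
    last_touch: dict[str, datetime],
    now: datetime,
    min_gap: timedelta = timedelta(hours=24),
    max_offers: int = 3,
) -> list[str]:
    """Suppress recently touched customers, then drop out-of-stock SKUs."""
    previous = last_touch.get(customer_id)
    if previous is not None and now - previous < min_gap:
        return []  # another channel touched this customer recently
    in_stock = [sku for sku in candidate_skus if available_units.get(sku, 0) > 0]
    return in_stock[:max_offers]


now = datetime(2025, 6, 1, 12, 0)
units = {"A": 0, "B": 5, "C": 2, "D": 1}
print(eligible_offers("u1", ["A", "B", "C", "D"], units, {}, now))  # ['B', 'C', 'D']
recent = {"u1": now - timedelta(hours=2)}
print(eligible_offers("u1", ["A", "B", "C", "D"], units, recent, now))  # []
```

For SaaS, the same shape works with entitlement and usage checks in place of the inventory lookup.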

7) Privacy and AI governance you must operationalize in 2025

What changed or got teeth lately:

- Right to Erasure enforcement: European data protection authorities launched a coordinated enforcement action on the right to erasure (RTBF) in 2025, so your deletion and suppression pipelines must be lineage-aware and verifiable (EDPB coordinated enforcement on RTBF, 2025; EDPB Annual Report, 2024/2025).

- Cross-border transfers: the EU–U.S. Data Privacy Framework (adopted 2023) remains subject to periodic review; implement SCCs and supplementary measures where appropriate, and maintain transfer impact assessments (TIAs) (EDPB first review of EU–U.S. DPF, 2024; IAPP Schrems II explainer, 2020 with ongoing analysis).

- Consent and advertising: IAB TCF v2.2 tightened requirements, including removal of legitimate interest for certain personalization contexts; ensure your CMP emits and listens for TC string changes and that activation honors purpose-based gating (IAB Europe TCF v2.2 policies, 2024; IAB Tech Lab TCF overview, 2024).

- AI accountability: Anticipate EU AI Act obligations around risk assessments, transparency, bias mitigation, and human oversight for high-impact recommender systems. Even ahead of formal enforcement, adopt governance that documents model purpose, data lineage, monitoring, and sign-offs.

Operationalize with:

- A TCF v2.2-compliant CMP integrated with SDKs and server-side collectors

- Consent-aware schemas and activation filters

- Automated RTBF workflows that traverse warehouse, lake, feature store, and downstream tools (with deletion acknowledgments recorded)

- DPIAs for new personalization patterns and a defined human-in-the-loop review for high-risk journeys
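An automated RTBF workflow is largely bookkeeping: fan the request out to every system that may hold the subject's data and record an acknowledgment or failure per system, so completion is verifiable and re-runs are safe. The sketch assumes hypothetical per-system delete callables; real warehouse, lake, feature-store, and SaaS deletions each have their own APIs and propagation delays.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class ErasureRequest:
    """Hypothetical RTBF request tracking one acknowledgment per downstream system."""

    subject_id: str
    systems: tuple[str, ...]
    acks: dict[str, str] = field(default_factory=dict)  # system -> UTC ISO timestamp
    failures: dict[str, str] = field(default_factory=dict)  # system -> last error

    @property
    def complete(self) -> bool:
        return all(system in self.acks for system in self.systems)


def run_erasure(
    request: ErasureRequest, deleters: dict[str, Callable[[str], None]]
) -> ErasureRequest:
    """Call each pending system's deleter, recording an acknowledgment or a failure."""
    for system in request.systems:
        if system in request.acks:
            continue  # already acknowledged, so re-running the workflow is safe
        try:
            deleters[system](request.subject_id)
        except Exception as exc:  # record and continue; failed systems retry on the next run
            request.failures[system] = repr(exc)
        else:
            request.acks[system] = datetime.now(timezone.utc).isoformat()
            request.failures.pop(system, None)
    return request
```

Running this on a schedule until `complete` is true, and keeping the `acks` map as the audit record, covers the "deletion acknowledgments recorded" requirement.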

8) Monitoring, SLOs, and cost controls

Make freshness and delivery visible:

- Ingestion SLOs: alert if Snowpipe Streaming freshness exceeds 5 s on key tables; dashboard observed lag against the configured MAX_CLIENT_LAG, along with queue metrics (Snowflake Snowpipe Streaming docs, 2025).

- Stream processing: track end-to-end P95 and P99, DLQ volume, and retry counts; choose delivery guarantees intentionally based on business impact (Confluent latency comparison, 2024; Confluent delivery guarantees, 2025).

- Destination delivery: monitor partner-specific latencies and error codes. Segment’s Event Delivery docs provide a reference for thinking about P95 targets and known delays (Segment Event Delivery docs, 2025).
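Percentile tracking needs nothing more than end-to-end latency samples (event time to delivery time). The sketch uses the nearest-rank definition of a percentile; the 5-second freshness threshold mirrors the ingestion SLO above, and the function names are illustrative.

```python
import math


def percentile(samples_ms: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples_ms:
        raise ValueError("no latency samples")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def slo_report(
    latencies_ms: list[float], freshness_lag_s: float, freshness_slo_s: float = 5.0
) -> dict:
    """Summarize end-to-end latency percentiles and flag a freshness SLO breach."""
    return {
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "freshness_breach": freshness_lag_s > freshness_slo_s,
    }


print(slo_report([float(x) for x in range(1, 101)], freshness_lag_s=7.0))
# {'p95_ms': 95.0, 'p99_ms': 99.0, 'freshness_breach': True}
```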

Money leaks to avoid:

- Compute bloat: use incremental materializations, micro-batches for non-critical paths, and scale-to-zero when idle.

- API/egress: coalesce updates, prefer bulk endpoints, and apply adaptive throttling with exponential backoff.

- Over-activation: send only what a destination needs; suppress unchanged attributes; use change data capture for diffs.
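Suppressing unchanged attributes and coalescing updates can be done by diffing each profile against the last payload you successfully synced and sending only the changed fields, chunked for a bulk endpoint. A minimal sketch, assuming hypothetical in-memory snapshots, a hypothetical `{"id": ..., ...}` payload shape, and an illustrative batch size of 100; real destinations publish their own bulk limits.

```python
def changed_attributes(previous: dict, current: dict) -> dict:
    """Attributes that are new or whose value differs from the last successful sync.

    Removed attributes are ignored here; clearing fields needs destination-specific handling.
    """
    return {k: v for k, v in current.items() if k not in previous or previous[k] != v}


def build_batches(
    last_synced: dict[str, dict],
    current: dict[str, dict],
    batch_size: int = 100,
) -> list[list[dict]]:
    """Diff each profile against its last synced state and chunk changes for a bulk endpoint.

    Diffing the latest snapshot (rather than replaying every event) coalesces
    intermediate updates into one write per profile.
    """
    updates = []
    for profile_id, attrs in current.items():
        diff = changed_attributes(last_synced.get(profile_id, {}), attrs)
        if diff:  # unchanged profiles are suppressed entirely
            updates.append({"id": profile_id, **diff})
    return [updates[i : i + batch_size] for i in range(0, len(updates), batch_size)]


last = {"u1": {"plan": "pro", "score": 0.8}, "u2": {"plan": "free"}}
cur = {"u1": {"plan": "pro", "score": 0.9}, "u2": {"plan": "free"}, "u3": {"plan": "team"}}
print(build_batches(last, cur))
# [[{'id': 'u1', 'score': 0.9}, {'id': 'u3', 'plan': 'team'}]]
```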

9) Tooling choice: matching needs to platforms

You can ship this with multiple combinations. Decision cues:

- Need sub-second streams into operational tools? Look for platforms that support event streaming or live syncs backed by Kafka/Snowflake dynamic tables (see Census’ write-up on “real-time reverse ETL” and Hightouch’s event streaming intros: Census real-time reverse ETL, 2024; Hightouch event streaming, 2024).

- Prefer warehouse governance and zero-copy? Review warehouse-native CDP patterns where activation reads directly from governed tables (Hightouch zero-copy CDP explainer, 2023).

- Hybrid/on-prem needs? Evaluate platforms with hybrid deployment options and governance toolkits (RudderStack outlines hybrid patterns: RudderStack data integration architecture, 2024).

- If you’re already deep on a CDP: consider built-in reverse ETL and identity features; Twilio documents its approach to reverse ETL and identity practices (Twilio/Segment reverse ETL primer, 2024).

Remember that costs and SLAs vary and many specifics are not public; validate with pilots and load tests.

10) Pitfalls I keep seeing (and fast fixes)

- Sync lag and drift: define freshness/error budgets per destination; implement DLQs; set replay procedures; verify idempotency at the destination by using upsert semantics and dedupe keys.

- Schema evolution breakages: use schema registry, contract testing, and versioned topics/tables; require backward-compatible changes and dbt tests at PR time.

- Identity fragmentation: enforce deterministic keys where possible; maintain confidence scores for probabilistic merges; add suppression for low-confidence links.

- API rate limits: batch and coalesce; adopt adaptive throttling; prefer bulk endpoints; if a destination lacks idempotency, build compensating transactions.

- Model drift and bias: track feature/label drift; set thresholds for auto-retraining; apply shadow deployments and rollback switches; involve humans for high-impact decisions.

- Compliance surprises: test RTBF end-to-end quarterly; log deletion acknowledgments; block activation when consent is missing or expired.

- Cost blowouts: right-size compute, cache aggressively for in-app decisions, and move cold attributes to slower paths.
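For drift thresholds, one widely used statistic is the population stability index (PSI) between a baseline and a recent sample of a feature or model score. The sketch bins values with caller-supplied edges; the 0.1 and 0.25 cutoffs are common rules of thumb rather than standards, and the function names are illustrative. A "retrain" result would feed whatever retraining or shadow-deployment process you already run.

```python
import bisect
import math


def psi(baseline: list[float], recent: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population stability index: sum over bins of (a - e) * ln(a / e)."""

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)  # len(edges) sorted cut points -> len(edges) + 1 bins
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


def drift_action(score: float, warn: float = 0.1, retrain: float = 0.25) -> str:
    """Map PSI to an action using common rule-of-thumb cutoffs; tune per feature."""
    if score >= retrain:
        return "retrain"
    if score >= warn:
        return "investigate"
    return "ok"


baseline = [1.0, 2.0, 3.0, 4.0] * 25
recent = [4.0] * 100
edges = [1.5, 2.5, 3.5]
print(drift_action(psi(baseline, recent, edges)))  # retrain
```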

11) A phased blueprint that actually ships

Phase 0: Define use cases and SLOs

- Document channels, destinations, and latency budgets. Agree on what “real-time” means per journey.

Phase 1: Lay the data and consent rails

- Enable CDC + Kafka; set up Snowpipe Streaming; set CHANGE_TRACKING = TRUE on event tables; adopt a TCF v2.2-compliant CMP; model core entities and events in dbt with tests.

Phase 2: Prove value with one high-visibility journey

- Example: product detail page (PDP) recommendations with sub-300 ms inference for eCommerce, or a SaaS expansion prompt tied to usage milestones.

- Build online features; validate identity, consent gating, and observability; run an A/B test.

Phase 3: Activate across destinations

- Add reverse ETL to CRM/MAP with incremental diffs; enforce idempotent upserts; monitor destination-specific P95.

Phase 4: Industrialize

- Integrate Snowflake Alerts for low-latency workflows; deploy drift monitoring; formalize DPIAs; add cost guardrails; write disaster recovery and replay playbooks.

Phase 5: Scale breadth and depth

- Expand segments and features; introduce multi-region considerations; continuously refine suppression logic and omnichannel consistency.

12) Measuring impact and closing the loop

Tie engineering SLOs to business KPIs. Industry analyses and vendor case studies suggest meaningful gains when personalization is executed well, though you should validate them internally:

- McKinsey’s 2024 analysis links AI-driven personalization and campaign optimization with material revenue uplift in TMT sectors (McKinsey AI in TMT, 2024).

- Real-world eCommerce examples point to conversion and AOV lift when personalization is omnichannel and identity-aware; for example, Shopify’s B2B personalization roundup reports concrete improvements across brands in 2023–2024 (Shopify enterprise B2B personalization, 2024).

Instrument experiments end-to-end: define success metrics (conversion, AOV, churn, expansion), align attribution windows with delivery cadences, and maintain a single source of truth for incremental lift.
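For conversion-style metrics, a two-proportion comparison between treatment and control is a reasonable starting point for incremental lift. The sketch computes relative lift and a normal-approximation (Wald) 95% interval on the absolute difference; it assumes randomly assigned, independent users and large samples, and the counts in the example are made up.

```python
import math
from dataclasses import dataclass


@dataclass
class ArmResult:
    users: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.users


def lift_summary(control: ArmResult, treatment: ArmResult, z: float = 1.96) -> dict:
    """Relative lift plus a normal-approximation (Wald) interval on the absolute difference."""
    p_c, p_t = control.rate, treatment.rate
    diff = p_t - p_c
    # Unpooled standard error of the difference between two independent proportions.
    se = math.sqrt(p_c * (1 - p_c) / control.users + p_t * (1 - p_t) / treatment.users)
    return {
        "control_rate": p_c,
        "treatment_rate": p_t,
        "relative_lift": diff / p_c if p_c else float("nan"),
        "abs_diff_ci": (diff - z * se, diff + z * se),
    }


summary = lift_summary(ArmResult(10_000, 500), ArmResult(10_000, 560))
print(round(summary["relative_lift"], 3))  # 0.12
print(summary["abs_diff_ci"])
```

In this made-up example, a 12% relative lift (5.0% vs 5.6% conversion) still comes with an interval on the difference that spans zero, the kind of result to confirm with a larger sample or a longer test before reporting it as incremental lift.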

Real-time personalization in 2025 isn’t magic; it’s disciplined plumbing plus thoughtful governance. Start with latency budgets, choose the right activation path per use case, operationalize consent and identity, and keep your observability and costs in check. If you hold that line, the rest—ML sophistication, channel breadth, ROI—follows naturally.