Stop guessing. This copy‑paste prompt library helps you rebuild a funnel from a plain list of event names, pinpoint where conversion breaks, and decide whether you’re looking at a tracking/attribution artifact or a real UX/technical issue—across GA4, Mixpanel, and Amplitude. First pass usually takes 30–60 minutes.

- What you need: A list of event names (CSV, top‑100 chart, or export). Optional: rough counts by day, device, or source.

- What you’ll get: A canonical funnel mapping, the first failing step, likely root causes, and a verification plan using built‑in debug tools.

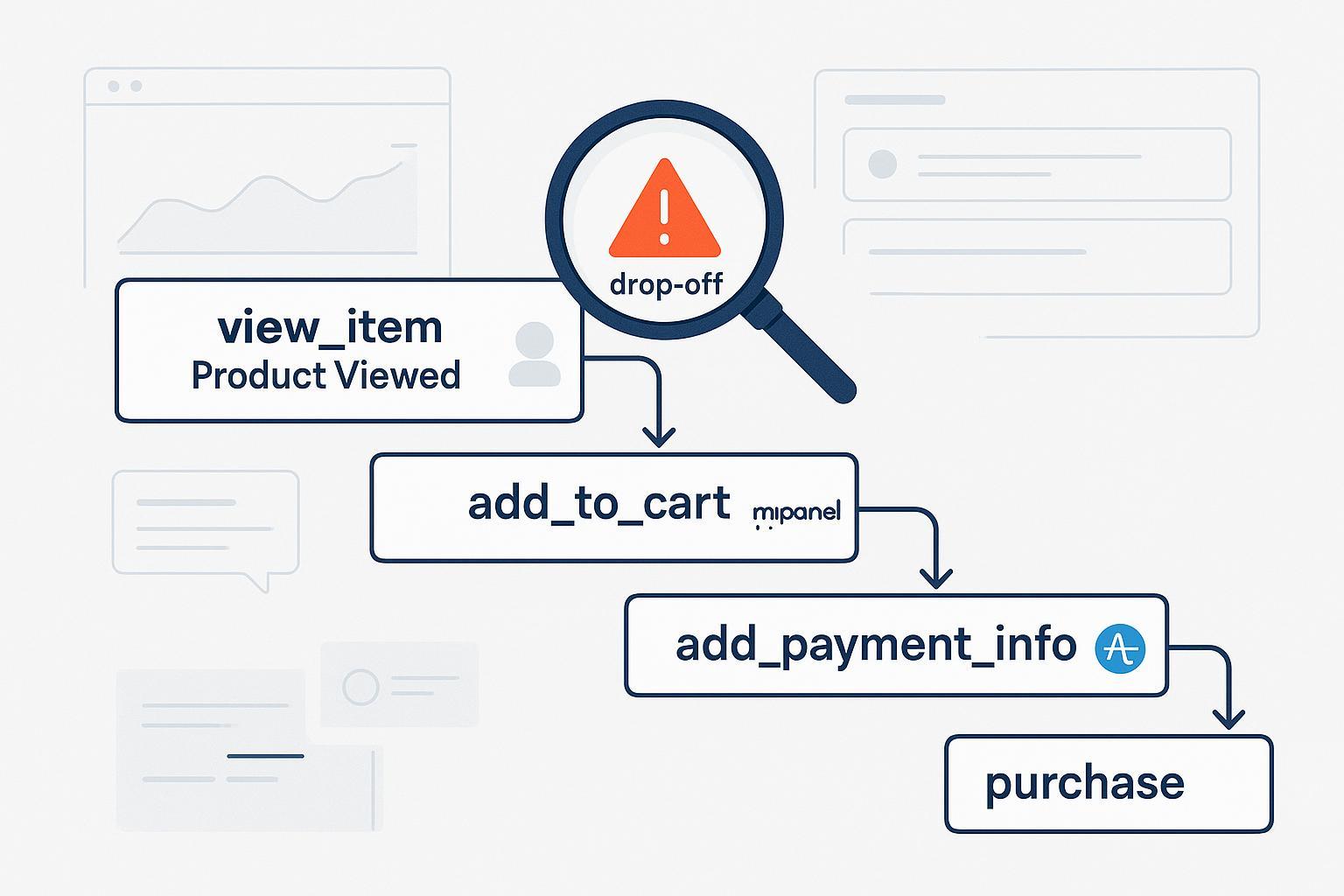

According to Google’s official documentation, ecommerce funnels in GA4 rely on recommended events like view_item, add_to_cart, begin_checkout, add_payment_info, and purchase, and downstream reports (like Checkout journey) depend on their correct sequencing and parameters (GA4 Help – Recommended Events; GA4 Help – Checkout journey report). Keep those anchors in mind as you work through prompts.

Preflight: 5‑Minute Sanity Checklist

Do these before analysis. They prevent false alarms and time sinks.

- Date range and seasonality

  - Compare the same weekday/week window week‑over‑week and year‑over‑year (e.g., Mon‑Sun vs prior Mon‑Sun). Promotions, holidays, and campaigns can skew apparent drops.

- Data freshness and latency

  - GA4 standard can lag up to 24–48 hours in processed reports; realtime/intraday is faster (GA4 Help – Data freshness, 2025). If your “drop” is in the last 24h, verify in Realtime.

- Attribution settings

  - GA4 uses Data‑Driven Attribution by default; changes to attribution settings or lookback can reassign credit without a real conversion change (GA4 Help – Attribution settings). DDA thresholds and model behavior may affect reported numbers (GA4 Help – Attribute credit for key events).

- Consent mode and tag order

  - With Consent Mode v2, misordered tags or late consent can suppress or reallocate conversions. Ensure your GA4 config fires before custom events and that consent is initialized early (e.g., GTM Consent Initialization) as discussed in the Bounteous “Top Consent Mode mistakes” (2025) and Simo Ahava’s Consent Mode v2 guide.

- Tool health check

  - Confirm the property/Project IDs match; sample a live session with GA4 DebugView or Mixpanel/Amplitude Live View to ensure events are arriving (GA4 Help – DebugView; Mixpanel – Live View; Amplitude – Live View).

If anything above looks off, resolve it first. Many “drops” are reporting artifacts.

Quick Event Synonym Cheat Sheet (GA4 ↔ Mixpanel ↔ Amplitude)

| Funnel Stage | GA4 | Mixpanel (typical) | Amplitude (typical) |
|---|---|---|---|
| Product view | view_item | Product Viewed | View Item |
| Add to cart | add_to_cart | Added to Cart | Add to Cart |
| Begin checkout | begin_checkout | Checkout Started | Checkout Started |
| Payment info added | add_payment_info | Payment Info Added | Payment Info Entered |
| Purchase | purchase | Order Completed | Order Completed / Purchase Completed |

- GA4 names are standardized per GA4 Recommended Events.

- Mixpanel/Amplitude names vary; these are common patterns (see Mixpanel – Funnels quickstart and Amplitude – Select events).
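If you would rather keep the cheat sheet as data than as a table, a minimal Python sketch could look like the one below. The synonym lists are copied from the table; the `normalize` and `map_events` helpers are hypothetical names for illustration, and the separator-stripping rule is an assumption about how your names vary.

```python
import re

# Canonical steps in funnel order; synonyms are taken from the cheat sheet table.
CANONICAL_STEPS = {
    "Product View": ["view_item", "Product Viewed", "View Item"],
    "Add to Cart": ["add_to_cart", "Added to Cart", "Add to Cart"],
    "Begin Checkout": ["begin_checkout", "Checkout Started"],
    "Payment Info Added": ["add_payment_info", "Payment Info Added", "Payment Info Entered"],
    "Purchase": ["purchase", "Order Completed", "Purchase Completed"],
}


def normalize(name: str) -> str:
    """Lowercase and drop separators so 'Add to Cart', 'add_to_cart', and 'addToCart' compare equal."""
    return re.sub(r"[^a-z0-9]", "", name.lower())


# Reverse lookup: normalized synonym -> canonical step.
LOOKUP = {normalize(s): step for step, names in CANONICAL_STEPS.items() for s in names}


def map_events(raw_names):
    """Return (step -> matched raw names, list of steps with no match)."""
    mapping = {step: [] for step in CANONICAL_STEPS}
    for raw in raw_names:
        step = LOOKUP.get(normalize(raw))
        if step:
            mapping[step].append(raw)
    missing = [step for step, hits in mapping.items() if not hits]
    return mapping, missing


mapping, missing = map_events(["view_item", "addToCart", "page_view", "Order Completed"])
print(mapping["Add to Cart"])  # ['addToCart']
print(missing)                 # ['Begin Checkout', 'Payment Info Added']
```

Exact matching after normalization is deliberately strict: it catches case and separator drift but not tense changes, which is why the prompts below still ask the LLM to propose alternates.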

How to Use These Prompts

- Paste into your LLM (GPT/Claude/Copilot). Provide your event list as-is; counts/properties optional.

- Each prompt lists Inputs and Expected Outputs. If you lack counts, the prompts infer likely issues from presence/absence and naming.

- Replace bracketed sections with your data. Keep prompts intact otherwise.

Prompt Pack 1 — Map Event Names to a Canonical eCommerce Funnel

Use this when you only have event names and need a clean funnel.

Role: Senior product analytics copilot

Task: Map my raw event names to a canonical ecommerce funnel.

Inputs:

- Event names (raw list): [paste your list]

- Platform(s): [GA4 / Mixpanel / Amplitude / unknown]

- Optional hints: [web/app, region, store type]

Instructions:

1) Normalize synonyms to the canonical steps: Product View → Add to Cart → Begin Checkout → Payment Info Added → Purchase.

2) If multiple candidates exist for a step, propose 1 primary and 1–2 alternates with rationale.

3) Flag missing critical steps and suggest likely names I should search for based on platform conventions.

4) Return:

- Funnel mapping (step → chosen event name)

- Alternates (if any)

- Missing steps + suggested search keywords

- Notes about potential misnaming (e.g., typos, past‑tense variants)

Output format: concise bullet list + a one‑line summary of confidence.

Tip: If your list lacks obvious checkout events, remember GA4’s recommended steps described in the GA4 ecommerce setup guide (Developers) and search for near‑matches (e.g., checkout_started vs begin_checkout).
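To run the near-match search from the tip yourself, one rough option is Python's standard `difflib`. This is a sketch, not a tuned matcher: the `near_matches` helper and the 0.5 cutoff are assumptions, and a low cutoff will surface false positives on long event lists.

```python
import difflib


def near_matches(target, raw_names, cutoff=0.5):
    """Return up to three raw names that resemble the target, best match first."""
    # Compare on a snake_case form so 'Checkout Started' and 'checkout_started' look alike.
    by_key = {name.strip().lower().replace(" ", "_"): name for name in raw_names}
    hits = difflib.get_close_matches(target, list(by_key), n=3, cutoff=cutoff)
    return [by_key[h] for h in hits]


events = ["view_item", "add_to_cart", "Checkout Started", "purchase"]
print(near_matches("begin_checkout", events))  # ['Checkout Started']
```

Treat any hit as a candidate to confirm in DebugView or Live View, not as a mapping.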

Prompt Pack 2 — Locate the First Failing Step (With or Without Counts)

If you have counts, use Prompt 2A. If not, use Prompt 2B.

Prompt 2A: With Rough Counts

Role: Funnel triage analyst

Task: Find the first failing step based on relative changes.

Inputs:

- Date ranges: [current window] vs [comparison window]

- Funnel steps (mapped): [Product View: X, Add to Cart: Y, Begin Checkout: Z, Payment Info: A, Purchase: B]

- Counts per step for both windows: [paste table or bullets]

Instructions:

1) Compute step‑to‑step conversion for each window and the deltas.

2) Identify the first step with a statistically or practically significant drop (>= X% absolute or Y% relative; choose a reasonable heuristic if sample sizes are small).

3) Suggest top 3 likely causes: traffic/attribution, tracking/consent, UX/technical.

4) Return a short action list for verification (debug tools and checks).

Output: 5–8 bullets + a 3‑line action plan.
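If you want to cross-check the LLM's arithmetic for Prompt 2A, the step-to-step math is small enough to sketch in Python. The counts below are made up for illustration, and the 20% relative threshold is an assumed heuristic standing in for the X/Y placeholders in the prompt.

```python
STEPS = ["Product View", "Add to Cart", "Begin Checkout", "Payment Info Added", "Purchase"]


def step_rates(counts):
    """Step-to-step conversion: each step's count divided by the previous step's count."""
    rates = {}
    for prev, cur in zip(STEPS, STEPS[1:]):
        rates[f"{prev} -> {cur}"] = counts[cur] / counts[prev] if counts[prev] else 0.0
    return rates


def first_failing_step(current, baseline, rel_threshold=0.20):
    """Return (transition, relative change) for the first transition whose rate
    fell by rel_threshold or more versus baseline, or None if none did."""
    cur, base = step_rates(current), step_rates(baseline)
    for transition in cur:
        if base[transition] == 0:
            continue
        rel_change = (cur[transition] - base[transition]) / base[transition]
        if rel_change <= -rel_threshold:
            return transition, rel_change
    return None


# Illustrative numbers only.
baseline = {"Product View": 10000, "Add to Cart": 2000, "Begin Checkout": 1000,
            "Payment Info Added": 800, "Purchase": 600}
current = {"Product View": 10000, "Add to Cart": 1900, "Begin Checkout": 570,
           "Payment Info Added": 456, "Purchase": 342}

transition, change = first_failing_step(current, baseline)
print(f"{transition}: {change:+.0%}")  # Add to Cart -> Begin Checkout: -40%
```

Note that later steps also show lower absolute counts here, but their step-to-step rates are unchanged, which is why only the first failing transition is reported.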

Prompt 2B: No Counts Available

Role: Funnel inference assistant

Task: Infer the most likely failing step using only event names.

Inputs:

- Event names present now: [paste]

- Event names present in “good” period (if available): [paste]

- Platform: [GA4/Mixpanel/Amplitude]

Instructions:

1) Compare presence/absence patterns to the canonical funnel.

2) If downstream steps exist but upstream are missing (e.g., Purchase present but Begin Checkout missing), hypothesize instrumentation gaps or alternate naming.

3) Rank the top 3 suspect steps with rationale and what to search for (alternate names, typos, case changes).

4) Provide a minimal next‑data request (e.g., “daily counts for 5 steps for last 14 days”).

Output: ranked suspects + 5 verification steps.

Why this works: Even without counts, absence/misalignment between expected sequential steps is a strong signal, consistent with general funnel reasoning recommended in analytics guides such as the Metabase funnel analysis overview and Adobe’s guided funnel analysis docs.
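The presence/absence check described above can be sketched in a few lines of Python. It assumes GA4-style names that have already been mapped to the canonical funnel; `upstream_gaps` and `newly_missing` are hypothetical helper names.

```python
FUNNEL = ["view_item", "add_to_cart", "begin_checkout", "add_payment_info", "purchase"]


def upstream_gaps(present):
    """Steps that are absent even though a later step in the funnel is present."""
    present = set(present)
    positions = [i for i, step in enumerate(FUNNEL) if step in present]
    if not positions:
        return []
    deepest = max(positions)
    return [step for step in FUNNEL[:deepest] if step not in present]


def newly_missing(now, good_period):
    """Event names seen in the 'good' period that no longer appear."""
    return sorted(set(good_period) - set(now))


now = ["view_item", "add_to_cart", "add_payment_info", "purchase"]
print(upstream_gaps(now))          # ['begin_checkout']
print(newly_missing(now, FUNNEL))  # ['begin_checkout']
```

A gap here suggests instrumentation or naming, since users cannot reach a downstream step without passing through the upstream one.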

Prompt Pack 3 — Isolate by Segment (Device, Source, Country, Web/App)

Role: Segmentation sleuth

Task: Identify whether the drop is isolated to specific segments.

Inputs:

- Event names per segment (if available) OR global names + suspected segments

- Segments to test: [device (mobile/desktop), source/medium, campaign, country/region, web vs app]

Instructions:

1) Propose a minimal set of segment cuts most likely to reveal issues (start with device and source).

2) For each suggested cut, list signals that would indicate a localized failure (e.g., Add to Cart disappears only on mobile Safari).

3) Recommend the smallest additional data I should collect (e.g., counts for 5 steps split by device for 7 days).

4) Provide a short “if segment X fails then test Y” matrix.

Output: bullets + a compact decision matrix.

Why segments: Differences by device or source often reveal platform‑specific bugs or blocked scripts (e.g., consent banners behaving differently), a well‑established practice in funnel diagnostics (see the general guidance in VWO’s conversion leak playbooks).
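Once you have counts split by segment, a quick way to see whether a drop is localized is to compute the same step conversion per segment and compare windows. The sketch below uses invented numbers and an assumed 20% relative threshold; `localized_failures` is a hypothetical helper.

```python
def rate(counts, upstream, downstream):
    """Conversion from upstream to downstream, or None if there is no upstream volume."""
    up = counts.get(upstream, 0)
    return counts.get(downstream, 0) / up if up else None


def localized_failures(current, baseline, upstream, downstream, rel_threshold=0.20):
    """Return {segment: relative change} for segments whose conversion fell by
    rel_threshold or more. Inputs are shaped {segment: {step: count}}."""
    flagged = {}
    for segment in baseline:
        base = rate(baseline[segment], upstream, downstream)
        cur = rate(current.get(segment, {}), upstream, downstream)
        if not base or cur is None:
            continue
        change = (cur - base) / base
        if change <= -rel_threshold:
            flagged[segment] = round(change, 3)
    return flagged


# Illustrative numbers only.
baseline = {"mobile": {"Add to Cart": 1000, "Begin Checkout": 500},
            "desktop": {"Add to Cart": 800, "Begin Checkout": 400}}
current = {"mobile": {"Add to Cart": 1000, "Begin Checkout": 250},
           "desktop": {"Add to Cart": 800, "Begin Checkout": 392}}

print(localized_failures(current, baseline, "Add to Cart", "Begin Checkout"))
# {'mobile': -0.5}
```

If every segment is flagged, the problem is probably global; if only one is, start reproducing on that device or source.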

Prompt Pack 4 — Tracking Integrity and Consent Mode Checks

Role: Tracking QA copilot

Task: Detect tracking gaps, misnaming, tag order issues, and Consent Mode pitfalls.

Inputs:

- Current event names: [paste]

- Recent changes (code deploys, CMP changes, GTM edits): [brief notes]

- Platform and tag manager: [GA4 + GTM / others]

Instructions:

1) Check for missing critical steps vs canonical funnel and propose likely alternate names.

2) List common misnaming patterns (snake_case vs camelCase, tense changes, pluralization) and how they map to expected steps.

3) Provide a debug plan:

- GA4: Use DebugView; verify event order and required parameters (transaction_id, items[]). Ensure GA4 config fires before custom events.

- GTM: Use Preview (Tag Assistant) to confirm triggers, dataLayer payloads, and Consent Initialization timing.

- Amplitude/Mixpanel: Use Live View; ensure consistent event names and key properties.

4) Highlight Consent Mode v2 tactics: early consent initialization, correct default state, and modeled data expectations.

Output: a step‑by‑step QA checklist you can follow live.

For background on consent and tag order effects, see the Bounteous 2025 guide to Consent Mode mistakes and Google’s Consent Mode help for Analytics. GA4 verification resources include DebugView and the Measurement Protocol verification guidance.
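For the parameter checks in the debug plan, it can help to validate a captured payload offline. The Python sketch below assumes you have copied a purchase push out of GTM Preview as a dict with an `ecommerce` object; that shape, the `check_purchase_payload` name, and the specific rules are assumptions to adapt to your own implementation.

```python
def check_purchase_payload(event):
    """Return a list of problems found in a purchase event dict."""
    problems = []
    ecommerce = event.get("ecommerce") or {}
    if event.get("event") != "purchase":
        problems.append("event name is not 'purchase'")
    if not ecommerce.get("transaction_id"):
        problems.append("missing transaction_id")
    items = ecommerce.get("items")
    if not isinstance(items, list) or not items:
        problems.append("items[] is missing or empty")
    else:
        for i, item in enumerate(items):
            if not (item.get("item_id") or item.get("item_name")):
                problems.append(f"items[{i}] has neither item_id nor item_name")
    if "value" in ecommerce and not ecommerce.get("currency"):
        problems.append("value is set but currency is missing")
    return problems


good = {"event": "purchase", "ecommerce": {
    "transaction_id": "T-1001", "value": 49.0, "currency": "USD",
    "items": [{"item_id": "SKU-1", "item_name": "Mug"}]}}
bad = {"event": "purchase", "ecommerce": {"value": 10, "items": []}}

print(check_purchase_payload(good))  # []
print(check_purchase_payload(bad))
# ['missing transaction_id', 'items[] is missing or empty', 'value is set but currency is missing']
```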

Prompt Pack 5 — Attribution Sanity: Real Drop or Reporting Reallocation?

Role: Attribution sense‑checker

Task: Determine if a conversion drop is due to attribution settings rather than true user behavior changes.

Inputs:

- Current attribution setting/model & lookback: [describe]

- Recent changes: [date + what changed]

- Channels impacted: [e.g., Paid Search, Organic, Direct]

Instructions:

1) Explain how switching to or from data‑driven attribution could reassign conversion credit across channels without changing total purchases.

2) Suggest a quick test: compare total purchases (order confirmations) vs channel‑assigned conversions pre/post change.

3) Recommend a consistent comparison window and lookback to minimize skew.

4) Provide a one‑pager summary I can send to stakeholders.

Output: brief explanation + test plan + stakeholder summary bullets.

GA4’s attribution setting nuances are documented in the GA4 attribution settings help (2024+) and considerations around thresholds/crediting are outlined in GA4 key events attribution credit. Independent practitioners also highlight common pitfalls (e.g., Napkyn’s attribution challenges in GA4).
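The quick test in step 2 comes down to comparing two deltas: total orders from your backend, and per-channel conversions from the analytics tool. Here is a hedged Python sketch with invented numbers; the 5% tolerance and the `attribution_sanity` helper are assumptions, not a GA4 feature.

```python
def attribution_sanity(orders_pre, orders_post, channels_pre, channels_post, tolerance=0.05):
    """Compare the change in backend orders with per-channel conversion changes."""
    order_change = (orders_post - orders_pre) / orders_pre
    moved = {}
    for channel, pre in channels_pre.items():
        if not pre:
            continue
        change = (channels_post.get(channel, 0) - pre) / pre
        if abs(change) > tolerance:
            moved[channel] = round(change, 3)
    if abs(order_change) > tolerance:
        verdict = "real change in orders"
    elif moved:
        verdict = "reporting reallocation likely"
    else:
        verdict = "no material change"
    return round(order_change, 3), moved, verdict


# Illustrative numbers only: totals are flat, but credit moved between channels.
result = attribution_sanity(
    orders_pre=1000, orders_post=990,
    channels_pre={"Paid Search": 400, "Organic": 300, "Direct": 300},
    channels_post={"Paid Search": 300, "Organic": 310, "Direct": 380},
)
print(result)
# (-0.01, {'Paid Search': -0.25, 'Direct': 0.267}, 'reporting reallocation likely')
```

Flat totals with large channel swings point to a reporting shift; a real drop in backend orders means the attribution change is at most part of the story.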

Prompt Pack 6 — UX/Technical Failure Hypotheses and Fast Tests

Role: Checkout reliability first‑responder

Task: Propose likely UX/tech failures given the failing step and design fast tests.

Inputs:

- Failing step(s): [e.g., Payment Info Added → Purchase]

- Platform context: [web/app, payment provider, major recent changes]

Instructions:

1) List 5–7 high‑probability issues for the failing step (e.g., payment errors, third‑party script outages, form validation problems, performance regressions).

2) For each, give a 3‑step verification:

- How to reproduce

- What to check (logs/status pages/console errors)

- How to confirm with analytics debug tools

3) Suggest a short‑term mitigation (fallbacks, retries, rollbacks) and a long‑term fix.

Output: prioritized hypothesis list with verifiable steps.

Examples to guide hypotheses: payment failures and mitigation tactics are well‑covered by Stripe’s resources like the Failed payment recovery overview and general payment processing best practices. Performance slowdowns impacting checkout are captured in web performance guidance such as web.dev on improving LCP and Core Web Vitals strategies.

Worked Mini‑Example (Event Names Only)

Scenario: You have these GA4‑style names in your top events for last week: view_item, add_to_cart, add_payment_info, purchase. begin_checkout is missing.

- Using Prompt Pack 1, you’d map: Product View → view_item; Add to Cart → add_to_cart; Begin Checkout → missing; Payment Info → add_payment_info; Purchase → purchase.

- Using Prompt 2B, you’d infer that, because add_payment_info is present, users are still getting through checkout, so the likely causes are misnaming (checkout_started vs begin_checkout) or a removed step. Ask for “events matching ‘checkout’ added/edited in last 30 days” and search for synonyms.

- Using Prompt Pack 4, you’d debug in GA4 DebugView while running a real checkout to see if any event fires between add_to_cart and add_payment_info. If not, confirm whether your store theme/CMS changed the step.

Quick Triage Playbook (If X, Then Y)

| Symptom | Likely Cause | Next Action |
|---|---|---|
| Purchase down; all steps down proportionally | Traffic/seasonality | Validate date ranges; check campaigns and sitewide health; confirm attribution settings |
| Purchase down; Add to Cart normal; Begin Checkout drops | Checkout entry bug, consent banner blocking, paywall/test gate | Reproduce on mobile and Safari; check CMP and tag order; verify begin_checkout in DebugView |
| Payment Info → Purchase drops sharply | Payment gateway errors or latency | Test multiple card types; check processor status and error logs; monitor console errors |
| Only mobile affected | Responsive layout/script blocked on mobile | Run mobile device tests; check JS errors; consent/UI interactions on touch |
| Organic unaffected; Paid Search down | Attribution change or campaign issues | Compare total orders vs channel conversions; review Ads/attribution changes |
| Events missing since deploy | Misnamed/removed tags, tag firing order | Use GTM Preview; check recent PRs; compare event names pre/post commit |

The logic mirrors tool‑agnostic funnel best practices like those summarized in the Metabase funnel guide and UX prioritization playbooks referenced by VWO’s UX audit content.

Verification and Monitoring Checklist

Follow these steps to confirm a diagnosis and stabilize tracking.

- Live validation

  - GA4: Open DebugView. Run a full test purchase. Verify event order and required parameters (e.g., unique transaction_id and items[]), as recommended in GA4 Developers’ verification guide.

  - GTM: Use Preview/Debug (Tag Assistant). Confirm Consent Initialization fires first, then GA4 config, then event tags. Inspect dataLayer payloads for each step.

  - Mixpanel: Use Live View and, if needed, enable JS SDK debug logging (`mixpanel.set_config({ debug: true })`).

  - Amplitude: Use Live View and User Lookup for event sequences.

- Consent mode specifics

  - Ensure consent is set early (CMP) and GA4 config isn’t delayed. Review modeling expectations in Google’s Consent Mode for Analytics and implementation pitfalls noted by Bounteous (2025).

- Attribution confirmation

  - Check whether a recent attribution model or lookback change coincides with the reported drop, per GA4 attribution settings. Compare total orders (backend) vs channel‑assigned conversions to separate reporting shifts from real behavior.

- Processing lag guardrail

  - If your “drop” is within the last 24–48 hours, confirm intraday/realtime vs processed reports as described in GA4 data freshness.

- Stabilize and alert

  - Once fixed, add lightweight monitors:

    - Daily counts for the 5 canonical steps (overall + by device).

    - Alert when any step drops >30% day‑over‑day outside seasonality windows.

  - Keep a simple synonyms registry for event names across tools; review after each deploy.
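The day-over-day alert in the monitoring list can start as a script before it becomes a dashboard rule. This Python sketch assumes daily counts are already sorted by date; `day_over_day_alerts` and the `skip_dates` set (your known seasonality windows) are hypothetical, and the 30% threshold comes from the checklist.

```python
def day_over_day_alerts(daily_counts, threshold=0.30, skip_dates=()):
    """daily_counts: {step: [(date, count), ...]} sorted by date.
    Returns (step, date, relative change) for each drop larger than threshold."""
    alerts = []
    for step, series in daily_counts.items():
        for (_, prev), (day, cur) in zip(series, series[1:]):
            if day in skip_dates or prev <= 0:
                continue
            change = (cur - prev) / prev
            if change < -threshold:
                alerts.append((step, day, round(change, 3)))
    return alerts


# Illustrative numbers only.
daily = {
    "begin_checkout": [("2025-01-06", 300), ("2025-01-07", 290), ("2025-01-08", 280)],
    "purchase": [("2025-01-06", 100), ("2025-01-07", 95), ("2025-01-08", 60)],
}
print(day_over_day_alerts(daily))  # [('purchase', '2025-01-08', -0.368)]
```

On low-volume steps a 30% swing can be noise, so consider a minimum count before alerting.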

Copy‑Paste Worksheet: Minimal Data You Can Request (Optional)

If you can ask for one small export, ask for this:

For the last 14 days (daily): counts of these events by device

- Product View [view_item / Product Viewed / View Item]

- Add to Cart [add_to_cart / Added to Cart / Add to Cart]

- Begin Checkout [begin_checkout / Checkout Started]

- Payment Info Added [add_payment_info / Payment Info Added/Entered]

- Purchase [purchase / Order Completed]

Format: CSV with columns [date, device, step, count].

Even this tiny dataset is enough to run Prompt 2A and Prompt Pack 3 with real numbers.
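If the export arrives in the [date, device, step, count] format, loading it takes only the standard library. A minimal Python sketch follows, with a few invented rows standing in for the real file; `load_counts` is a hypothetical helper, and the step labels are assumed to already use the canonical names.

```python
import csv
import io
from collections import defaultdict

# Stand-in for the exported file; replace with open("export.csv").read() or similar.
SAMPLE = """date,device,step,count
2025-01-06,mobile,Product View,1000
2025-01-06,mobile,Purchase,20
2025-01-07,mobile,Product View,1200
2025-01-07,mobile,Purchase,24
2025-01-06,desktop,Product View,500
2025-01-06,desktop,Purchase,25
"""


def load_counts(csv_text):
    """Sum counts over all dates into {device: {step: total}}."""
    totals = defaultdict(lambda: defaultdict(int))
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["device"]][row["step"]] += int(row["count"])
    return {device: dict(steps) for device, steps in totals.items()}


counts = load_counts(SAMPLE)
for device, steps in counts.items():
    # Overall view-to-purchase conversion per device.
    print(device, f"{steps['Purchase'] / steps['Product View']:.1%}")
# mobile 2.0%
# desktop 5.0%
```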

Frequently Missed Details (Save Yourself an Hour)

- GA4 transaction_id must be unique. Duplicates inflate or deflate purchases depending on de‑dupe behavior; verify alongside items[] and currency/value (see GA4 ecommerce setup guide).

- GA4 Checkout journey needs the proper sequence (begin_checkout → add_shipping_info → add_payment_info → purchase); gaps reduce interpretability (GA4 Checkout journey help).

- Consent banners can fire late on specific browsers/regions. Test on mobile Safari and EU IPs.

- Mixpanel/Amplitude event names are free‑form; maintain a mapping sheet so the team uses consistent canonical names.

- GA4 processed reports lag; always cross‑check Realtime before escalating a “same‑day” drop (GA4 data freshness).
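For the transaction_id point in the list above, a duplicate check over exported purchase events is a quick first pass. The Python sketch below assumes each event is a dict with a `transaction_id` key; that shape and the `duplicate_transaction_ids` helper are illustrative, not a GA4 export format.

```python
from collections import Counter


def duplicate_transaction_ids(purchase_events):
    """Return ({transaction_id: occurrences} for repeated IDs, count of events with no ID)."""
    ids = [e.get("transaction_id") for e in purchase_events]
    missing = sum(1 for tid in ids if not tid)
    counts = Counter(tid for tid in ids if tid)
    duplicates = {tid: n for tid, n in counts.items() if n > 1}
    return duplicates, missing


# Illustrative events only.
events = [
    {"transaction_id": "T-1001"},
    {"transaction_id": "T-1002"},
    {"transaction_id": "T-1001"},  # e.g., confirmation page reloaded
    {"transaction_id": ""},
]
print(duplicate_transaction_ids(events))  # ({'T-1001': 2}, 1)
```

Repeated IDs often trace back to a confirmation page that re-fires the purchase tag on reload; missing IDs usually mean the dataLayer push ran before the order data was available.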

What Good Looks Like (End State)

- A maintained synonyms registry for your stack (GA4/Mixpanel/Amplitude), tied to the five canonical funnel steps.

- A short LLM prompt pack saved in your ops docs, ready for paste.

- A weekly QA ritual: one real checkout in DebugView/Live View, confirm parameters and order.

- Alerts on daily step counts with basic segmentation (device/source).

- A crisp playbook for attribution or consent changes, including stakeholder messaging and before/after comparisons.

References and Further Reading

- GA4 official events and eCommerce setup: see the GA4 Recommended Events and Developers’ ecommerce setup guide.

- Consent and tag order: review Bounteous’ 2025 Consent Mode mistakes and Simo Ahava’s v2 explainer.

- Attribution: align stakeholders using GA4 attribution settings and context like Napkyn’s GA4 attribution challenges.

- Funnel methods: revisit the Metabase funnel analysis guide and Adobe guided funnels overview.

- Performance and checkout reliability: start with web.dev’s LCP optimization guide and Stripe’s failed payment recovery 101.