Schema changes are inevitable; chaos is not. The teams that deprecate fields and evolve datasets without outages all do the same things well: they treat schemas like APIs, enforce compatibility contracts, roll out changes in parallel, validate automatically, and communicate on a predictable schedule. This article distills what consistently works in practice across warehouses, lakehouses, and streaming systems.

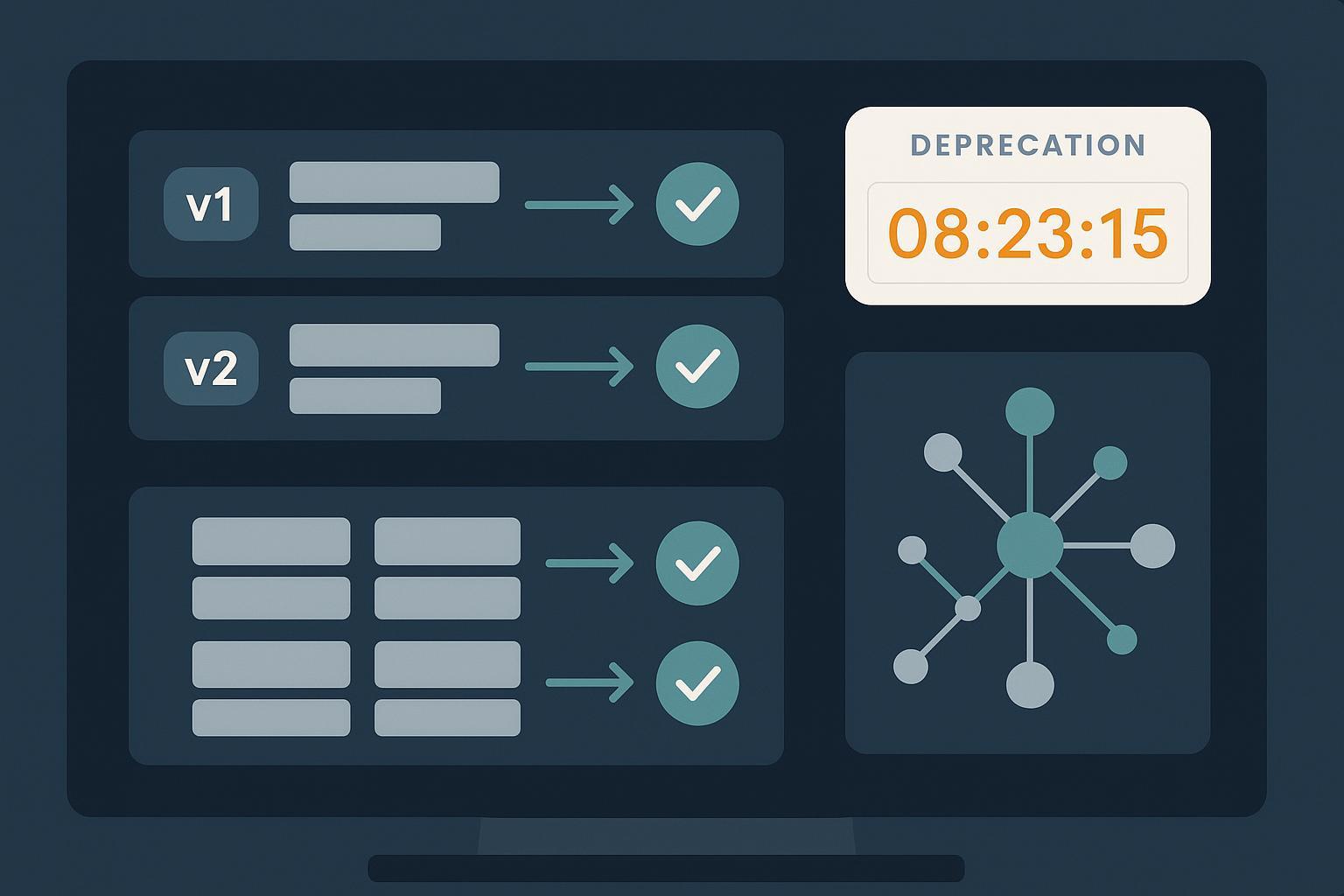

What “no chaos” looks like

- No surprise breakage in dashboards or ML jobs

- Clear deprecation windows with a documented migration path

- Dual-run or shadow validation before cutover

- Automated alerts for downstream consumers still on old versions

- A reversible plan and an audit trail of decisions

1) Make compatibility explicit: schemas are APIs

Use semantic versioning rules for datasets and events. In practice:

- Patch: No structural change (e.g., corrected descriptions or metadata)

- Minor: Backward-compatible additions that consumers can safely ignore (e.g., new nullable fields with defaults)

- Major: Any breaking removals/renames or type changes
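
One way to make the major-version rule mechanical is a small diff check that lists the reasons a change would be breaking. The sketch below is illustrative only: the `Field` tuple, the function names, and the choice to treat a new required field without a default as breaking are assumptions, not part of any registry or warehouse API.

```python
from typing import Dict, List, NamedTuple


class Field(NamedTuple):
    """Hypothetical, minimal description of one column or event field."""
    type: str
    nullable: bool = True
    has_default: bool = False


Schema = Dict[str, Field]


def breaking_changes(old: Schema, new: Schema) -> List[str]:
    """Return the reasons a change from `old` to `new` needs a major bump."""
    reasons = []
    for name, field in old.items():
        if name not in new:
            # A rename looks like a removal to consumers of the old name.
            reasons.append(f"removed or renamed field: {name}")
        elif new[name].type != field.type:
            reasons.append(
                f"type change on {name}: {field.type} -> {new[name].type}"
            )
    for name, field in new.items():
        # Assumption: additions are safe only if nullable or defaulted.
        if name not in old and not (field.nullable or field.has_default):
            reasons.append(f"new required field without default: {name}")
    return reasons


def needs_major_bump(old: Schema, new: Schema) -> bool:
    return bool(breaking_changes(old, new))


v1 = {"id": Field("long", nullable=False), "email": Field("string")}
v2 = dict(v1, country=Field("string", nullable=True, has_default=True))
print(needs_major_bump(v1, v2))  # additive change, so no major bump
```

A check like this is deliberately conservative: it cannot tell a safe type widening from an unsafe one, so any type change is flagged for human review.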

For streaming, encode guarantees with your schema registry. Confluent’s registry supports BACKWARD, FORWARD, and FULL modes, which check a new schema against the latest registered version, plus transitive variants of each, which check it against all prior versions. Read the exact behaviors in the Confluent Schema Registry compatibility modes documentation.

Format-specific rules matter:

- Avro. Adding a field with a default is backward compatible; removing a field that has no default breaks forward compatibility, because readers still on the old schema cannot fill it in; renames require aliases in the reader schema. The canonical rules are in the Avro specification: Schema Resolution and Aliases. Avro has no built-in “deprecated” flag, so use doc strings plus aliases.

- Protobuf. Avoid changing tags or types; instead add a new field, mark the old one deprecated, and, once it is removed, reserve its tag and name to prevent reuse, per the Protocol Buffers best practices and the proto3 language specification.

When to branch a new major version (v2):

- You must rename or remove a widely used field without an acceptable alias/compat plan

- A type change can’t be made compatible by widening (e.g., string → struct)

- You’re consolidating logic requiring re-materialization at scale

Tip: In registries, prefer FULL_TRANSITIVE for long-lived topics with many consumers; it raises the bar for evolution but sharply reduces breakage. In warehouses, mirror this with versioned tables and a stable view (e.g., table_current) pointing to the active version.

2) A deprecation lifecycle that actually works

Treat deprecations like API lifecycle events. A repeatable pattern:

- Propose an RFC. Describe the schema diff, impact analysis, rollout and rollback, test plan, and timeline. Post it in a central place (Confluence/Notion) and solicit sign-off.

- Parallelize early. Materialize v2 in parallel (or dual-write) while v1's schema stays frozen and keeps serving existing consumers.

- Validate in shadow. Compare v1 vs. v2 outputs on the same inputs; define acceptable deltas (e.g., <0.5% aggregate deviation where expected).

- Default new consumers to v2. Freeze v1 for new work.

- Cut over with a switch. Update stable views and feature flags; keep v1 available for a fixed window.

- Sunset on schedule. Remove v1 after the window, reserving tags/aliases where relevant.
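
The shadow-validation step can be sketched as a comparison of aggregate metrics computed from v1 and v2 on the same inputs. This is a minimal illustration: the function names and metric names are hypothetical, and the 0.5% default simply mirrors the example threshold above.

```python
from typing import Dict


def relative_deviation(v1_value: float, v2_value: float) -> float:
    """Relative difference of v2 from v1; infinite if v1 is 0 and v2 is not."""
    if v1_value == 0:
        return 0.0 if v2_value == 0 else float("inf")
    return abs(v2_value - v1_value) / abs(v1_value)


def shadow_compare(
    v1_metrics: Dict[str, float],
    v2_metrics: Dict[str, float],
    tolerance: float = 0.005,  # 0.5% aggregate deviation
) -> Dict[str, float]:
    """Return {metric: deviation} for metrics that are missing or out of tolerance."""
    failures = {}
    for name, v1_value in v1_metrics.items():
        if name not in v2_metrics:
            failures[name] = float("inf")  # metric missing from v2
            continue
        deviation = relative_deviation(v1_value, v2_metrics[name])
        if deviation > tolerance:
            failures[name] = deviation
    return failures


failures = shadow_compare(
    {"revenue": 1000.0, "orders": 200.0},
    {"revenue": 1003.0, "orders": 210.0},
)
print(sorted(failures))  # only "orders" exceeds the tolerance here
```

Where a delta is expected (for example, a corrected derivation), record the expected difference in the RFC rather than loosening the tolerance globally.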

For a policy baseline, many teams adapt norms similar to the Kubernetes API deprecation policy, which emphasizes advance notice, explicit timelines, and documented migration paths.

3) Roll out safely: dual-run, canaries, and stable views

Shadow/dual-run is the most reliable way to avoid surprises: run v1 and v2 simultaneously and compare outputs before making v2 the default. Uber’s platform teams have publicly detailed dual-run migration mechanics in large-scale data moves, such as the Hive → Spark SQL ETL migration, highlighting validation before cutover.

Practical rollout patterns:

- Canary consumers. Move a small set of downstream jobs/dashboards to v2 first; monitor error rates and KPIs.

- Stable views. Maintain a “current” view that points to the active version, so dashboard SQL and ad-hoc queries have a single target.

- Feature flags. Drive BI and serving-layer cutovers via a flag, not a hard DDL switch, to enable instant rollback.
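
The stable-view pattern can be demonstrated end to end with an in-memory SQLite database. This is a sketch under stated assumptions: the table and view names (`orders_v1`, `orders_v2`, `orders_current`) are hypothetical, and SQLite needs a drop-and-create where most warehouses offer `CREATE OR REPLACE VIEW`.

```python
import sqlite3
from typing import List


def point_current_view(conn: sqlite3.Connection, view: str, table: str) -> None:
    """Repoint the stable view at `table`. Identifiers are interpolated
    directly, so pass trusted, hard-coded names only."""
    conn.execute(f"DROP VIEW IF EXISTS {view}")
    conn.execute(f"CREATE VIEW {view} AS SELECT * FROM {table}")
    conn.commit()


def current_columns(conn: sqlite3.Connection, view: str) -> List[str]:
    """Column names a consumer sees when querying the stable view."""
    cursor = conn.execute(f"SELECT * FROM {view} LIMIT 0")
    return [column[0] for column in cursor.description]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders_v1 (id INTEGER, amt REAL)")
conn.execute("CREATE TABLE orders_v2 (id INTEGER, amount REAL, currency TEXT)")

point_current_view(conn, "orders_current", "orders_v1")  # before cutover
point_current_view(conn, "orders_current", "orders_v2")  # cutover
print(current_columns(conn, "orders_current"))

# Rollback is the same call with the previous target:
# point_current_view(conn, "orders_current", "orders_v1")
```

Because consumers only ever query `orders_current`, cutover and rollback are a single metadata change rather than an edit to every dashboard.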

Warehouse/lakehouse specifics you should know:

- Delta Lake (Databricks). Enable schema evolution and use column mapping to safely rename columns; type widening is supported within documented limits. See the Databricks Delta schema evolution guide and protocol/release notes such as Databricks Runtime 13.3 LTS for rename prerequisites.

- Apache Iceberg. Field IDs make renames and reorders metadata-only and safe; see Iceberg schema evolution.

- Apache Hudi. Supports schema evolution with auto-evolution options; see Hudi schema evolution docs.

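- BigQuery. You can add columns, relax REQUIRED → NULLABLE, and rename a column in place with ALTER TABLE ... RENAME COLUMN, but an in-place rename still breaks every query and view that uses the old name, and many type changes have no in-place path. For widely used tables, migrate via CTAS with views; refer to the BigQuery DDL reference.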

- Snowflake. ALTER TABLE supports ADD/DROP/RENAME, but dropping/renaming can break dependent views; validate impacts. See Snowflake ALTER TABLE.

4) Automate validation and block risky changes

The fastest way to end schema-related incidents is to make breakage impossible to merge.

In transformation code:

- dbt model contracts and versions. Contracts enforce column presence/types; model versions let you operate v1 and v2 in parallel and control access. Start with dbt model versions and the governance guidance on model versions in mesh.

- Data quality gates. Add schema checks (column set, types, nullability) to CI.

  - Great Expectations supports explicit schema expectations; see the Great Expectations schema expectations reference.

  - SodaCL lets you assert required/forbidden columns and types, with alerts via Soda Cloud; see SodaCL schema checks.
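
To show the shape of such a gate without tying it to a specific tool, here is a plain-Python sketch of a required/forbidden column check that a CI job could run against a table's introspected schema. It does not use the dbt, Great Expectations, or Soda APIs; the function names and the `{column: type}` input format are assumptions for illustration.

```python
import sys
from typing import Dict, Iterable, List


def schema_gate(
    actual: Dict[str, str],
    required: Dict[str, str],
    forbidden: Iterable[str] = (),
) -> List[str]:
    """Compare an actual {column: type} mapping against the contract."""
    violations = []
    for column, expected_type in required.items():
        if column not in actual:
            violations.append(f"missing required column '{column}'")
        elif actual[column] != expected_type:
            violations.append(
                f"column '{column}' is {actual[column]}, expected {expected_type}"
            )
    for column in forbidden:
        # Forbidden columns are typically deprecated fields that must be gone.
        if column in actual:
            violations.append(f"forbidden column '{column}' is present")
    return violations


def gate_exit_code(actual, required, forbidden=()) -> int:
    """Print violations to stderr and return a CI-friendly exit code."""
    violations = schema_gate(actual, required, forbidden)
    for violation in violations:
        print(f"SCHEMA GATE: {violation}", file=sys.stderr)
    return 1 if violations else 0
```

In a pipeline, the job would call `sys.exit(gate_exit_code(...))` so that a violation fails the build before the change can merge.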

At the platform boundary:

- Schema registry compatibility gates. Set compatibility (e.g., FULL_TRANSITIVE) to block incompatible schema updates in CI/CD as defined by the Confluent compatibility modes.
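
As a rough sketch of what a CI step might send to the registry, the helpers below build (but do not send) two HTTP requests: one to set a subject's compatibility level and one to test a candidate schema against the latest registered version. The paths and payloads follow the Confluent Schema Registry REST API as best understood here and should be verified against your registry version; the registry URL, subject name, and helper names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

CONTENT_TYPE = "application/vnd.schemaregistry.v1+json"


def set_compatibility_request(registry_url, subject, level="FULL_TRANSITIVE"):
    """Build a PUT /config/{subject} request that sets the compatibility level."""
    url = f"{registry_url.rstrip('/')}/config/{urllib.parse.quote(subject, safe='')}"
    body = json.dumps({"compatibility": level}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="PUT", headers={"Content-Type": CONTENT_TYPE}
    )


def check_compatibility_request(registry_url, subject, schema: dict):
    """Build a POST that tests `schema` against the subject's latest version."""
    url = (
        f"{registry_url.rstrip('/')}/compatibility/subjects/"
        f"{urllib.parse.quote(subject, safe='')}/versions/latest"
    )
    # The registry expects the schema itself as a JSON-encoded string.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": CONTENT_TYPE}
    )


def is_compatible(response_body: bytes) -> bool:
    """Parse the registry's {"is_compatible": ...} response."""
    return bool(json.loads(response_body).get("is_compatible", False))
```

A CI job would send the request with `urllib.request.urlopen(request)` and fail the build when `is_compatible` returns `False`.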

Observability and alerting:

- Automatically alert owners of downstream assets when a schema change lands or a deprecated version is still in use. Lineage-driven alerts keep focus on impacted consumers.

5) Lineage-first impact analysis

Before you deprecate, know exactly who you’ll affect.

- Open standards. Capture schema and column-level lineage using the OpenLineage SchemaDatasetFacet across orchestrators.

- Data catalog integration. Use DataHub (or commercial peers) to surface downstream dependencies and set schema assertions that detect column add/drop/type changes; see DataHub schema assertions.

With lineage in place, you can:

- Attach usage counts to fields (queries, jobs, owners) and focus communication

- Auto-generate migration work items for teams still on v1

- Produce a live “ready-to-sunset” checklist
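
Once lineage is available as a graph, impact analysis reduces to a downstream traversal. The sketch below assumes the lineage has already been exported into a plain `{upstream: [downstream, ...]}` dictionary; it does not call the OpenLineage or DataHub APIs, and the asset and owner names are made up.

```python
from collections import deque
from typing import Dict, List


def downstream_assets(lineage: Dict[str, List[str]], start: str) -> List[str]:
    """Breadth-first walk returning every asset downstream of `start`."""
    seen = {start}
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


def owners_to_notify(
    lineage: Dict[str, List[str]], owners: Dict[str, str], start: str
) -> Dict[str, List[str]]:
    """Group affected assets by owner; assets without an owner are flagged."""
    grouped: Dict[str, List[str]] = {}
    for asset in downstream_assets(lineage, start):
        grouped.setdefault(owners.get(asset, "unowned"), []).append(asset)
    return grouped


lineage = {
    "orders_v1": ["daily_revenue", "churn_features"],
    "daily_revenue": ["exec_dashboard"],
    "churn_features": ["churn_model"],
}
owners = {"daily_revenue": "analytics", "exec_dashboard": "analytics",
          "churn_model": "ml-platform"}
print(owners_to_notify(lineage, owners, "orders_v1"))
```

The "unowned" bucket is worth surfacing explicitly: assets with no owner are the ones most likely to break silently at cutover.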

6) Streaming schema specifics: Avro and Protobuf

Avro playbook:

- Additive change? Add a nullable field with a sensible default.

- Rename? Give the field its new name in the reader schema and list the old name in that field's aliases, so data written under the old name still resolves, per the Avro specification.

- Remove? Keep the field for one deprecation window with a default; update readers to ignore it; then remove in v2.
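
To make the alias and default mechanics concrete, here is a deliberately simplified resolver over decoded records. It only mimics how a reader schema maps names, aliases, and defaults; it is not Avro's actual resolution algorithm or any Avro library's API, and the `Customer` schema is hypothetical.

```python
READER_SCHEMA = {
    "type": "record",
    "name": "Customer",
    "fields": [
        {"name": "id", "type": "long"},
        # Renamed from "email"; the alias lets old data still resolve.
        {"name": "email_address", "type": "string", "aliases": ["email"]},
        # Additive change: nullable with a default.
        {"name": "country", "type": ["null", "string"], "default": None},
    ],
}


def resolve(record: dict, reader_schema: dict) -> dict:
    """Project a record written with an older schema onto the reader schema."""
    resolved = {}
    for field in reader_schema["fields"]:
        candidates = [field["name"], *field.get("aliases", [])]
        for name in candidates:
            if name in record:
                resolved[field["name"]] = record[name]
                break
        else:
            # No writer value under any known name: fall back to the default.
            if "default" not in field:
                raise ValueError(f"no value or default for {field['name']}")
            resolved[field["name"]] = field["default"]
    return resolved  # writer fields unknown to the reader are dropped


old_record = {"id": 7, "email": "a@example.com", "legacy_flag": True}
print(resolve(old_record, READER_SCHEMA))
```

The `legacy_flag` field shows the removal path: once readers no longer declare it, the value is ignored, which is why readers should be updated before the writer stops producing it.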

Protobuf playbook:

- Rename? Add a new field with a new tag and mark the old field deprecated; when the old field is finally removed, reserve its tag and name to prevent reuse, following Protobuf best practices.

- Remove? Only after at least one full release cycle with the field marked deprecated; reserve the tag and name in the same change that deletes the field.

- Oneofs and enums? Deprecate enum values instead of deleting them, and reserve the numbers of any you do remove; prefer additive expansions; follow the proto3 specification.
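
The tag rules above lend themselves to a lint step. The sketch below works on a hand-built `{tag: (name, type)}` mapping rather than parsing `.proto` files, so the input format and function name are assumptions; it also conservatively flags a name change on an existing tag, which is stricter than wire compatibility alone requires.

```python
from typing import Dict, FrozenSet, List, Tuple

Fields = Dict[int, Tuple[str, str]]  # tag number -> (field name, field type)


def tag_violations(
    old_fields: Fields,
    new_fields: Fields,
    reserved_numbers: FrozenSet[int] = frozenset(),
    reserved_names: FrozenSet[str] = frozenset(),
) -> List[str]:
    """List changes that reuse, retype, or drop tags without reserving them."""
    problems = []
    for number, (name, field_type) in new_fields.items():
        if number in old_fields and old_fields[number] != (name, field_type):
            problems.append(
                f"tag {number} changed from {old_fields[number]} to {(name, field_type)}"
            )
        if number in reserved_numbers:
            problems.append(f"tag {number} is reserved and must not be reused")
        if name in reserved_names:
            problems.append(f"name '{name}' is reserved and must not be reused")
    for number, (name, _) in old_fields.items():
        if number not in new_fields and number not in reserved_numbers:
            problems.append(f"tag {number} ({name}) was removed but not reserved")
    return problems


old = {1: ("id", "int64"), 2: ("email", "string")}
new = {1: ("id", "int64"), 3: ("email_address", "string")}
print(tag_violations(old, new, frozenset({2}), frozenset({"email"})))
```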

Registry settings:

- Pick a compatibility mode that enforces your policy and blocks risky updates in CI; see Confluent’s compatibility modes.

7) Communication that prevents surprises

Technical work fails without communication discipline. A dependable cadence:

- T-90 days. Publish an RFC (context, schema diff, migration guide, timeline, rollback). Share lineage impact and a test dataset.

- T-60 days. Start dual-write/materialize v2; open office hours; nudge new consumers to v2.

- T-30 days. Default new work to v2; auto-email/Slack any owners still on v1 with usage evidence.

- T-7 days. Freeze v1 for new use; ship the final reminder.

- T+0. Cut over by switching the stable view/flag; monitor; keep rollback ready.

- T+30. Remove v1 (or mark it read-only) and publish a short post-implementation review.
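
Publishing concrete dates rather than relative offsets removes ambiguity from the notice. A small sketch that derives the calendar from a chosen cutover date follows; the milestone labels paraphrase the cadence above, and the function name and example date are arbitrary.

```python
from datetime import date, timedelta
from typing import List, Tuple

# (offset in days from cutover, milestone)
MILESTONES = [
    (-90, "Publish RFC, lineage impact, and test dataset"),
    (-60, "Start dual-write / materialize v2; open office hours"),
    (-30, "Default new work to v2; notify remaining v1 owners"),
    (-7, "Freeze v1 for new use; send final reminder"),
    (0, "Cut over stable view/flag; monitor; keep rollback ready"),
    (30, "Remove v1 or mark read-only; publish review"),
]


def deprecation_schedule(cutover: date) -> List[Tuple[date, str]]:
    """Turn the T-minus cadence into calendar dates for a given cutover."""
    return [(cutover + timedelta(days=offset), label) for offset, label in MILESTONES]


for when, label in deprecation_schedule(date(2025, 6, 30)):
    print(when.isoformat(), label)
```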

Model your policy on well-understood API norms like the Kubernetes deprecation policy—announce early, document clearly, and keep a predictable window.

Compliance note: Deprecations are not just housekeeping. The data minimization and storage limitation principles in GDPR Article 5 can require retiring unnecessary PII fields from analytics datasets.

8) Common pitfalls and how to avoid them

- Overly strict compatibility slows delivery. FULL_TRANSITIVE everywhere can block harmless changes. Apply stricter modes to long-lived, widely consumed datasets; allow more agility on internal or experimental ones.

- View abstraction debt. A single “current” view is powerful, but if you never remove old versions, costs and confusion rise. Enforce a sunset window.

- Streaming readers pinning schemas. Long-lived consumers can pin old schemas. Detect and notify via registry metrics and lineage; consider consumer-side compatibility tests.

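- Warehouse-specific footguns. A BigQuery column rename, even where ALTER TABLE ... RENAME COLUMN applies, breaks every query that references the old name; plan CTAS + view migration for widely used tables. Snowflake renames can break dependent views, so scan dependencies first.

- Avro unions and type promotions. Be precise with union branch ordering, and rely only on the promotions the spec allows (int → long, float, or double; long → float or double; float → double; string ↔ bytes), per the Avro Schema Resolution rules.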

- “Silent” breaking derivations. Changing derivation logic without a schema change can still break expectations. Shadow-compare aggregates and critical metrics during dual-run.

9) A pragmatic 90‑day deprecation runbook

Day 0–10: Proposal

- Draft RFC with schema diff, justification, migration path, and rollback

- Run lineage impact; list owners of affected downstream assets

- Set registry compatibility and dbt model versioning plan

Day 11–30: Prepare and parallelize

- Materialize v2 or enable dual-write; backfill if needed

- Add dbt contracts and data quality checks (GX/Soda) in CI

- Create a stable view (table_current) pointing to v1; plan the cutover switch

- Publish deprecation notice with dates; provide sample queries and test datasets

Day 31–60: Validate and shift defaults

- Shadow-compare v1 vs. v2 outputs; investigate discrepancies

- Move canary consumers to v2; monitor KPI deltas and error budgets

- Default all new work to v2; freeze v1 for new use

- Auto-notify remaining v1 users weekly with usage evidence

Day 61–90: Cutover and sunset

- Cut over the stable view/flag to v2; keep rollback path warm

- Monitor for 1–2 weeks; resolve stragglers

- Remove v1 (or archive), reserve Protobuf tags, document Avro aliases

- Close out with a post-implementation note and update the changelog

10) Final checklist (print-ready)

Governance

- [ ] RFC approved with schema diff, timeline, rollback

- [ ] Registry compatibility mode configured (topics/events)

- [ ] dbt model versions and contracts defined

Parallelization & Validation

- [ ] v2 materialized or dual-write enabled; backfilled

- [ ] Shadow/dual-run comparisons defined and monitored

- [ ] Data quality checks (GX/Soda) pass in CI and prod

Impact & Communication

- [ ] Lineage report generated; owners notified

- [ ] Deprecation notice published with T‑dates and migration steps

- [ ] Canary consumers migrated; stable view/feature flag ready

Cutover & Sunset

- [ ] Cutover executed; monitoring green; rollback plan tested

- [ ] v1 frozen and then removed on schedule; tags/aliases handled

- [ ] Changelog and documentation updated; post-implementation review complete

References for deeper reading

- Schema compatibility and registries: Confluent Schema Registry compatibility modes

- Formats: Avro specification: Schema Resolution and Aliases, Protocol Buffers best practices

- Lakehouse/warehouse specifics: Databricks Delta schema evolution, Apache Iceberg schema evolution, Apache Hudi schema evolution, BigQuery DDL reference, Snowflake ALTER TABLE

- Transformation, quality, lineage: dbt model versions, Great Expectations schema expectations, SodaCL schema checks, OpenLineage SchemaDatasetFacet, DataHub schema assertions

- Policy and case studies: Kubernetes API deprecation policy, Uber’s Hive → Spark SQL migration, GDPR Article 5