Why Win-Back Timing Matters—Beyond Cart-Abandon (2025)

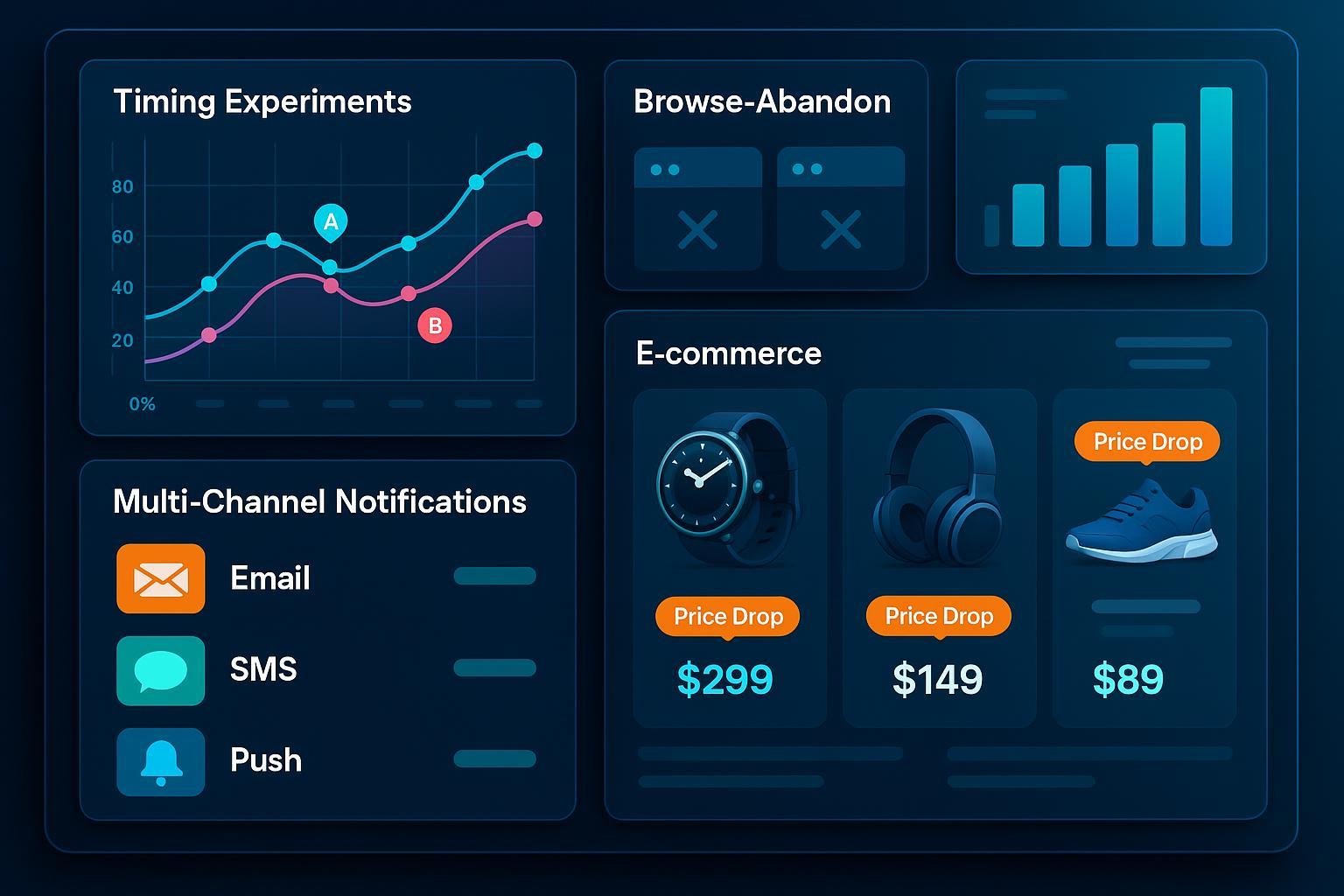

For experienced eCommerce retention and CRM marketers, 2025 brings both urgency and opportunity for mastering win-back flows using browse-abandon and price-drop signals. While abandoned cart tactics are well-worn, advanced behavioral triggers now determine which lapsed customers you recapture—and whether you do so profitably, without burning trust or budget.

With omnichannel fatigue, stricter privacy rules (think Apple Mail Privacy Protection and WhatsApp messaging policies), and channel fragmentation, timing has become a performance lever no brand can ignore. In our own work, we've found that rapid signal-based touchpoints can double ROI, but only if frequency and message discipline are rigorously maintained. Let's break down real timing data, experiment frameworks, and lessons learned from both wins and fatigue-driven failures.

2025 Benchmarks: Timing, Engagement, and Conversion

Timing Bands and Channel Performance

| Trigger Signal | First Outreach Timing | Open Rate | Conversion Rate | Win-Back Rate |
|---|---|---|---|---|
| Browse-Abandon | Immediate (<1h); 24h follow-up | 20–35% | 3–7% | 5–10% |
| Price-Drop | 24–48h post-drop | 25–40% | 5–10% | 10–15% |
| Cart-Abandon (control) | 30–60min | 40–50% | 10–15% | 15–25% |

Source synthesis: ConvertCart 2025 benchmarks, PayPro Global, PropelloCloud (2025).

Key takeaway: Engagement is highest when signals are acted upon fast, and conversion drops sharply after the first hour. For price-drop, outreach 24–48 hours after the price change yields markedly better returns. Cart-abandon remains king for open rate and conversion, but browse-abandon and price-drop are outperforming cart-based flows in multi-signal stacks, especially for dormant and high-value segments.

Proven Experiment Frameworks for Timing Win-Backs

Setting Up

- Objective: e.g., Achieve 15% lift in repeat purchase from browse-abandon segment in 30 days.

- Segmentation: By inactivity duration (30d, 60d, 90d+), value tier (RFM), and behavioral trigger (browse-abandon, price-drop).

- Experiment Design: Factorial A/B/C tests on timing (immediate, 24h, 3d), frequency (one, two, three touches per cycle), and incentive (discount, free content, urgency).

- Suppression Protocols: Automatically exclude users who purchased or contacted support in the last 7 days.

- Channels: Orchestrate tests across Email, SMS, Push, WhatsApp—ensure opt-ins and compliance.

- Metrics: Track opens, clicks, attributed conversion, unsubscribe/opt-out rates, and win-back.

Resource: Workflow template based on Hightouch AI Experiment Best Practices (2025) and Statsig Experimentation Guides (2025).
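
The setup above can be sketched in code. This is a minimal illustration, not a template from the cited resources: it enumerates the full factorial grid (3 timings x 3 frequencies x 3 incentives = 27 cells) and assigns each user to a cell deterministically by hashing. The experiment name, the 10% holdout share, and the function `assign_cell` are assumptions made for the example.

```python
import hashlib
from itertools import product

# Factor levels taken from the experiment design above.
TIMINGS = ["immediate", "24h", "3d"]
TOUCHES = [1, 2, 3]
INCENTIVES = ["discount", "free_content", "urgency"]

# Full factorial: every combination of timing x frequency x incentive.
CELLS = list(product(TIMINGS, TOUCHES, INCENTIVES))

HOLDOUT_SHARE = 0.10  # assumption: 10% of users get no outreach (control group)


def assign_cell(user_id: str, experiment: str = "winback-timing-v1"):
    """Deterministically map a user to the holdout (None) or one factorial cell."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16)
    # Use different parts of the hash for the holdout decision and the cell choice.
    if (bucket % 10_000) / 10_000 < HOLDOUT_SHARE:
        return None  # holdout / control group
    return CELLS[(bucket // 10_000) % len(CELLS)]


if __name__ == "__main__":
    print(len(CELLS), "cells")
    print(assign_cell("user-123"))
```

Hashing on the experiment name plus user ID keeps a user in the same cell across sends without storing the assignment. With 27 cells, each segment needs enough volume per cell to read results; dropping a factor is a reasonable choice when it does not.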

Real-World Experiment Example

In our 2025 test with a DTC fashion brand, we segmented lapsed users (60–90 days inactive) who had either:

- Browsed and abandoned 2+ products (no add-to-cart)

- Viewed a product that later dropped price by 5%+

Results:

- Browse-abandon (push and email within 1h post-session): 7.8% win-back rate. Delayed outreach (>24h): 3% win-back.

- Price-drop (email within 24h of the price change): 14.2% win-back. A second touch at 72h (SMS or WhatsApp) nudged win-back to 18% among the high-value segment.

- Overlaying both signals (two touches across two channels): 16% win-back, but unsubscribe/opt-out rates rose by 28% when both triggered within 48h.
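
To put the browse-abandon result in comparable terms, the arithmetic below expresses the immediate versus delayed rates as a relative lift and as a percentage-point gain. The helper names are hypothetical. Sample sizes are not reported above, so this sketch does not attempt a significance test.

```python
def relative_lift(treatment_rate: float, baseline_rate: float) -> float:
    """Relative change of treatment over baseline (0.25 means +25%)."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return treatment_rate / baseline_rate - 1.0


def point_difference(treatment_rate: float, baseline_rate: float) -> float:
    """Absolute difference in percentage points."""
    return (treatment_rate - baseline_rate) * 100


# Browse-abandon win-back rates reported above, as fractions.
immediate, delayed = 0.078, 0.03

lift = relative_lift(immediate, delayed)
points = point_difference(immediate, delayed)
print(f"relative lift: {lift:.0%}, absolute gain: {points:.1f} points")
```

Immediate outreach comes out at roughly 160% above the delayed baseline, a gain of 4.8 percentage points. The 18% price-drop figure applies only to the high-value segment, so it should not be compared with the overall 14.2% in the same way.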

Advanced Segmentation & Dynamic Suppression—Avoiding Fatigue, Overlap, and Privacy Risks

Pitfall #1: Signal Overlap & Fatigue

Sending both browse-abandon and price-drop triggers to the same user in rapid succession often backfires. In our trials, opt-out rates doubled when users received two messages within 24 hours about related products—especially if messaging was nearly identical.

Best Practice:

- Implement suppression windows for multi-triggered users. Prioritize the higher-value signal (usually price-drop) and delay the secondary trigger by 48–72h. Use dynamic suppression: if engagement is detected, pause the next trigger in the cycle.

- Cap total outreach attempts at three per customer per two-week cycle.

See MessageGears Win-back Strategies and the DotDigital Win-back Guide.
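
The suppression rules above could be expressed as a single decision function. This is a sketch under stated assumptions, not any vendor's API: `UserState` and `next_send` are hypothetical names, the 48h delay is the lower bound of the 48–72h window, and "engagement" means any open, click, or visit recorded after the last send.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional

# Higher number = higher priority; price-drop outranks browse-abandon per the text.
PRIORITY = {"price_drop": 2, "browse_abandon": 1}
SECONDARY_DELAY = timedelta(hours=48)  # assumption: lower bound of the 48-72h window
CYCLE_LENGTH = timedelta(days=14)
MAX_TOUCHES_PER_CYCLE = 3


@dataclass
class UserState:
    sent_at: List[datetime] = field(default_factory=list)  # past sends in this flow
    last_engaged_at: Optional[datetime] = None  # last open, click, or visit


def next_send(state: UserState, pending: List[str], now: datetime) -> Optional[str]:
    """Return the trigger to send now, or None if the user should be suppressed."""
    if not pending:
        return None
    recent = [t for t in state.sent_at if now - t <= CYCLE_LENGTH]
    if len(recent) >= MAX_TOUCHES_PER_CYCLE:
        return None  # frequency cap reached for this cycle
    last_sent = max(recent) if recent else None
    if last_sent and state.last_engaged_at and state.last_engaged_at >= last_sent:
        # Dynamic suppression: the user engaged after the last message.
        # The caller decides when to resume (for example, when a new cycle starts).
        return None
    if last_sent and now - last_sent < SECONDARY_DELAY:
        return None  # still inside the suppression window
    # Send the highest-priority pending trigger; the other waits for a later call.
    return max(pending, key=lambda trigger: PRIORITY.get(trigger, 0))
```

Calling `next_send` once per scheduling tick keeps all rules in one place, which also makes it easier to document exclusions for each experiment.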

Pitfall #2: Attribution Confusion

With multi-channel, multi-signal experiments, clear revenue attribution becomes challenging.

Best Practice:

- Use unique CTAs and offer codes per trigger type and channel. Rely on multi-touch attribution models, not just last-click. Document exclusions and control group behavior for every experiment.
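
As an illustration of the practice above, the sketch below builds one offer code per trigger and channel, then splits an order's revenue across touches with a linear (equal-credit) multi-touch model. Linear attribution is only one of several multi-touch models and is chosen here for simplicity. The code format, the `WB25` prefix, and both function names are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Touch = Tuple[str, str]  # (trigger, channel), e.g. ("price_drop", "email")


def _slug(value: str) -> str:
    """Uppercase a label and drop separators so it is safe inside a code."""
    return value.replace("_", "").replace("-", "").upper()


def offer_code(trigger: str, channel: str, experiment: str = "WB25") -> str:
    """Build a code that encodes experiment, trigger, and channel."""
    return f"{experiment}-{_slug(trigger)}-{_slug(channel)}"


def linear_attribution(touches: List[Touch], revenue: float) -> Dict[Touch, float]:
    """Split one order's revenue equally across all touches that preceded it."""
    credit: Dict[Touch, float] = defaultdict(float)
    if not touches:
        return {}
    share = revenue / len(touches)
    for touch in touches:
        credit[touch] += share
    return dict(credit)


if __name__ == "__main__":
    path = [("browse_abandon", "push"), ("price_drop", "email")]
    print(offer_code("price_drop", "email"))
    print(linear_attribution(path, 100.0))
```

Comparing the linear result with last-click credit for the same orders shows how much a single-touch view would over-credit the final channel.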

Personalization, Offers, and the Incentive Decay Curve

A/B testing shows that personalized timing, based on historic purchase cadence and behavioral triggers, can lift response rates over generic recency/frequency rules. For incentive offers:

- Limited-time offers in win-back flows can drive up to 30% higher conversion when paired with browse-abandon signals (Alexander Jarvis, 2025).

- For price-sensitive churn, immediate re-engagement with a modest discount is most effective; for feature-driven churn, delay until relevant product improvements are ready (ProsperStack, 2025).

Omnichannel Coordination in Privacy-Conscious 2025

With email engagement waning and privacy rules tightening, 2025 sees marketers orchestrating trigger flows across:

- Email: Best for the first, nurturing outreach; optimal at ~45 days of inactivity.

- SMS/WhatsApp: Higher real-time engagement, but a higher risk of fatigue; best timed at 60–75 days of inactivity, or as a second touch after email.

- Push: Used for rapid post-browse or price-drop notifications within 1–24 hours (PropelloCloud).

- Always document opt-in status and suppress outreach to contacts who've recently declined notifications.
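
The channel guidance above can be read as a simple routing rule. The sketch below is one possible interpretation, not a prescribed policy: `pick_channel` is a hypothetical function, the order of checks is an assumption, and `opted_in` is assumed to already exclude contacts who recently declined notifications.

```python
from typing import Optional, Set


def pick_channel(
    days_inactive: int,
    hours_since_signal: Optional[float],
    opted_in: Set[str],
    email_already_sent: bool = False,
) -> Optional[str]:
    """Choose a channel for the next touch, or None if nothing is appropriate."""
    # Push: rapid follow-up within 24 hours of a browse or price-drop signal.
    if hours_since_signal is not None and hours_since_signal <= 24 and "push" in opted_in:
        return "push"
    # SMS/WhatsApp: at 60-75 days of inactivity, or as a second touch after email.
    if 60 <= days_inactive <= 75 or email_already_sent:
        for channel in ("sms", "whatsapp"):
            if channel in opted_in:
                return channel
    # Email: first, nurturing outreach from roughly 45 days of inactivity.
    if days_inactive >= 45 and not email_already_sent and "email" in opted_in:
        return "email"
    return None


if __name__ == "__main__":
    print(pick_channel(50, None, {"email", "sms"}))
    print(pick_channel(65, 2.0, {"push", "email"}))
```

Keeping opt-in checks inside the routing function means no channel can be selected without consent, whatever the timing rule says.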

Documenting Failures: Lessons Learned from Losing Customers

The biggest advances came not just from tracking open/click rates, but from understanding why triggers failed:

- Overlapping signals and channels = 2x opt-outs.

- Incentives offered too late after disengagement = 50% lower win-back rates.

- Attribution missed when control groups weren’t explicit—leading to false positives about channel ROI.

As recommended in Statsig Experimentation Best Practices (2025) and GrowthLoop Win-back Strategies, always build suppression rules and control groups into experimental design.

Quick-Start Action Steps for High-ROI Win-Back Flows (2025)

- Segment your lapsed users by signal, inactivity duration, and value tier.

- Design A/B/C experiments with different trigger timings (immediate, 24h, 3d, 7d).

- Limit touches to three per cycle and suppress triggers for recent purchasers/support contacts.

- Prioritize cross-channel orchestration for Email, SMS, Push, WhatsApp with clear opt-in management.

- Attribute every result by signal/channel using unique codes, multi-touch models, and documented control groups.

- Review privacy compliance monthly; adapt timing and suppression as rules evolve.

- Iterate based on fatigue and conversion metrics—track not just wins but lessons from opt-outs and control failures.

Further Reading & 2025 References

- ConvertCart: eCommerce Conversion Rates by Signal (2025)

- PropelloCloud: 2025 Win-back Campaigns

- PayPro Global: Automated Win-back Triggers

- MessageGears: Win-back Strategies

- Alexander Jarvis: Win-back Rate Analysis 2025

- Braze: Multi-channel Win-back Campaigns

- Statsig: Experimentation Playbook (2025)

Mastering the precise timing of browse-abandon and price-drop triggered win-back flows in 2025 means experimentally layering speed, suppression, and segmentation—always learning from both success and fatigue. As privacy shifts and channel saturation continue, only data-backed, disciplined experimenters will sustain high ROI and customer trust in eCommerce retention.